SIST-TP CEN/TR 17602-30-03:2022

Space product assurance - Human dependability handbook

The handbook defines the principles and processes of human dependability as an integral part of system safety and dependability. The handbook focuses on human behaviour and performance in different operational situations, for example in a control centre during handover to routine mission operation, routine mission operation, satellite maintenance or emergency operations.

This handbook illustrates the implementation of human dependability in the system life cycle, where in any project phase there is a need to systematically include considerations of the:

- Human element as part of the space system,

- Impact of human behaviour and performance on safety and dependability.

Within this scope, the main application areas of the handbook are to support the:

a. Development and validation of space system design during the different project phases,

b. Development, preparation and implementation of space system operations including their support such as the organisation, rules, training etc.

c. Collection of human error data and investigation of incidents or accidents involving human error.

The handbook does not address:

- Design errors: The handbook intends to support design (and therefore, in this sense, addresses design errors) with regard to the avoidance or mitigation of human errors during operations. However, human errors made during design development are not considered.

- Quantitative (e.g. probabilistic) analysis of human behaviour and performance: The handbook does not address probabilistic assessment of human errors as input to system-level safety and dependability analysis, or the consideration of probabilistic targets, and

- Intentional malicious acts and security related issues: Dependability and safety deal with "threats to safety and mission success" in terms of failures and non-malicious human errors and, for the sake of completeness, include "threats to safety and mission success" in terms of malicious actions, which are addressed through security risk analysis. However, by definition, "human dependability" as presented in this handbook excludes the consideration of "malicious actions" and security related issues, i.e. it considers only "non-malicious actions" of humans.

The handbook does not directly provide information on some disciplines or subjects that interface with "human dependability" only indirectly, i.e. at the level of performance shaping factors (PSFs, see section 5). Therefore, the handbook does not provide direct support to "goals" such as:

- optimize the information flow in the control room during simulations and critical operations,

- manage cultural differences in a team,

- cope with negative group dynamics,

- present best practices and guidelines about team training needs and training methods,

- provide guidelines and best practices concerning the planning of shifts,

- present basic theory about team motivation, and

- manage conflicts of interest on a project.

1.2 Objectives

The objectives of the handbook are to support:

- Familiarization with human dependability (see section 5 "principles of human dependability"). For details and further reading, see the "references" listed at the end of each section of the handbook.

- Application of human dependability (see sections 6 "human dependability processes" and 7 "implementation of human dependability in system life cycle").

Raumfahrtproduktsicherung - Handbuch zur menschlichen Zuverlässigkeit

Assurance produit des projets spatiaux - Guide sur le facteur humain

Zagotavljanje kakovosti proizvodov v vesoljski tehniki - Priročnik o človekovi zanesljivosti


General Information

- Status: Published

- Public Enquiry End Date: 20-Oct-2021

- Publication Date: 19-Dec-2021

- Technical Committee: I13 - Imaginarni 13

- Current Stage: 6060 - National Implementation/Publication (Adopted Project)

- Start Date: 08-Dec-2021

- Due Date: 12-Feb-2022

- Completion Date: 20-Dec-2021

Overview

SIST-TP CEN/TR 17602-30-03:2022 - Space product assurance: Human dependability handbook is a non‑normative technical report that defines principles and processes for integrating human dependability into space system safety and dependability. Focused on human behaviour and performance in operational situations (e.g., control centre handovers, routine mission operations, satellite maintenance and emergency handling), the handbook explains how to systematically consider the human element throughout the system life cycle to prevent, mitigate and investigate non‑malicious human errors.

Key topics and technical content

- Principles of human dependability: conceptual framing of human roles, error types and the relationship between human performance and system safety.

- Human error taxonomy: error precursors and error mitigators (described in terms of performance shaping factors, PSFs) and basic error types, used to inform design and operations.

- Human error analysis: objectives, principles and iterative processes for analysing human contribution to failure scenarios (informative examples and data models included).

- Reporting and investigation: structured processes for human error reporting, root‑cause investigation and data collection to support lessons learned.

- Life‑cycle implementation: recommended human dependability activities mapped to project phases (Phase A Feasibility → B Preliminary Definition → C Detailed Definition → D Qualification and Production → E Operations/Utilization and F Disposal).

- Supporting materials: annexes with example data, analysis forms and question sets to guide practical assessments.

- Interfaces with related disciplines such as human factors engineering and human‑system integration (references to ECSS and NASA guidance).

Note: the handbook excludes probabilistic quantification of human errors, development‑phase design errors (human error during design work), and intentional malicious acts or security issues.

Practical applications

- Embed human dependability into system design reviews, verification and validation to reduce operational human error risk.

- Structure mission operations procedures, training preparation and control‑room practices with human error mitigations in mind.

- Provide a framework for incident investigation teams to collect human error data and perform root‑cause analysis.

- Inform product assurance and safety activities that complement FMECA and hazard analysis by addressing human contributions to failures.
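As a rough illustration of how collected human error data can support lessons learned, the sketch below counts which error precursors recur across reports. The `HumanErrorReport` record, its fields and the `recurring_precursors` helper are hypothetical and are not defined by the handbook. A real reporting scheme would follow the process in section 6.3 of the handbook.

```python
from __future__ import annotations

from collections import Counter
from dataclasses import dataclass, field


@dataclass
class HumanErrorReport:
    """Hypothetical minimal record of one reported human error (illustrative fields only)."""

    report_id: str
    operation: str  # operational situation, e.g. "routine mission operation"
    error_precursors: list[str] = field(default_factory=list)  # conditions judged to degrade performance
    root_cause: str = ""  # completed after the investigation


def recurring_precursors(
    reports: list[HumanErrorReport], min_count: int = 2
) -> list[tuple[str, int]]:
    """Return precursors cited in at least `min_count` reports, most frequent first.

    A precursor is counted at most once per report; ties are sorted alphabetically.
    """
    counts = Counter(p for r in reports for p in set(r.error_precursors))
    ranked = sorted(counts.items(), key=lambda item: (-item[1], item[0]))
    return [(p, n) for p, n in ranked if n >= min_count]


reports = [
    HumanErrorReport("HE-001", "routine mission operation", ["fatigue", "ambiguous procedure"]),
    HumanErrorReport("HE-002", "handover to routine mission operation", ["ambiguous procedure"]),
    HumanErrorReport("HE-003", "emergency operation", ["time pressure", "fatigue"]),
]
print(recurring_precursors(reports))  # → [('ambiguous procedure', 2), ('fatigue', 2)]
```

Each precursor is counted only once per report, so that a single incident does not carry extra weight. The ranking is a qualitative aid for investigation. It is not a probabilistic estimate, which the handbook explicitly leaves out of scope.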

Who should use this standard

- Space system engineers, safety and dependability engineers

- Human factors and human‑systems integration specialists

- Mission operations planners, control‑centre managers and AIT/launch teams

- Product assurance, quality and incident investigation teams

Related standards and keywords

Related standards: ECSS‑Q‑ST‑30 (Dependability), ECSS‑Q‑ST‑40 (Safety), ECSS‑E‑ST‑10‑11C (Human factors engineering).

Keywords: human dependability, space product assurance, human error analysis, space system operations, performance shaping factors (PSFs), mission safety and dependability.

Frequently Asked Questions

SIST-TP CEN/TR 17602-30-03:2022 is a technical report published by the Slovenian Institute for Standardization (SIST). Its full title is "Space product assurance - Human dependability handbook".


SIST-TP CEN/TR 17602-30-03:2022 is classified under the following ICS (International Classification for Standards) categories: 03.120.99 - Other standards related to quality; 49.140 - Space systems and operations. The ICS classification helps identify the subject area and facilitates finding related standards.

SIST-TP CEN/TR 17602-30-03:2022 is associated with the following European legislation: Standardization Mandates: M/496. When a standard is cited in the Official Journal of the European Union, products manufactured in conformity with it benefit from a presumption of conformity with the essential requirements of the corresponding EU directive or regulation.


Standards Content (Sample)

SLOVENIAN STANDARD

01-February-2022

Zagotavljanje kakovosti proizvodov v vesoljski tehniki - Priročnik o človekovi zanesljivosti

Space product assurance - Human dependability handbook

Raumfahrtproduktsicherung - Handbuch zur menschlichen Zuverlässigkeit

Assurance produit des projets spatiaux - Guide sur le facteur humain

This Slovenian standard is identical to: CEN/TR 17602-30-03:2021

ICS:

03.120.99 Other standards related to quality

49.140 Space systems and operations

2003-01. Slovenian Institute for Standardization. Reproduction of this standard, in whole or in part, is not permitted.

TECHNICAL REPORT CEN/TR 17602-30-03

RAPPORT TECHNIQUE

TECHNISCHER BERICHT

December 2021

ICS 49.140

English version

Space product assurance - Human dependability handbook

Assurance produit des projets spatiaux - Guide sur le facteur humain

Raumfahrtproduktsicherung - Handbuch zur menschlichen Zuverlässigkeit

This Technical Report was approved by CEN on 22 November 2021. It has been drawn up by the Technical Committee CEN/CLC/JTC 5.

CEN and CENELEC members are the national standards bodies and national electrotechnical committees of Austria, Belgium, Bulgaria, Croatia, Cyprus, Czech Republic, Denmark, Estonia, Finland, France, Germany, Greece, Hungary, Iceland, Ireland, Italy, Latvia, Lithuania, Luxembourg, Malta, Netherlands, Norway, Poland, Portugal, Republic of North Macedonia, Romania, Serbia, Slovakia, Slovenia, Spain, Sweden, Switzerland, Turkey and United Kingdom.

CEN-CENELEC Management Centre:

Rue de la Science 23, B-1040 Brussels

© 2021 CEN/CENELEC All rights of exploitation in any form and by any means reserved worldwide for CEN national Members and for CENELEC Members.

Ref. No. CEN/TR 17602-30-03:2021 E

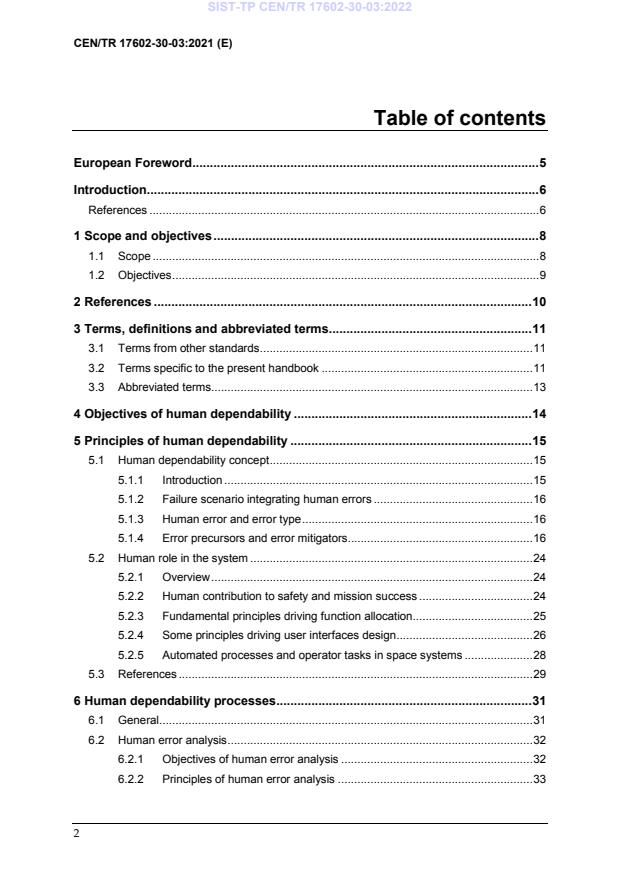

Table of contents

European Foreword

Introduction

References

1 Scope and objectives

1.1 Scope

1.2 Objectives

2 References

3 Terms, definitions and abbreviated terms

3.1 Terms from other standards

3.2 Terms specific to the present handbook

3.3 Abbreviated terms

4 Objectives of human dependability

5 Principles of human dependability

5.1 Human dependability concept

5.1.1 Introduction

5.1.2 Failure scenario integrating human errors

5.1.3 Human error and error type

5.1.4 Error precursors and error mitigators

5.2 Human role in the system

5.2.1 Overview

5.2.2 Human contribution to safety and mission success

5.2.3 Fundamental principles driving function allocation

5.2.4 Some principles driving user interfaces design

5.2.5 Automated processes and operator tasks in space systems

5.3 References

6 Human dependability processes

6.1 General

6.2 Human error analysis

6.2.1 Objectives of human error analysis

6.2.2 Principles of human error analysis

6.2.3 Human error analysis process

6.3 Human error reporting and investigation

6.3.1 Objectives of human error reporting and investigation

6.3.2 Principles of human error reporting and investigation

6.3.3 Human error reporting and investigation process

6.4 References

7 Implementation of human dependability in system life cycle

7.1 General

7.2 Human dependability activities in project phases

7.2.1 Overview

7.2.2 Phase A: Feasibility

7.2.3 Phase B: Preliminary Definition

7.2.4 Phase C: Detailed Definition

7.2.5 Phase D: Qualification and Production

7.2.6 Phases: E Operations/Utilization and F Disposal

7.3 References

Annex A (informative) Human error analysis data - examples

A.1 Overview

A.2 Examples of the Evolution of PSFs

A.3 Examples of Human Error Scenario Data

A.4 References

Annex B (informative) Human error analysis documentation

Annex C (informative) Human error analysis example questions

C.1 Examples of questions to support a risk analysis on anomalies and human error during operations

C.2 References

Annex D (informative) Human dependability in various domains

D.1 Human dependability in industrial sectors

D.2 References

Bibliography

Figures

Figure 5-1: Examples of human error in failure scenarios

Figure 5-2: Error precursors, error mitigators and human error in failure scenarios

Figure 5-3: HFACS model

Figure 5-4: Levels of human performance

Figure 5-5: Basic error types

Figure 5-6: MABA-MABA principle

Figure 5-7: Small portion of Chernobyl nuclear power plant control room (from http://www.upandatom.net/Chernobyl.htm)

Figure 5-8: Example of a computer-based, concentrated control room (Large Hadron Collider at CERN)

Figure 5-9: Example of a computer-based, concentrated user interface – the glass cockpit (transition to glass cockpit for the Boeing 747)

Figure 6-1: Human error reduction examples

Figure 6-2: Human error analysis and reduction process

Figure 6-3: Human error analysis iteration

Figure 6-4: Human error reporting and investigation process

Figure 7-1: Human dependability in system life cycle

Tables

Table A-1: SPAR-H PSF modelling considerations for MIDAS [57]

Table B-1: Example of a “Human Error Analysis Form sheet”

Table D-1: Examples of Comparable External Domains

European Foreword

This document (CEN/TR 17602-30-03:2021) has been prepared by Technical Committee CEN/CLC/JTC 5 “Space”, the secretariat of which is held by DIN.

It is highlighted that this technical report does not contain any requirements but only a collection of data or descriptions and guidelines about how to organize and perform the work in support of EN 16602-30.

This Technical report (CEN/TR 17602-30-03:2021) originates from ECSS-Q-HB-30-03A.

Attention is drawn to the possibility that some of the elements of this document may be the subject of patent rights. CEN [and/or CENELEC] shall not be held responsible for identifying any or all such patent rights.

This document has been prepared under a mandate given to CEN by the European Commission and the European Free Trade Association.

This document has been developed to cover specifically space systems and therefore takes precedence over any TR covering the same scope but with a wider domain of applicability (e.g. aerospace).

Introduction

Space systems always have “humans in the loop”, such as spacecraft operators in a control centre, test or maintenance staff on the ground, or astronauts on board.

Human dependability complements disciplines that concern the interaction of the human element with or within a complex sociotechnical system and its constituents and processes, such as human factors engineering (see ECSS-E-ST-10-11C “Human factors engineering” [1]), human systems integration [2], human performance capabilities, human-machine interaction and human-computer interaction in the space domain [3],[4].

Human dependability captures the emerging consensus and nascent effort in the space sector to systematically include considerations of “human behaviour and performance” in the design, validation and operations of both crewed and uncrewed systems, in order to benefit from human capabilities and to prevent human errors. Human behaviour and performance can be influenced by various factors (e.g. performance shaping factors) that act either as error precursors, resulting in human errors, or as error mitigators, limiting the occurrence or impact of human errors. Human errors can originate from inadequate system design, i.e. design that ignores or does not properly account for human factors engineering and system operation. Human errors can contribute to, or be part of, failure or accident scenarios leading to undesirable consequences for a space mission, such as loss of mission or, in the worst case, loss of life.
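The distinction between error precursors and error mitigators (defined in section 3.2) can be made concrete with a small qualitative model. In this model, each condition assessed for an operator task is tagged with the performance shaping factor it belongs to and with its direction of influence. The class and field names, the screening rule in `unmitigated`, and the example conditions are all assumptions for illustration. In keeping with the handbook's scope, the sketch does not compute any error probability.

```python
from __future__ import annotations

from dataclasses import dataclass
from enum import Enum


class Influence(Enum):
    PRECURSOR = "error precursor"  # influences human performance negatively
    MITIGATOR = "error mitigator"  # influences human performance positively


@dataclass(frozen=True)
class Condition:
    """One assessed condition for an operator task (hypothetical structure)."""

    psf: str  # performance shaping factor, e.g. "time available", "procedures"
    description: str  # observed condition
    influence: Influence


def summarize(conditions: list[Condition]) -> dict[str, list[str]]:
    """Group conditions by direction of influence (qualitative, no probabilities)."""
    summary: dict[str, list[str]] = {i.value: [] for i in Influence}
    for c in conditions:
        summary[c.influence.value].append(f"{c.psf}: {c.description}")
    return summary


def unmitigated(conditions: list[Condition]) -> bool:
    """Flag a task that has precursors but no mitigators (an assumed screening rule)."""
    kinds = {c.influence for c in conditions}
    return Influence.PRECURSOR in kinds and Influence.MITIGATOR not in kinds


task = [
    Condition("time available", "short ground-station pass", Influence.PRECURSOR),
    Condition("procedures", "validated step-by-step procedure", Influence.MITIGATOR),
    Condition("fatigue", "end of night shift", Influence.PRECURSOR),
]
print(summarize(task))
print(unmitigated(task))  # → False
```

The direction of influence is recorded for each condition rather than for each factor. This is because the same PSF (for example "procedures") can act as a precursor in one task and as a mitigator in another.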

In the space domain, human dependability as a discipline first surfaced during contractor study and policy work in the early 1990s in the product assurance, system safety and knowledge management domains [5],[6]. It concerned principles and practices to improve the safety and dependability of space systems by focusing on human error, related design recommendations and root cause analysis [7],[8].

The standards ECSS-Q-ST-30C “Dependability” [9] and ECSS-Q-ST-40C “Safety” [10] define principles and requirements to assess and reduce safety and dependability risks. They address aspects of human dependability such as human error failure tolerance and human error analysis, which complement FMECA and hazard analysis. The objective of human error analysis is to identify, assess and reduce human errors involved in failure scenarios and their consequences. Human error analysis can be implemented through an iterative process, with iterations determined by the project’s progress through the different project phases. Human error analysis is not to be seen as the conclusion of an investigation, but rather as a starting point to ensure safety and mission operations success.
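One analysis iteration can be pictured as a review of the current set of failure scenarios. The sketch below assumes that each scenario lists the events (equipment failures or human errors) that together lead to a consequence. It applies two illustrative checks: every human error has at least one reduction measure, and no catastrophic consequence results from a single human error alone. The second check is an assumed reading of "human error failure tolerance" and does not reproduce the wording of ECSS-Q-ST-40. All names and severity labels are hypothetical. Re-running `review` at each project phase, as scenarios and measures are refined, mirrors the iterative process described above.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from enum import Enum


class EventKind(Enum):
    EQUIPMENT_FAILURE = "equipment failure"
    HUMAN_ERROR = "human error"


@dataclass
class Event:
    description: str
    kind: EventKind
    reduction_measures: list[str] = field(default_factory=list)  # design or operational measures


@dataclass
class FailureScenario:
    """Events that together lead to a consequence (hypothetical structure)."""

    consequence: str
    severity: str  # illustrative labels, e.g. "catastrophic", "critical", "major"
    events: list[Event]


def review(scenarios: list[FailureScenario]) -> list[str]:
    """Return findings for one human error analysis iteration."""
    findings: list[str] = []
    for s in scenarios:
        errors = [e for e in s.events if e.kind is EventKind.HUMAN_ERROR]
        for e in errors:
            if not e.reduction_measures:
                findings.append(f"No reduction measure: {e.description}")
        # Assumed tolerance check: a lone human error must not cause a catastrophic consequence.
        if s.severity == "catastrophic" and len(s.events) == 1 and errors:
            findings.append(f"Single human error leads to: {s.consequence}")
    return findings


scenarios = [
    FailureScenario(
        "loss of attitude control",
        "catastrophic",
        [Event("wrong telecommand sent during emergency operation", EventKind.HUMAN_ERROR)],
    ),
    FailureScenario(
        "loss of science data",
        "major",
        [
            Event("on-board memory failure", EventKind.EQUIPMENT_FAILURE),
            Event("data dump omitted after handover", EventKind.HUMAN_ERROR, ["handover checklist"]),
        ],
    ),
]
for finding in review(scenarios):
    print(finding)
```

A real analysis would represent scenario logic more richly. Even so, this flat event list shows how human errors sit alongside equipment failures in the same failure scenario.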

The main focus of the handbook is on human dependability associated with humans directly involved in the operations of a space system (“humans” being understood here as an individual human operator or astronaut, or as groups of humans, e.g. a crew, a team or an organization, including AIT (assembly, integration and test) and launch preparation). This concerns especially the activities related to the planning and implementation of space system control and mission operations from launch to disposal, and can be extended to cover operations such as AIT and launch preparation.

References

[1] ECSS-E-ST-10-11C - Space engineering - Human factors engineering, 31 July 2008 (Number of EN version: EN 16603-10-11)

[2] Booher, Harold R. (Ed.) (2003) Handbook of Human Systems Integration. New York: Wiley.

[3] NASA (2010) Human Integration Design Handbook NASA/SP-2010-3407 (Baseline). Washington, D.C.: NASA.

[4] NASA (2011) Space Flight Human-System Standard Vol. 2: Human Factors, Habitability, and Environmental Health NASA-STD-3001, Vol. 2. Washington, D.C.: NASA.

[5] Atkins, R. K. (1990) Human Dependability Requirements, Scope and Implementation at the European Space Agency. Proceedings of the Annual Reliability and Maintainability Symposium, IEEE, pp. 85-89.

[6] Meaker, T. A. (1992) Future role of ESA R&M assurance in space flight operation. Proceedings of the Annual Reliability and Maintainability Symposium, IEEE, pp. 241-242.

[7] Alenia Spazio (1994) Human Dependability Tools, Techniques and Guidelines: Human Error Avoidance Design Guidelines and Root Cause Analysis Method (SD-TUN-AI-351, -353, -351). Noordwijk: ESTEC.

[8] Cojazzi, G. (1993) Root Cause Analysis Methodologies: Selection Criteria and Preliminary Evaluation, ISEI/IE/2443/93, JRC Ispra, Italy: Institute for System Engineering and Informatics.

[9] ECSS-Q-ST-30 - Space product assurance - Dependability, 6 March 2009 (Number of EN version: EN 16602-30)

[10] ECSS-Q-ST-40 - Space product assurance - Safety, 6 March 2009 (Number of EN version: EN 16602-40)

1 Scope and objectives

1.1 Scope

The handbook defines the principles and processes of human dependability as an integral part of system safety and dependability. The handbook focuses on human behaviour and performance in different operational situations, for example in a control centre during handover to routine mission operation, routine mission operation, satellite maintenance or emergency operations.

This handbook illustrates the implementation of human dependability in the system life cycle, where in any project phase there is a need to systematically include considerations of the:

- Human element as part of the space system,

- Impact of human behaviour and performance on safety and dependability.

Within this scope, the main application areas of the handbook are to support the:

a. Development and validation of space system design during the different project phases,

b. Development, preparation and implementation of space system operations including their support such as the organisation, rules, training etc.

c. Collection of human error data and investigation of incidents or accidents involving human error.

The handbook does not address:

- Design errors: The handbook intends to support design (and therefore, in this sense, addresses design errors) with regard to the avoidance or mitigation of human errors during operations. However, human errors made during design development are not considered.

- Quantitative (e.g. probabilistic) analysis of human behaviour and performance: The handbook does not address probabilistic assessment of human errors as input to system-level safety and dependability analysis, or the consideration of probabilistic targets, and

- Intentional malicious acts and security related issues: Dependability and safety deal with “threats to safety and mission success” in terms of failures and non-malicious human errors and, for the sake of completeness, include “threats to safety and mission success” in terms of malicious actions, which are addressed through security risk analysis. However, by definition, “human dependability” as presented in this handbook excludes the consideration of “malicious actions” and security related issues, i.e. it considers only “non-malicious actions” of humans.

The handbook does not directly provide information on some disciplines or subjects that interface with “human dependability” only indirectly, i.e. at the level of performance shaping factors (PSFs, see section 5). Therefore, the handbook does not provide direct support to “goals” such as:

- optimize the information flow in the control room during simulations and critical operations,

- manage cultural differences in a team,

- cope with negative group dynamics,

- present best practices and guidelines about team training needs and training methods,

- provide guidelines and best practices concerning the planning of shifts,

- present basic theory about team motivation, and

- manage conflicts of interest on a project.

1.2 Objectives

The objectives of the handbook are to support:

• Familiarization with human dependability (see section 5 “Principles of human dependability”). For details and further reading, see the “references” listed at the end of each section of the handbook.

• Application of human dependability (see sections 6 “Human dependability processes” and 7 “Implementation of human dependability in system life cycle”).

2 References

Due to the structure of the document, each section lists at its end the references cited in it. The Bibliography at the end of this document contains a list of recommended literature.

3 Terms, definitions and abbreviated terms

3.1 Terms from other standards

a. For the purpose of this document, the terms and definitions from ECSS-S-ST-00-01 apply.

b. For the purpose of this document, the terms and definitions from ECSS-Q-ST-40 apply, in particular the following term:

1. operator error

3.2 Terms specific to the present handbook

3.2.1 automation

design and execution of functions by the technical system that can include functions resulting from the delegation of user’s tasks to the system

3.2.2 error mitigator

set of conditions and circumstances that influences in a positive way human performance and the occurrence of a human error

NOTE The conditions and circumstances are best described by the performance shaping factors and levels of human performance.

3.2.3 error precursor

set of conditions and circumstances that influences in a negative way human performance and the occurrence of a human error

3.2.4 human dependability

performance of the human constituent element and its influencing factors on system safety, reliability, availability and maintainability

3.2.5 human error

inappropriate or undesirable observable human behaviour with potential impact on safety, dependability or system performance

NOTE Human behaviour can be decomposed into perception, analysis, decision and action.

3.2.6 human error analysis

systematic and documented process of identification and assessment of human errors, and analysis activities supporting the reduction of human errors

3.2.7 human error reduction

elimination, or minimisation and control of existing or potential conditions for human errors

NOTE The conditions and circumstances are best described by the performance shaping factors and levels of human performance.

3.2.8 human error type

classification of human errors into slips, lapses or mistakes

NOTE The types of human error are described in section 6.2 on the human dependability concept.

3.2.9 level of human performance

categories of human performances resulting from human cognitive, perceptive or motor behaviour in a given situation

NOTE 1 As an example, categories of human performances can be "skill based", "rule based" and "knowledge based".

NOTE 2 The level of human performance results from the combination of the circumstances and current situation (e.g. routine situation, trained situation, novel situation) and the type of control of the human action (e.g. consciously or automatically).

3.2.10 operator-centred design

approach to human-machine system design and development that focuses, beyond the other technical aims, on making systems usable

3.2.11 performance shaping factor

specific error precursor or error mitigator that influences human performance and the likelihood of occurrence of a human error

NOTE 1 Performance shaping factors are either error precursors or error mitigators that appear in a failure scenario and enhance or degrade human performance.

NOTE 2 Different performance shaping factors are listed in section 5 of this document.

3.2.12 resilience

ability to anticipate and adapt to the potential for “surprise and error” in complex sociotechnical systems

NOTE Resilience engineering provides a framework for understanding and addressing the context of failures, i.e. failures seen as a symptom of more in-depth structural problems of a system.

3.2.13 socio-technical system

holistic view of the system including the operators, the organization in which the operators are involved and the technical system operated

NOTE A socio-technical system is the whole structure including administration, politics, economy and cultural ingredients of an organisation or a project.

3.3 Abbreviated terms

For the purpose of this document, the following abbreviated terms apply:

Abbreviation  Meaning
AIT           assembly, integration and testing
FDIR          failure detection, isolation and recovery
FMEA          failure modes and effects analysis
FMECA         failure modes, effects and criticality analysis
HET           human error type
HFACS         Human Factors Analysis and Classification System
HMI           human-machine interface
HUDEP         human dependability
LHP           level of human performance
O&M           organizational and management
PIF           performance influencing factor
PSF           performance shaping factor
RAMS          reliability, availability, maintainability and safety
SRK           skill, rule, knowledge
VACP          visual, auditory, cognitive and psychomotor

4 Objectives of human dependability

Objectives of human dependability during the space system life cycle include:

• Definition of the role and involvement of the human in the system, for example supporting the selection of an automation strategy, or enhancing system resilience through operator intervention to prevent incidents or accidents;

• Definition and verification of human dependability requirements on a project, such as human error tolerance as part of overall failure tolerance;

• Assessment of the safety and dependability risks of a system, from design to operation, with respect to human behaviour and performance, and identification of their positive and negative contributions to safety and mission success;

• Identification of failure scenarios involving human errors through “human error analysis”, as input to safety and dependability analysis and as the basis of “human error reduction”;

• Definition of human error reduction means to drive the definition and implementation of, for example, system design and operation requirements, specifications, operation concepts, operation procedures, and operator training requirements;

• Support of the development of operation procedures for normal, emergency and other conditions with respect to human performance and behaviour;

• Collection and reporting of human error data; and

• Support of the investigation of failure scenarios involving human errors.

5 Principles of human dependability

5.1 Human dependability concept

5.1.1 Introduction

Human dependability is based on the following concept. When a human operates a system, human capabilities (skills and knowledge) are exploited. These capabilities have the potential to mitigate expected and unexpected undesired system behaviour; however, they can also introduce human errors causing or contributing to failure scenarios of the system.

In a first step, for safety and dependability analysis at the functional analysis level, there is no need to distinguish how functions are implemented, i.e. by hardware, software or humans: functional failures (loss or degradation of functions) are identified, and their consequences and consequence severities are determined.

However, in further steps, at lower levels of safety and dependability analysis such as an FMECA of the physical design, whether a function is implemented by hardware or by software is taken into account (see section 6). Similarly, when operations are analysed, the interaction of the human with the rest of the system is investigated.

Technical failures and human errors are inevitable in complex systems. Human performance and the technical system can be seen as functioning as a joint cognitive system [11], with three human relationships and their associated outcome in terms of human performance and overall socio-technical system performance:

• Human - environment (technical system),

• Human - human (operating team), and

• Human - itself (work orientation, motivation).

To prevent or mitigate human errors as far as possible, systems need to be designed taking into account human factors engineering considerations (see ECSS-E-ST-10-11C “Human factors engineering” [12]). Proper consideration of human error in the design and operation of a safe and dependable system is based on the analysis of human performance and acknowledges the fact that humans err.

Human error analysis addresses the systematic identification and assessment of human functions with the aim of reducing and mitigating human errors. Such human error analyses are an integral part of the safety and dependability analysis process. However, human dependability analysis has to consider both the positive and the negative aspects of the human contribution to safety and mission success (as presented in section 5.2). The outcomes of these analyses provide, on the one hand, recommendations for reducing and mitigating human errors and, on the other hand, recommendations to foster and encourage positive contributions.

Including both types of contribution in failure scenarios explicitly, and where possible systematically, is a means of identifying recommendations for human error prevention, removal and tolerance.

NOTE More information about human error analysis data is provided in Annex A.

5.1.2 Failure scenario integrating human errors

Failure scenarios describe failures and human errors in terms of event propagation from causes to consequences, as shown in Figure 5-1. The upper part of Figure 5-1 presents, for example, a human error causing an undesirable event that leads to a consequence. The lower part describes a technical failure followed by a human error, which leads to an undesirable event and a consequence. Consequences are characterized by their severity.

[Figure 5-1, upper chain: Cause (Human Error) -> Undesirable Event -> Consequence; lower chain: Cause (Technical Failure) -> Human Error -> Undesirable Event -> Consequence]

Figure 5-1: Examples of human error in failure scenarios
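When failure scenarios are recorded for analysis, the cause-to-consequence chains of Figure 5-1 can be captured in a small data structure. The following Python sketch is illustrative only: the class names (`EventKind`, `Event`, `FailureScenario`) and all event descriptions are hypothetical, and severity is kept as a placeholder string because the severity categories come from the project's own safety and dependability analysis.

```python
from dataclasses import dataclass
from enum import Enum


class EventKind(Enum):
    """Event kinds that appear in the failure scenarios of Figure 5-1."""
    TECHNICAL_FAILURE = "technical failure"
    HUMAN_ERROR = "human error"
    UNDESIRABLE_EVENT = "undesirable event"


@dataclass(frozen=True)
class Event:
    kind: EventKind
    description: str


@dataclass(frozen=True)
class FailureScenario:
    """Event propagation from a cause (first event) to a consequence."""
    events: tuple[Event, ...]
    consequence: str
    severity: str  # placeholder: use the project's own severity categories

    def __post_init__(self) -> None:
        # In Figure 5-1 every chain ends with an undesirable event before the consequence.
        if not self.events or self.events[-1].kind is not EventKind.UNDESIRABLE_EVENT:
            raise ValueError("a failure scenario must end with an undesirable event")

    @property
    def cause(self) -> Event:
        return self.events[0]

    def involves_human_error(self) -> bool:
        return any(e.kind is EventKind.HUMAN_ERROR for e in self.events)

    def chain(self) -> str:
        return " -> ".join([e.kind.value for e in self.events] + ["consequence"])


# Upper part of Figure 5-1 (descriptions are hypothetical).
upper = FailureScenario(
    events=(
        Event(EventKind.HUMAN_ERROR, "wrong telecommand sent"),
        Event(EventKind.UNDESIRABLE_EVENT, "unintended mode change"),
    ),
    consequence="loss of science data",
    severity="placeholder",
)

# Lower part of Figure 5-1: a technical failure followed by a human error.
lower = FailureScenario(
    events=(
        Event(EventKind.TECHNICAL_FAILURE, "sensor reading frozen"),
        Event(EventKind.HUMAN_ERROR, "recovery step skipped"),
        Event(EventKind.UNDESIRABLE_EVENT, "equipment left unpowered"),
    ),
    consequence="degraded mission",
    severity="placeholder",
)

print(upper.chain())  # human error -> undesirable event -> consequence
print(lower.chain())  # technical failure -> human error -> undesirable event -> consequence
```

Keeping the event kinds explicit makes it straightforward to select the scenarios that involve human errors, which is where human error analysis starts.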

Space systems with a human operator in the loop need to be designed bearing in mind both the strengths of the human (such as the mental capability to resolve problems in case of failures in the system) and the weaknesses of the human (in particular regarding human error and reaction to technical failures in the system).

Human factors engineering (see ECSS-E-ST-10-11C “Human factors engineering” [12]) deals with the specifics of human performance and how it can be improved. Ergonomics deals with the physical and physiological aspects of system design and operations, while human-computer interaction addresses presentation and interaction aspects when computers are included in the technical system.

5.1.3 Human error and error type

A human error is characterised by a type. For example, the human error event “pressing the cancel button inadvertently” can be categorised as the error type “slip”. Such categorization is meant to help the analyst identify means of preventing the occurrence of such errors or mitigating their impact, depending on the objectives and levels of the analysis.

5.1.4 Error precursors and error mitigators

5.1.4.1 Overview

Human performance in complex systems is influenced by:

• Performance Shaping Factors (PSF), and

• Levels of Human Performance (LHP).

The occurrence of human errors and their propagation in failure scenarios are influenced by error precursors and error mitigators, as shown in Figure 5-2. Precursors and mitigators of a human error are the set of conditions and circumstances under which a human behaves and which influence human performance. They are best described in terms of PSFs and LHPs.

PSFs and LHPs interact with each other. For example, the PSF “stress” might not be relevant under “nominal operational conditions” (corresponding to operations at the LHP “skill”). The stress factor can become more significant when “nominal operational conditions” change to “abnormal operation with limited time availability” (corresponding to operations at the LHP “rule”), and might become even more important when no rules (e.g. a contingency procedure) are defined for the specific abnormal situation (corresponding to operations at the LHP “knowledge”, as the operator has to employ his or her knowledge of the domain to define an operational rule to handle the abnormal situation).

[Figure 5-2, upper chain: Human Performance (under precursor and mitigator influence) -> Cause (Human Error) -> Undesirable Event -> Consequence; lower chain: Cause (Technical Failure) -> Human Error, with Human Performance under precursor and mitigator influence -> Undesirable Event -> Consequence]

Figure 5-2: Error precursors, error mitigators and human error in failure scenarios
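When failure scenarios are documented, the precursors and mitigators of Figure 5-2 can be attached to each human error, so that the analysis yields both kinds of recommendation described in section 5.1.1: reducing precursors and fostering mitigators. The Python sketch below is a hypothetical, purely qualitative record (no probabilities, in line with the scope of this handbook); the names `Influence`, `ShapingCondition` and `HumanErrorRecord`, and the example error, are assumptions for illustration.

```python
from dataclasses import dataclass, field
from enum import Enum


class Influence(Enum):
    PRECURSOR = "precursor"  # hinders performance, favours the error
    MITIGATOR = "mitigator"  # helps performance, counters the error


@dataclass(frozen=True)
class ShapingCondition:
    psf: str  # performance shaping factor, named as in section 5.1.4.2
    influence: Influence


@dataclass
class HumanErrorRecord:
    description: str
    lhp: str  # level of human performance: "skill", "rule" or "knowledge"
    conditions: list[ShapingCondition] = field(default_factory=list)

    def __post_init__(self) -> None:
        if self.lhp not in {"skill", "rule", "knowledge"}:
            raise ValueError(f"unknown level of human performance: {self.lhp}")

    def recommendation_targets(self) -> dict[str, list[str]]:
        # Qualitative output only: precursors to reduce, mitigators to foster.
        return {
            "reduce": [c.psf for c in self.conditions if c.influence is Influence.PRECURSOR],
            "foster": [c.psf for c in self.conditions if c.influence is Influence.MITIGATOR],
        }


# Hypothetical human error in the lower chain of Figure 5-2.
error = HumanErrorRecord(
    description="contingency step executed out of order",
    lhp="rule",
    conditions=[
        ShapingCondition("Time available and time pressure", Influence.PRECURSOR),
        ShapingCondition("Procedures inadequate or inappropriate", Influence.PRECURSOR),
        ShapingCondition("Training and experience", Influence.MITIGATOR),
    ],
)
print(error.recommendation_targets())
```

Recording the LHP alongside the conditions keeps the interaction between PSFs and LHPs visible, for instance when the same PSF matters more at the rule or knowledge level than at the skill level.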

5.1.4.2 Performance shaping factors

Performance shaping factors (PSFs), sometimes referred to as performance influencing factors (PIFs), can affect human performance in a positive (“help performance”) or negative (“hinder performance”) manner. It is important to note that some PSFs can be considered meta-PSFs, as they influence other PSFs. This is the case, for instance, for the PSF “levels of automation”, which influences vigilance, trust and complacency, among others, as detailed below. PSFs can be broadly grouped into two types [13]:

• External PSFs, which are external to the operators and are divided into two groups: organizational and management (O&M) factors, and job factors;

• Internal PSFs, which are part of the operators’ internal characteristics, also called personal factors.

Another classification of PSFs, proposed in [13], divides them into “direct” and “indirect” PSFs. Identifying PSFs according to this classification is important when predicting human error.

• Direct PSFs: those PSFs, such as time to complete the task, that can be measured directly, whereby there is a one-to-one relationship between the magnitude of the PSF and that which is measured.

• Indirect PSFs: those PSFs, such as fitness for duty, that cannot be measured directly, whereby the magnitude of the PSF can only be determined multivariately or subjectively, or through other measures or PSFs.

Typical external O&M PSFs are as follows:

a. Work or customer pressures (e.g. production vs. safety)

b. Workload (e.g. allocation of work and tasks to personnel)

c. Level and nature of supervision and leadership

d. Organization complexity, multi-cultural issues with other partners

e. Communication with colleagues, supervision, contractors and others

f. Turn-over and training management

g. Manning levels

h. Clarity of roles and responsibilities

i. Working environment (e.g. noise, heat, space, lighting, ventilation, hygiene care, catering)

j. Violations of procedures and rules (e.g. widespread violations)

k. Team or crew dynamics

l. Available staffing and resources

m. Effectiveness of organisational learning (learning from experiences)

n. Safety culture (e.g. everyone breaks the rules)

Typical external job PSFs are as follows:

a. Clarity of signs, signals, instructions and other information

b. System and equipment interface (labelling, alarms, error avoidance, error tolerance)

c. Difficulty and complexity of task

d. Routine or unusual

e. Divided attention

f. Procedures inadequate or inappropriate

g. Preparation for task (e.g. permits, risk assessments, checking)

h. Time available and time pressure

i. Training and experience

j. Tools appropriate for task

k. Usability of the operator interfaces

l. Levels of automation (meta-PSF impacting many others, such as overconfidence, complacency and vigilance)

Typical internal personal PSFs are as follows:

a. Physical capability and condition

b. Fatigue (acute from temporary situation, or chronic)

c. Vigilance

d. Complacency and over-attention

e. Trust or overconfidence and mistrust

f. Stress and morale

g. Peer pressure

h. Individual behaviour and character (e.g. anti-authority, impulsiveness, invulnerability, machismo, shyness, hoarding (not sharing) knowledge)

i. Work overload or under-load (which can also be caused by inadequate personal organization)

j. Competence to deal with circumstances (inept or too skilled)

k. Motivation vs. other priorities

l. Deviation from procedures and rules
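The grouping above (external O&M and job factors, internal personal factors) and the notion of a meta-PSF can be kept in a simple catalogue, for instance to check that an analysis has screened every group. The Python sketch below is an illustration under stated assumptions: only a few PSFs from the lists are included, the identifiers (`PsfGroup`, `Psf`, `CATALOGUE`, `affected_by`, `missing_links`) are hypothetical, and the meta-PSF links follow the text's example of “levels of automation” influencing vigilance, trust and complacency.

```python
from dataclasses import dataclass
from enum import Enum


class PsfGroup(Enum):
    ORGANIZATIONAL_AND_MANAGEMENT = "O&M"
    JOB = "job"
    PERSONAL = "personal"

    @property
    def is_external(self) -> bool:
        # O&M and job factors are external to the operator; personal factors are internal.
        return self is not PsfGroup.PERSONAL


@dataclass(frozen=True)
class Psf:
    name: str
    group: PsfGroup
    influences: tuple[str, ...] = ()  # non-empty only for meta-PSFs

    @property
    def is_meta(self) -> bool:
        return bool(self.influences)


# Partial catalogue; names follow the lists above.
CATALOGUE = [
    Psf("Workload", PsfGroup.ORGANIZATIONAL_AND_MANAGEMENT),
    Psf("Safety culture", PsfGroup.ORGANIZATIONAL_AND_MANAGEMENT),
    Psf("Time available and time pressure", PsfGroup.JOB),
    Psf("Levels of automation", PsfGroup.JOB,
        influences=("Vigilance", "Trust or overconfidence and mistrust",
                    "Complacency and over-attention")),
    Psf("Vigilance", PsfGroup.PERSONAL),
    Psf("Trust or overconfidence and mistrust", PsfGroup.PERSONAL),
    Psf("Complacency and over-attention", PsfGroup.PERSONAL),
    Psf("Fatigue", PsfGroup.PERSONAL),
]


def affected_by(meta_name: str) -> list[str]:
    """Return the PSFs that a meta-PSF is recorded as influencing."""
    by_name = {p.name: p for p in CATALOGUE}
    return list(by_name[meta_name].influences)


def missing_links() -> list[str]:
    """Influenced PSFs that are not themselves in the catalogue (expected empty)."""
    names = {p.name for p in CATALOGUE}
    return [n for p in CATALOGUE for n in p.influences if n not in names]


external = [p.name for p in CATALOGUE if p.group.is_external]
print(external)
print(affected_by("Levels of automation"))
```

Storing meta-PSF links as names rather than nested objects keeps the catalogue flat; `missing_links` is a simple consistency check on those references.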

Organizational and management (O&M) factors are important PSFs that are increasingly highlighted in human error prevention and reduction [14]. Carefully considering these factors contributes to safety and mission success, and highlighting them is a way to involve organizations and all levels of management in the process of human error reduction. Management involvement in human error prevention and reduction is crucial, because decision makers are, most of the time, in the best position to argue for and take remedial actions, taking into account the trade-off between finance and risk.

Examples of symptoms of organizational issues that show in personnel (e.g. operator) and management actions are:

a. Lack of personnel (e.g. operator) commitment: widespread violations of routine procedures and rules.

b. Lack of management commitment: management decisions that consistently put, e.g., “production” or “cost factors” before safety, or a tendency to focus on the short term and to be highly reactive. Postponing training due to scarcity of crew resources is also a latent factor for human error.

As illustrated in Figure 5-3, which shows the Human Factors Analysis and Classification System (HFACS), “organizational factors” can influence “management issues” (also referred to as “supervision”), which in turn can influence “job and personal factors” (for further details see [15] or [16]).

Figure 5-3: HFACS model

Decisions of upper-level management can directly affect the practices of lower-level managers or supervisors, as well as the conditions and actions of the rest of the personnel. Consequently, organizational influences can result in a system failure, a human error or an unsafe situation. Three categories of possible negative organizational influences can be considered:

a. Resource and acquisition management: this category refers to the management, allocation and maintenance of organizational resources (human or monetary) and equipment/facilities.

b. Organizational climate: this category refers to a broad class of organizational variables that influence worker performance and, in general, to the usual environment within the organization. This category is related to the safety culture and to the definition of policies and rules.

c. Organizational process: this category refers to the formal process by which things are done in the organization and includes the definition of operations and procedures and the control of activities.

In addition, four major categories of negative management and supervisory influences can be considered, as shown in Figure 5-3:

a. Inadequate management and supervision: this category refers to those times when management and supervision are inappropriate or improper, or do not occur at all.

b. Planned inappropriate operations: this category refers to inappropriate planning of the operational schedule or selection of operators.

c. Failure to correct a known problem: this category refers to deficiencies affecting personnel, equipment, training or procedures that are “known” by the management but are nevertheless allowed to continue uncorrected.

d. Management and supervisory violations: this category refers to situations when managers disregard existing rules and regulations.

Understanding these influences is useful for defining recommendations and mitigation means for “O&M factors” (such as those listed in section 5.1.4.2) that could lead to human errors.
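For incident investigation, the HFACS levels and categories described above can be encoded so that a finding at one level is traced upwards to the latent conditions worth examining. The Python sketch below uses the category names of this section and the three-level view of Figure 5-3; the `LEVEL_ORDER` and `CATEGORIES` structures and the `level_of` and `upstream_categories` helpers are hypothetical choices for illustration, not part of HFACS itself.

```python
# HFACS levels as described in this section, from most latent (top) to most active (bottom).
LEVEL_ORDER = [
    "organizational influences",
    "management and supervision",
    "job and personal factors",
]

# Negative influence categories per level, as listed in this section.
CATEGORIES = {
    "organizational influences": [
        "resource and acquisition management",
        "organizational climate",
        "organizational process",
    ],
    "management and supervision": [
        "inadequate management and supervision",
        "planned inappropriate operations",
        "failure to correct a known problem",
        "management and supervisory violations",
    ],
    # Bottom level: described in this handbook through the job and personal PSFs.
    "job and personal factors": [],
}


def level_of(category: str) -> str:
    """Return the HFACS level to which a category belongs."""
    for level, names in CATEGORIES.items():
        if category in names:
            return level
    raise KeyError(f"unknown HFACS category: {category}")


def upstream_categories(level: str) -> list[str]:
    """Categories at all levels above `level`, i.e. candidate latent conditions."""
    idx = LEVEL_ORDER.index(level)
    return [c for upper in LEVEL_ORDER[:idx] for c in CATEGORIES[upper]]


# A finding at supervision level points to the organizational influences to examine.
finding = "failure to correct a known problem"
print(level_of(finding))
print(upstream_categories(level_of(finding)))
```

Tracing upwards rather than stopping at the operator reflects the latent/active distinction: the categories returned are the conditions that may have set the stage for the finding.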

Performance shaping factors can change during a failure scenario and thereby influence how the scenario develops. Three types of PSF modification are considered:

a. Static Condition: PSFs remain constant across the events in a scenario,

b. Dynamic Progression: PSFs evolve across events in a scenario,

c. Dynamic Initiator: a sudden change in a scenario causes changes in the PSFs.

See Annex A for some examples of types of PSF modifications.

The HFACS model highlights Reason’s principle of latent and active failures [17]: O&M PSFs usually do not play an active role in a human error, but they provide the underlying foundations that reduce or increase the occurrence of human errors.
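The three types of PSF modification (static condition, dynamic progression, dynamic initiator) can be told apart by looking at how the rating of a PSF changes from event to event in a scenario. The following Python sketch is a hypothetical heuristic, not a method defined in this handbook: it assumes each PSF is rated on an ordinal scale (0 = none to 3 = strong) at every event, and treats a change of two or more steps between consecutive events as sudden. The scale, the threshold and the function name `classify_modification` are illustrative assumptions.

```python
from enum import Enum


class PsfModification(Enum):
    STATIC_CONDITION = "static condition"
    DYNAMIC_PROGRESSION = "dynamic progression"
    DYNAMIC_INITIATOR = "dynamic initiator"


def classify_modification(ratings: list[int], jump: int = 2) -> PsfModification:
    """Classify how one PSF evolves across the events of a failure scenario.

    ratings: hypothetical ordinal rating of the PSF at each event (0 = none .. 3 = strong).
    jump: assumed minimum change between consecutive events that counts as sudden.
    """
    if len(ratings) < 2:
        raise ValueError("at least two events are needed")
    steps = [b - a for a, b in zip(ratings, ratings[1:])]
    if all(s == 0 for s in steps):
        return PsfModification.STATIC_CONDITION   # PSF constant across events
    if any(abs(s) >= jump for s in steps):
        return PsfModification.DYNAMIC_INITIATOR  # sudden change in the scenario
    return PsfModification.DYNAMIC_PROGRESSION    # gradual evolution


# Hypothetical ratings of the PSF "stress" over four events of a scenario.
print(classify_modification([1, 1, 1, 1]).value)  # static condition
print(classify_modification([0, 1, 2, 3]).value)  # dynamic progression
print(classify_modification([0, 0, 3, 3]).value)  # dynamic initiator
```

The ordinal scale is only a bookkeeping aid for describing the scenario; it is not a probabilistic quantity.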

5.1.4.3 Level of human performance

5.1.4.3.1 Overview

To simplify complex human cognitive behaviour, three levels of human performance are described, as proposed by Jens Rasmussen in [18]:

• Skill Based Performance (S): requires little or no cognitive effort and is acquired by training or repetition of actions (e.g. changing gears while driving a car).

• Rule Based Performance (R): is driven by procedures or rules that are already present in the operator’s head (e.g. taking the traffic regulations into account).

• Knowledge Based Performance (K): requires problem-solving and decision-making that produce new rules, which are then executed as described for rule based performance (e.g. identifying a new route to avoid a traffic jam).

These levels of human performance (LHPs) can be associated with specific “situations” and “control modes”, as shown in Figure 5-4 (excerpt from [19]).

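[Figure 5-4: matrix of situations (rows: routine, trained-for problems, novel problems) against control modes (columns: conscious, mixed, automatic). Skill based performance lies at routine situations under automatic control, rule based performance at trained-for problems under mixed control, and knowledge based performance at novel problems under conscious control.]

Figure 5-4: Levels of human performance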

The modes to control a situation can be further characterized as follows:

a. Conscious mode:

1. Laborious

2. Slow and sequential

3. Error-prone

4. Potentially very smart

b. Automatic mode:

1. Unconscious

2. Fast, parallel operation

3. Effortless

4. Highly specialized for routine events

The control mode “mixed” can also be referred to as “subconscious”. Operators can switch from one mode to another during the same task.

The spectrum of event situations covers:

a. Situations involving routine tasks,

b. Problem situations for which the operator is trained,

c. Situations with problems new to the operator.
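The correspondence of Figure 5-4 between situations, control modes and levels of human performance can be written as a lookup table, which is convenient when each operator task in an analysis is tagged with its expected situation. The Python sketch below encodes the diagonal of Figure 5-4 (routine/automatic to skill based, trained-for problems/mixed to rule based, novel problems/conscious to knowledge based); the identifiers and task names are hypothetical, and because operators can switch modes during a task, the lookup gives the typical level rather than a fixed one. The `STRESS_RELEVANCE` ranking is a qualitative reading of the stress example in section 5.1.4.1.

```python
from enum import Enum


class Situation(Enum):
    ROUTINE = "routine"
    TRAINED_FOR_PROBLEM = "trained-for problem"
    NOVEL_PROBLEM = "novel problem"


class ControlMode(Enum):
    CONSCIOUS = "conscious"
    MIXED = "mixed"  # also referred to as "subconscious"
    AUTOMATIC = "automatic"


class Lhp(Enum):
    SKILL = "skill based"
    RULE = "rule based"
    KNOWLEDGE = "knowledge based"


# Typical pairing read from Figure 5-4 (the diagonal of the matrix).
TYPICAL = {
    Situation.ROUTINE: (Lhp.SKILL, ControlMode.AUTOMATIC),
    Situation.TRAINED_FOR_PROBLEM: (Lhp.RULE, ControlMode.MIXED),
    Situation.NOVEL_PROBLEM: (Lhp.KNOWLEDGE, ControlMode.CONSCIOUS),
}

# Ordinal reading of the stress example: higher means the PSF "stress" matters more.
STRESS_RELEVANCE = {Lhp.SKILL: 0, Lhp.RULE: 1, Lhp.KNOWLEDGE: 2}


def typical_lhp(situation: Situation) -> Lhp:
    return TYPICAL[situation][0]


# Hypothetical task tags for an operations analysis.
tasks = {
    "routine pass commanding": Situation.ROUTINE,
    "safe-mode recovery with contingency procedure": Situation.TRAINED_FOR_PROBLEM,
    "anomaly without procedure": Situation.NOVEL_PROBLEM,
}
for task, situation in tasks.items():
    lhp = typical_lhp(situation)
    print(f"{task}: {lhp.value} (stress relevance {STRESS_RELEVANCE[lhp]})")
```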

5.1.4.3.2 Skill based performance

Skill Based Performance involves the ability to carry out a task using smooth, automated and highly integrated patterns. Triggered by a specific event, skill-based processing is normally performed without conscious monitoring. During Skill Based Performance, human errors are often caused by attentional slips and/or lapses of memory.

NOTE Skill-based behaviour is characterized by a quasi-instinctive respons

...
