CEN/TR 17465:2020

Space - Use of GNSS-based positioning for road Intelligent Transport Systems (ITS) - Field tests definition for basic performance

The purpose is to define the tests to be performed in order to evaluate the performance of GNSS-based positioning terminals (GBPT) for road applications. To fully define the tests, this task addresses the test strategy, the facilities to be used, the test scenarios (e.g. environments and characteristics, which shall allow different tests to be compared), and the test procedures. The defined tests and process will be validated by performing various in-field tests. The defined tests focus essentially on accuracy, integrity and availability, as required in the statement of work included in the invitation to tender.

This document will contribute to:

- The consolidation of EN 16803-1: "Definitions and system engineering procedures for the establishment and assessment of performances"

- The elaboration of EN 16803-2: "Assessment of basic performances of GNSS-based positioning terminals"

- The elaboration of EN 16803-3: "Assessment of security performances of GNSS-based positioning terminals".

Definition von Feldtests für Grundleistungen

Espace - Utilisation de la localisation basée sur les GNSS pour les systèmes de transport routiers intelligents - Définition des essais terrains pour les performances générales

Vesolje - Ugotavljanje položaja z uporabo sistema globalne satelitske navigacije (GNSS) pri inteligentnih transportnih sistemih (ITS) v cestnem prometu - Opredelitev terenskih preskusov za osnovno zmogljivost

General Information

- Status: Published

- Publication Date: 21-Apr-2020

- Technical Committee: CEN/CLC/TC 5 - Space

- Current Stage: 6060 - Definitive text made available (DAV) - Publishing

- Start Date: 22-Apr-2020

- Due Date: 16-Jan-2021

- Completion Date: 22-Apr-2020

Overview

CEN/TR 17465:2020 - "Space - Use of GNSS‑based positioning for road Intelligent Transport Systems (ITS) - Field tests definition for basic performance" - defines a standardized test framework to evaluate GNSS‑based positioning terminals (GBPT) used in road ITS. The Technical Report specifies test strategy, facilities, operational scenarios, procedures and validation methods. The focus is on assessing basic performance metrics such as accuracy, integrity and availability, with in-field validation and reproducible laboratory approaches (including record & replay).

Key topics and technical requirements

- Test strategy and stakeholder roles: guidance on test combinatorics, responsibilities across industry value chain and a recommended homologation approach for complex hybrid GBPT systems.

- Operational scenarios: standardized environments, trajectories, road selections and environmental conditions to ensure repeatable and comparable field tests (including TTFF - Time To First Fix - assessments).

- Metrics and measurement: definitions and computation methods for accuracy, integrity, availability, continuity and timing metrics; guidance on data collection and the receiver outputs required for metric computation.

- Test facilities and equipment: overview of in‑field data collection tools, laboratory test beds, and log & replay solutions used to reproduce GNSS stimuli and assess DUT (Device Under Test) performance.

- Test and validation procedures: step‑by‑step field test plans, data analysis/archiving, environment characterization, replay testing, statistical representativity and quality checks for the reference trajectory.

- Reporting: standardized synthesis report layout, DUT identification, test conditions, raw data references and tools used for post‑processing.

Practical applications

- Homologation and performance verification of GNSS receivers used in road ITS (navigation, tolling, fleet management, safety services).

- Comparative benchmarking of GBPT products (manufacturers, OEMs and test laboratories).

- Development of test labs and log & replay facilities to accelerate R&D and certification.

- Supporting regulators and road authorities in defining performance requirements for ITS deployments.

- Informing procurement, system integration and quality assurance processes where GNSS performance (accuracy, integrity, availability) is critical.

Who should use this standard

- GNSS receiver manufacturers and system integrators

- ITS product developers and OEMs

- Independent test laboratories and certification bodies

- Road operators, transport authorities and regulators

- R&D teams and academic researchers in positioning and ITS

Related standards

- EN 16803‑1: Definitions and system engineering procedures for performance assessment

- EN 16803‑2: Assessment of basic performances of GNSS‑based positioning terminals

- EN 16803‑3: Assessment of security performances of GNSS‑based positioning terminals

- CEN/CLC/JTC 5 technical committee outputs and national adoption (SIST)

Keywords: GNSS, GBPT, ITS, field tests, accuracy, integrity, availability, TTFF, record & replay, test facilities, metrics, CEN/TR 17465:2020.


Frequently Asked Questions

CEN/TR 17465:2020 is a technical report published by the European Committee for Standardization (CEN). Its full title is "Space - Use of GNSS-based positioning for road Intelligent Transport Systems (ITS) - Field tests definition for basic performance". It defines the tests to be performed to evaluate the basic performance (essentially accuracy, integrity and availability) of GNSS-based positioning terminals (GBPT) for road applications, and supports the consolidation of EN 16803-1 and the elaboration of EN 16803-2 and EN 16803-3.


CEN/TR 17465:2020 is classified under the following ICS (International Classification for Standards) categories: 03.220.20 - Road transport; 33.060.30 - Radio relay and fixed satellite communications systems; 35.240.60 - IT applications in transport. The ICS classification helps identify the subject area and facilitates finding related standards.

CEN/TR 17465:2020 is associated with the following European legislation: Standardization Mandates: M/496. When a standard is cited in the Official Journal of the European Union, products manufactured in conformity with it benefit from a presumption of conformity with the essential requirements of the corresponding EU directive or regulation.


Standards Content (Sample)

SLOVENIAN STANDARD

01-July-2020

Vesolje - Ugotavljanje položaja z uporabo sistema globalne satelitske navigacije (GNSS) pri inteligentnih transportnih sistemih (ITS) v cestnem prometu - Opredelitev terenskih preskusov za osnovno zmogljivost

Space - Use of GNSS-based positioning for road Intelligent Transport Systems (ITS) - Field tests definition for basic performance

Definition von Feldtests für Grundleistungen

Espace - Utilisation de la localisation basée sur les GNSS pour les systèmes de transport routiers intelligents - Définition des essais terrains pour les performances générales

This Slovenian standard is identical to: CEN/TR 17465:2020

ICS:

33.070.40 Satellite

35.240.60 IT applications in transport

2003-01. Slovenian Institute for Standardization. Reproduction of this standard, in whole or in part, is not permitted.

TECHNICAL REPORT

CEN/TR 17465

RAPPORT TECHNIQUE

TECHNISCHER BERICHT

April 2020

ICS 03.220.20; 33.060.30; 35.240.60

English version

Space - Use of GNSS-based positioning for road Intelligent Transport Systems (ITS) - Field tests definition for basic performance

Espace - Utilisation de la localisation basée sur les GNSS pour les systèmes de transport routiers intelligents - Définition des essais terrains pour les performances générales

Definition von Feldtests für Grundleistungen

This Technical Report was approved by CEN on 23 February 2020. It has been drawn up by the Technical Committee CEN/CLC/JTC 5.

CEN and CENELEC members are the national standards bodies and national electrotechnical committees of Austria, Belgium, Bulgaria, Croatia, Cyprus, Czech Republic, Denmark, Estonia, Finland, France, Germany, Greece, Hungary, Iceland, Ireland, Italy, Latvia, Lithuania, Luxembourg, Malta, Netherlands, Norway, Poland, Portugal, Republic of North Macedonia, Romania, Serbia, Slovakia, Slovenia, Spain, Sweden, Switzerland, Turkey and United Kingdom.

CEN-CENELEC Management Centre: Rue de la Science 23, B-1040 Brussels

© 2020 CEN/CENELEC. All rights of exploitation in any form and by any means reserved worldwide for CEN national Members and for CENELEC Members. Ref. No. CEN/TR 17465:2020 E

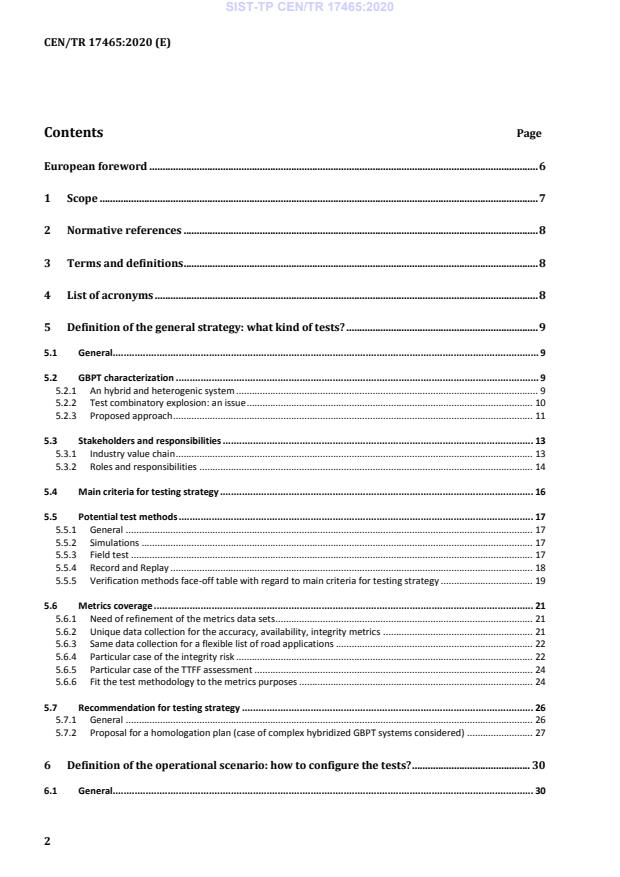

Contents

European foreword

1 Scope

2 Normative references

3 Terms and definitions

4 List of acronyms

5 Definition of the general strategy: what kind of tests?

5.1 General

5.2 GBPT characterization

5.2.1 A hybrid and heterogeneous system

5.2.2 Test combinatory explosion: an issue

5.2.3 Proposed approach

5.3 Stakeholders and responsibilities

5.3.1 Industry value chain

5.3.2 Roles and responsibilities

5.4 Main criteria for testing strategy

5.5 Potential test methods

5.5.1 General

5.5.2 Simulations

5.5.3 Field test

5.5.4 Record and Replay

5.5.5 Verification methods face-off table with regard to main criteria for testing strategy

5.6 Metrics coverage

5.6.1 Need for refinement of the metrics data sets

5.6.2 Unique data collection for the accuracy, availability, integrity metrics

5.6.3 Same data collection for a flexible list of road applications

5.6.4 Particular case of the integrity risk

5.6.5 Particular case of the TTFF assessment

5.6.6 Fit the test methodology to the metrics purposes

5.7 Recommendation for testing strategy

5.7.1 General

5.7.2 Proposal for a homologation plan (case of complex hybridized GBPT systems considered)

6 Definition of the operational scenario: how to configure the tests?

6.1 General

6.2 Preamble

6.3 Status of definition of an operational scenario: discussions

6.3.1 General

6.3.2 Set-up conditions

6.3.3 Trajectory/motion

6.3.4 Environmental conditions

6.3.5 Synthesis on the construction of the operational scenario

6.4 Descriptions of operational scenarios expected for record and replay testing

6.4.1 General

6.4.2 Set-up conditions

6.4.3 Selection of roads

6.4.4 Selection of kinds of trips

6.4.5 Crossing selected roads and trips: proposed organization of tests

6.5 Operational scenarios for TTFF field tests

6.5.1 General

6.5.2 Set-up conditions

6.5.3 Trajectories

6.5.4 Environmental conditions

6.5.5 Proposed combinations

7 Definition of the metrics and related tools: what to measure?

7.1 General

7.2 Accuracy metrics

7.2.1 General

7.2.2 Integrity metrics

7.3 Availability metrics

7.4 Continuity metrics

7.5 Timing metrics

7.6 Synthesis on the receiver outputs to be collected

7.7 Synthesis of the metrics computation tool functions

8 Definition of the test facilities: which equipment to use?

8.1 General

8.2 Equipment panorama and characterization

8.2.1 Equipment for in-field data collection

8.2.2 Laboratory Test-beds

8.2.3 Log and Replay Solutions

8.3 Equipment Justification

8.3.1 Equipment for in-field data collection

8.3.2 “Log & Replay” Solutions

9 Definition of the test procedures: how to proceed to the tests?

9.1 General

9.2 Field tests for recording the in-field data of the standardized operational scenario

9.2.1 General

9.2.2 Test plan

9.2.3 Good functioning verification

9.2.4 Field test conducting

9.2.5 Data analysis and archiving

9.3 Characterization of environment

9.4 Replay step: assessing the DUT performances

10 Definition of the validation procedures: how to be sure of the results?

10.1 General

10.2 Presentation of a scenario: rush time in Toulouse

10.3 Quality of the reference trajectory

10.4 Availability, regularity of the DUT’s outputs for the metrics computations

10.5 Statistical representativeness of the results

11 Definition of the synthesis report: how to report the results of the tests?

11.1 General

11.2 Identification of the DUT

11.3 Identification of tests

11.4 Person responsible for tests

11.5 Identification of the test stimuli

11.6 Report of test conditions

11.7 Identification of the files including the test raw data

11.8 Identification of tools used in post processing for computing the metrics

11.9 Results

11.10 Date of report, responsible and contact, lab address and signatures

Annex A (normative) ETSI test definition for GBLS

A.1 Synthetic reporting of the ETSI specification for the operational environment and test conditions

Annex B (normative) Detailed criteria for testing strategy

B.1 First criterion: trust in metrology

B.1.1 Reproducible results

B.1.2 Representative and meaningful results for the road applications

B.1.3 Reliable procedure for the metric assessment

B.2 Second criterion: reasonable cost for manufacturers

B.2.1 Cost of test benches

B.2.2 Cost of the test operations

B.2.3 Additional costs

B.3 Third criterion: clear responsibility and sharing between actors

Annex C (normative) Size of data collection (GMV contribution)

C.1 Data campaign definition: sample size

C.2 Statistical significance

Bibliography

European foreword

This document (CEN/TR 17465:2020) has been prepared by Technical Committee CEN/CLC/JTC 5 “Space”, the secretariat of which is held by DIN.

Attention is drawn to the possibility that some of the elements of this document may be the subject of patent rights. CEN shall not be held responsible for identifying any or all such patent rights.

1 Scope

This document is the output of WP1.2 “Field test definition for basic performances” of the GP-START project.

The GP-START project aims to prepare the draft standards CEN/CENELEC/TC5 16803-2 and 16803-3 on the Use of GNSS-based positioning for road Intelligent Transport Systems (ITS). The specific target of this document is Part 2: Assessment of basic performances of GNSS-based positioning terminals.

This document constitutes the field test definition part of the Technical Report on metrics and performance levels detailed definition and field test definition for basic performances.

The purpose of WP1.2 is to define the field tests to be performed in order to evaluate the performance of GNSS-based positioning terminals (GBPT) for road applications. To fully define the tests, this task addresses the test strategy, the facilities to be used, the test scenarios (e.g. environments and characteristics, which should allow different tests to be compared), and the test procedures. The defined tests and process will be validated by performing various in-field tests. The defined tests focus essentially on accuracy, integrity and availability, as required in the statement of work included in the invitation to tender.

This document will contribute to:

• the consolidation of EN 16803-1: Definitions and system engineering procedures for the establishment and assessment of performances;

• the elaboration of EN 16803-2: Assessment of basic performances of GNSS-based positioning terminals;

• the elaboration of EN 16803-3: Assessment of security performances of GNSS-based positioning terminals.

The document is structured as follows:

• Clause 1 is the present Scope;

• Clause 5 defines and justifies the global testing strategy;

• Clause 6 defines and justifies the retained operational scenario;

• Clause 7 defines the metrics and related tools;

• Clause 8 defines the required test facilities;

• Clause 9 defines the test procedures;

• Clause 10 defines the validation procedures;

• Clause 11 defines how to report the test results.

2 Normative references

The following documents are referred to in the text in such a way that some or all of their content constitutes requirements of this document. For dated references, only the edition cited applies. For undated references, the latest edition of the referenced document (including any amendments) applies.

EN 16803-1:2016, Space - Use of GNSS-based positioning for road Intelligent Transport Systems (ITS) - Part 1: Definitions and system engineering procedures for the establishment and assessment of performances

3 Terms and definitions

No terms and definitions are listed in this document.

ISO and IEC maintain terminological databases for use in standardization at the following addresses:

• ISO Online browsing platform: available at http://www.iso.org/obp

• IEC Electropedia: available at http://www.electropedia.org/

4 List of acronyms

▪ GNSS Global navigation satellite system

▪ GPS Global positioning system

▪ SBAS Satellite based augmentation system

▪ COTS Commercial off-the-shelf

▪ GBPT GNSS based positioning terminal

▪ OTS Off-the-shelf

▪ ITS Intelligent transport systems

▪ ETSI European telecommunications standards institute

▪ A-GNSS Assisted GNSS

▪ FAR False alarm rate

▪ PFA Probability of false alarm

▪ PMD Probability of missed detection

▪ PPK Post processing kinematic

▪ AIA Accuracy, integrity, availability

▪ SW Software

▪ LoS Line of sight

5 Definition of the general strategy: what kind of tests?

5.1 General

The technical solutions for road ITS targeted by the standard are becoming increasingly complex.

One consequence is that their performance and behaviour no longer depend only on their design, but also, and strongly, on many external situations and parameters that the stakeholders do not control. Among these parameters are the status of the worldwide space systems (GNSS), the physical atmospheric conditions, and other environmental conditions in the vicinity of the vehicle (traffic, tree foliage, nearby buildings, etc.).

For example, any realization of a field test procedure on a given product at a given date and time will give a different result from the same test procedure applied to the same product at the same location at a different date and time: the underlying stochastic process is neither ergodic nor stationary.

The consequence is that, if a pure field test strategy were chosen as the preferred solution for performance assessment aimed at device homologation, the analysis of the test results would require specialists and could frequently lead to intangible and unreliable interpretations, which is the opposite of metrology.

One way to avoid this issue is to place total trust in simulations, in which all test conditions are controlled and which can be perfectly repeated. ETSI addressed a similar issue during its standardization process for GNSS-based Location Based Services (see ETSI TS 103 246-1, -2, -3, -4, -5). As a conclusion of this work, ETSI selected a solution based exclusively on simulations (see Annex A).

However, since the real-life environment remains complex to simulate, a pure simulation technique leads to scenarios with a very large number of parameters to set up, which induces a risk of human manipulation errors and, in any case, leaves a residual lack of representativeness of reality.

New paradigms therefore have to be seriously considered. This Clause 5 aims to propose solutions by analysing the best way to select and phase the tests to be performed in a standardized performance assessment.

5.2 GBPT characterization

5.2.1 A hybrid and heterogeneous system

According to Figure 3 of EN 16803-1, a positioning-based road ITS system is the integration of the GBPT into the road ITS application. Moreover, the GBPT itself is presented as a complex assembly of sensors with multiple interfaces to external systems.

The positioning level, on which this part of the document focuses for the definition of tests, is still an assembly of more or less complex components, at least one (1) of which is a GNSS sensor.

The generic architecture of a road ITS system (EN 16803-1, Figure 4) shows directly that the evaluation of the metrics related to positioning (accuracy, integrity, availability) will be complex, since it:

• covers intermediate outputs (position, speed) of a global integrated system, which are likely to be difficult to capture in future finally packaged and installed products: specific prescriptions and communication protocols should be standardized;

• depends on worldwide, independently evolving infrastructures, namely the GNSS infrastructure and telecommunication networks, which interact with each other (assisted and differential GNSS) and with the system itself, and in particular strongly influence its performance;

• covers sensing of the external environment and consequently depends on a huge number of external environmental conditions (radio propagation for GNSS and telecommunications; light, fog and dust for cameras and LIDAR; etc.);

• covers at the same time sensing of the vehicle motion through odometers and inertial sensors, and consequently depends on the vehicle and the way it is driven, as well as on additional external environmental conditions (e.g. meteorological conditions, or road holding for the odometer).

In EN 16803-1:2016, Clause 8 presents a long (though not exhaustive) list of parameters that are likely to impact the definition of the tests.

Reproducing together, in a single integrated lab test facility, the effects of worldwide radio infrastructures (as they currently exist or will evolve in the future), their local radio propagation in the road environment, motion sensing by inertial sensors, and other phenomena such as climatic effects on the odometer or imaging for computer vision is unfeasible today.

Lab facilities for testing each sensor under its relevant environmental conditions exist today, but only separately; they are never integrated.

Figure 1 — Typical test benches in laboratory facilities

Left to right: GNSS signal generation (ESA radio navigation lab); radio-oriented for radio navigation or telecommunications; motion-oriented and climatic-oriented for inertial sensors (AIAA 092407); computer-vision-oriented (JPL robotics facilities)

However, techniques such as the fusion of sensors and data (e.g. maps) appear increasingly indispensable to leverage the potential of automotive applications and businesses, given the weaknesses of the GNSS signal propagation environment.

5.2.2 Test combinatory explosion: an issue

The purpose of sensor fusion is to make each sensor contribute to (ideally improve) the performance of the hybridized solution. However, each sensor has two (2) types of errors:

• principle errors: imperfections of the physical laws used to model the real world (e.g. GNSS propagation is modelled as line of sight, except where refraction, as in the ionosphere, or reflection, as with multipath, is encountered; an accelerometer measures only the sum of motion acceleration and gravity; etc.);

• measurement errors: imperfections in design and manufacturing (e.g. biases and scale factors in inertial sensors, thermal noise in electronics, etc.).

Exteroceptive sensors (GNSS sensors, cameras, LIDAR) measure physical phenomena of their external environment to deduce location data; they can be compared to the eyes or nose of an animal.

Proprioceptive sensors (inertial units, odometers) measure changes in physical parameters due to motion in order to deduce location data; they can be compared to the sensors in the muscles of an animal.

This applies in particular when the performance of sensors other than GNSS, as required for hybridized systems, is considered.

By capturing information from many parameters of the external environment or from the motion, and because many physical-principle errors of the measurements exist in reality, sensor fusion also multiplies the risks of performance degradation. A strict performance assessment should consequently measure performance, sensor by sensor, in every combination of favourable and unfavourable conditions for good measurements. For a GBPT, these combinations must further be combined with the multiplicity of road usages and the variety of road environments, and finally with the multiplicity of installations and set-ups in the vehicles. The set of possible combinations is then so vast that it becomes unrealistic to build, reliably and exhaustively, the list of test scenarios needed to compute the performance metrics correctly.

This combinatorial explosion affects the lab tests (in terms of facilities and scenario diversity) as much as the field tests or the simulation techniques, for which, in addition, some physical effects are very complex to model representatively.
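The scale of the problem can be illustrated with a simple full-factorial count. The sketch below is a minimal Python illustration under stated assumptions: the factor names and numbers of levels (`SENSOR_CONDITIONS`, `OTHER_FACTORS`) are hypothetical placeholders, not values taken from EN 16803-1 or from this report, and the helper `scenario_count` is introduced only for illustration. It multiplies the number of levels of every factor, which gives the size of an exhaustive test matrix.

```python
from math import prod

# HYPOTHETICAL factors and level counts, for illustration only;
# they are not taken from EN 16803-1 or from this report.
SENSOR_CONDITIONS = {
    "gnss": 2,       # favourable / unfavourable conditions
    "inertial": 2,
    "odometer": 2,
    "camera": 2,
    "lidar": 2,
}
OTHER_FACTORS = {
    "road_usage": 5,
    "road_environment": 6,
    "installation_setup": 4,  # antenna position, mounting, ...
}


def scenario_count(*factor_tables: dict[str, int]) -> int:
    """Size of a full-factorial test matrix: product of the levels of all factors."""
    return prod(levels for table in factor_tables for levels in table.values())


if __name__ == "__main__":
    print("sensor conditions only:", scenario_count(SENSOR_CONDITIONS))
    print("full factorial design:", scenario_count(SENSOR_CONDITIONS, OTHER_FACTORS))
```

With these illustrative values, five binary sensor conditions already give 32 combinations, and crossing them with road usage, road environment and installation gives 3840 scenarios, before any repetition for statistical significance. Each additional binary factor doubles the count.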

This implies that field tests, in which the sensors capture during each test one true representation of real life (namely one instance, at a given instant and location, of all the parameters that control the GBPT performance), provide a unique and non-reproducible representation. A single test, whatever its length, is therefore unable to provide a complete characterization of the statistical properties expected in the metric definition.

The GMV experiment in Madrid (TR WP1/D1) illustrates this very well: 12 runs of a similar procedure at the same location gave 12 distinct cumulative distribution functions of the accuracy metric:

Figure 2 — Diversity of field tests results [TR WP1/D1]

This experiment shows that the metric itself, as assessed by field tests, becomes a random function with a significant dispersion, and that the field test approach yields a non-ergodic random process, which prevents it from being the best solution for the selected metric assessment.
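The kind of dispersion shown in Figure 2 can be quantified by computing the accuracy metric separately for each run and examining its spread across runs. The sketch below is a minimal Python illustration, assuming that the accuracy metric is an empirical percentile (here the 95th, nearest-rank method) of the horizontal position error. The per-run error series are synthetic, generated with a hypothetical per-run error scale; they are not the GMV Madrid data. The names `percentile_nearest_rank`, `per_run_metric` and `synthetic_runs` are hypothetical.

```python
import math
import random
import statistics


def percentile_nearest_rank(errors: list[float], p: float) -> float:
    """Empirical p-th percentile (0 < p <= 100) using the nearest-rank method."""
    ordered = sorted(errors)
    rank = math.ceil(p / 100.0 * len(ordered))  # 1-based rank
    return ordered[max(rank, 1) - 1]


def per_run_metric(runs: list[list[float]], p: float = 95.0) -> list[float]:
    """Horizontal-error percentile computed separately for each field-test run."""
    return [percentile_nearest_rank(run, p) for run in runs]


def synthetic_runs(n_runs: int, n_epochs: int, seed: int = 1) -> list[list[float]]:
    """SYNTHETIC data only: each run draws its own error scale to mimic
    changing satellite geometry, multipath and traffic between runs."""
    rng = random.Random(seed)
    runs = []
    for _ in range(n_runs):
        scale = rng.uniform(1.0, 4.0)  # hypothetical per-run error scale (m)
        runs.append([abs(rng.gauss(0.0, scale)) for _ in range(n_epochs)])
    return runs


if __name__ == "__main__":
    runs = synthetic_runs(n_runs=12, n_epochs=3600)
    p95 = per_run_metric(runs)
    print("per-run 95th percentile (m):", [round(v, 2) for v in p95])
    print(f"min {min(p95):.2f} m, max {max(p95):.2f} m, "
          f"stdev {statistics.stdev(p95):.2f} m")
```

Because each synthetic run draws a different error scale, the per-run percentiles spread out even though every run follows the same procedure. This mirrors, in a deliberately simplified way, why a single field test cannot characterize the statistics of the metric.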

A more practical approach should therefore be agreed in the standard, avoiding useless and unreasonable testing effort and costs.

Ultimately, the combinatorial explosion prevents the metrics from covering exhaustively all the environmental situations that impact performance.

5.2.3 Proposed approach

In EN 16803-1, the standardization working group has already clearly separated the performance of the application into two (2) layers:

• the positioning level with the GBPT;

• the ITS application level.

The problem described above calls for an additional breakdown into several sub-problems.

The proposed approach for assessing the performance of GBPT (hybridized systems) through testing is to impose a dichotomy between the additive sensors (supplementary with respect to geolocation) on the one hand, and the primary sensor (GNSS) and the positioning solution on the other hand, as identified in Figure 3.

Figure 3 — Proposed approach for the testing requirements

A gradual verification and homologation process should therefore be adopted for future ITS applications.

This process could support three assessment levels:

• additive sensor level:

o inertial sensors, odometers and vision sensors should each be evaluated separately in terms of performance, with their own metrics (e.g. measurement errors on ranges to landmarks for LIDAR, video, opening range[…], accelerometer biases, gyro drift, etc.);

o at their manufacturing level;

o covering quasi-exhaustively, for example with lab tests, the specific environmental conditions that affect their performance, up to their functioning limits (weather, road grip, vibrations in addition to motion, dust, etc.);

o leading to a separate characterization of the sensors with labels and classifications;

o leading to the proposal, for each sensor device, of an ‘equivalent reference additive sensor’ and/or an ‘additive sensor model’.

• GNSS-based positioning level (optionally including fusion of sensors and data):

o for test purposes, the GBPT device under test shall be able to receive GNSS signals (radiated or conducted, depending on the antenna integration level);

o for test purposes, the GBPT device under test shall be able to receive ‘reconstructed test stimuli’ in place of each integrated additive sensor (which requires standardized input/output protocols);

o the scenario list shall represent a selected (not exhaustive) set of agreed, typical but realistic combinations of operational situations as inputs/outputs of all sensors (including GNSS), so that sensor fusion can be tested without trying to reach an exhaustive set of situations.

• application level: PVT error models and sensitivity analysis, as proposed in EN 16803-1.

In the above proposal, one should understand that:

• ‘equivalent reference additive sensor’ means a COTS sensor (among a list of homologated devices)

of same nature (inertial, odometer, visual) and same class of performances giving similar

availability behaviour and performances statistic behaviours, possibly used in GBPT field test for

capturing data;

• ‘additive sensor model’ (also called ‘additive sensor error model’) means an open software model dedicated to one kind of sensor (inertial, odometer, etc.), whose inputs are physical parameters of the environment, such as temperature, pressure, humidity […], which can easily be measured during a data collection (possibly during the GBPT field tests) and which are sufficient to describe the performance behaviour and availability of the sensor;

• ‘reconstructed test stimuli’ are data issued either from the ‘equivalent reference additive sensor’ directly capturing the environment during field tests, or from the ‘additive sensor model’ fed with data acquired during field tests (temperature, pressure, humidity, etc.).
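To make the three definitions above more concrete, the following Python sketch shows one way ‘reconstructed test stimuli’ could be produced for a single additive sensor. Data captured by an ‘equivalent reference additive sensor’ is replayed when available. Otherwise, an additive sensor model is driven by environmental parameters logged during the field test. All elements of the sketch are hypothetical and chosen only for illustration: the choice of a yaw-rate gyro, the linear temperature-dependent bias, its coefficients, the noise level and the names `EnvSample`, `GyroErrorModel` and `reconstruct_stimuli`. The true yaw rates are assumed to come from a reference trajectory.

```python
"""Hypothetical sketch of 'reconstructed test stimuli' for one additive sensor.

The gyro bias model, its coefficients and the noise level are illustrative
assumptions only; they are not sensor models defined by this report.
"""
import random
from dataclasses import dataclass
from typing import List, Optional, Sequence


@dataclass
class EnvSample:
    """Environmental parameters logged during a GBPT field test."""
    temperature_c: float
    pressure_hpa: float
    humidity_pct: float


@dataclass
class GyroErrorModel:
    """Hypothetical additive sensor (error) model for a yaw-rate gyro."""
    bias_at_25c_dps: float = 0.02       # assumed bias at 25 degC, in deg/s
    bias_temp_coeff_dps: float = 0.001  # assumed bias drift per degC, in deg/s
    noise_std_dps: float = 0.05         # assumed white-noise std, in deg/s

    def bias(self, env: EnvSample) -> float:
        # Toy model: only temperature is used; pressure and humidity are ignored.
        return self.bias_at_25c_dps + self.bias_temp_coeff_dps * (env.temperature_c - 25.0)

    def stimulus(self, true_rate_dps: float, env: EnvSample, rng: random.Random) -> float:
        # True rate from the reference trajectory, corrupted by bias and noise.
        return true_rate_dps + self.bias(env) + rng.gauss(0.0, self.noise_std_dps)


def reconstruct_stimuli(
    true_rates: Sequence[float],
    envs: Sequence[EnvSample],
    model: GyroErrorModel,
    reference_capture: Optional[Sequence[float]] = None,
    seed: int = 0,
) -> List[float]:
    """Return the data fed to the GBPT in place of its integrated gyro."""
    if reference_capture is not None:
        # Data from an 'equivalent reference additive sensor' is replayed as-is.
        return list(reference_capture)
    if len(true_rates) != len(envs):
        raise ValueError("one environmental sample is needed per epoch")
    rng = random.Random(seed)  # fixed seed makes the replay reproducible
    return [model.stimulus(w, e, rng) for w, e in zip(true_rates, envs)]
```

Setting `noise_std_dps=0.0` makes the output deterministic. This is convenient for checking how the GBPT reacts to the modelled bias alone. A real sensor model may also use pressure, humidity or other parameters.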

At positioning level, only an agreed selection of typical operational situations would be performed in field tests (or recorded for 'Record and Replay' solutions), thus avoiding a combinatorial explosion. This necessarily assumes that, at the lower manufacturing level, sensors have been submitted to a quasi-exhaustive coverage of environmental conditions, including the limits of the operating domain, in order to gain sufficient trust in a sensor model or in a reference sensor.
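The combinatorial explosion mentioned above can be illustrated with a simple count. In this Python sketch, the factors, their levels and the agreed selection are purely hypothetical. With five factors of 5, 4, 3, 3 and 3 levels, a full factorial campaign would already require 540 scenarios. An agreed selection keeps only a handful of typical situations and relies on sensor-level characterization for the remaining environmental coverage.

```python
"""Hypothetical illustration of the combinatorial explosion of test scenarios."""
from itertools import product
from math import prod
from typing import Dict, List, Tuple

# Hypothetical factors and levels; none of them is prescribed by this report.
FACTORS: Dict[str, List[str]] = {
    "propagation": ["open sky", "suburban", "urban canyon", "tunnel exit", "forest"],
    "weather": ["dry", "rain", "fog", "snow"],
    "dynamics": ["static", "stop-and-go", "highway"],
    "temperature": ["cold", "nominal", "hot"],
    "road_grip": ["dry", "wet", "icy"],
}

# Hypothetical agreed selection of typical operational situations,
# one level per factor, in the same order as FACTORS.
SELECTED: List[Tuple[str, ...]] = [
    ("open sky", "dry", "highway", "nominal", "dry"),
    ("urban canyon", "rain", "stop-and-go", "nominal", "wet"),
    ("suburban", "fog", "stop-and-go", "cold", "wet"),
    ("tunnel exit", "dry", "highway", "hot", "dry"),
    ("forest", "snow", "static", "cold", "icy"),
]


def full_factorial_size(factors: Dict[str, List[str]]) -> int:
    """Number of scenarios if every combination of levels were driven."""
    return prod(len(levels) for levels in factors.values())


def is_valid(scenario: Tuple[str, ...], factors: Dict[str, List[str]]) -> bool:
    """Check that a selected scenario uses exactly one known level per factor."""
    return len(scenario) == len(factors) and all(
        level in levels for level, levels in zip(scenario, factors.values())
    )


if __name__ == "__main__":
    total = full_factorial_size(FACTORS)
    # Cross-check the product formula against explicit enumeration.
    assert total == len(list(product(*FACTORS.values())))
    print(f"full factorial: {total} scenarios, agreed selection: {len(SELECTED)}")
```

Running it prints `full factorial: 540 scenarios, agreed selection: 5`. Each additional factor multiplies the total rather than adding to it. This is why exhaustive environmental coverage is pushed down to the sensor level.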

It is not in the scope of this document to go further in defining the process dedicated to the characterization at additive sensor level, which requires the involvement of the relevant industry (inertial units, vision, wheel coding). We only recommend opening a complementary process to standardize these issues, which are of utmost importance for addressing hybridized systems.

From now on, this document assumes that the proposed approach is retained, that ‘equivalent reference additive sensors’ and ‘additive sensor error models’ exist and are known, and that the standardized interfaces with additive sensors for test purposes are also known.

5.3 Stakeholders and responsibilities

5.3.1 Industry value chain

The life cycles of the development, production and integration of electronic devices are industrial features that depend on the business models usually applied in the corresponding market.

To illustrate the GBPT for road ITS applications, it is useful to refer to the value chain elaborated by the GSA (GNSS market report for road applications). This value chain makes it possible to identify the industrial actors, the roles they play and their responsibilities regarding the final positioning performance and testing:

Figure 4 — Road value chain (according to the GSA market report)

5.3.2 Roles and responsibilities

The various cycles of product industrialization have to be considered in order to define a cost-effective test strategy and phasing, up to an optional homologation or certification process for ITS operations by the appropriate authorities and government bodies.

Accordingly:

• The GNSS sensor (electronic radio chip) manufacturer bears a first major responsibility for the final GNSS positioning performance once the chip is embedded in the car, but can only test its production outside this context; it will never take full liability for either the ITS application or the road application. Having created a general-purpose device, it has no particular interest in investing in tests for road ITS applications, even though it cannot ignore this mass market. On the other hand, it has the best expertise on how the final GNSS performance depends on its product, on the one hand, and on its integration, on the other. Finally, it is not necessarily an antenna manufacturer, even if it issues recommendations for antennas.

• Likewise, the additive sensor manufacturers (inertial units, cameras, LIDAR, etc.) and data providers, which are not specifically mentioned in Figure 4 because they are outside the scope of the pure GNSS value chain, also bear a clear responsibility for the final GBPT positioning performance when hybridization or sensor fusion is used. They too may have created a general-purpose device rather than a road-specific one, and they have the best expertise on how the performance of their sensors depends on the products themselves, on the operational conditions in which they work (for example facing sunlight, fog and humidity for cameras) and, finally, on their integration in the vehicle. The sensor manufacturers shall provide a clear statement about this performance and the limits of operation, and should provide clear recommendations for integration and installation.

• The GBPT manufacturer integrates the GNSS sensors with other sensors and data, and bears a major responsibility for the final positioning performance on board the vehicle. It is more specialized in road applications than the sensor manufacturer, and will invest in specific means and models for stimulating the internal sensors and for testing the integration in the car, possibly recommending the best integration and installation. While keeping its distance from the final ITS application to avoid an overly customized product, it will remain independent from the car manufacturers in order to preserve its market potential, but will certainly build strong partnerships with some of them. It may, but need not, integrate the antenna, and its final performance also depends on some final installation concepts. It is therefore best placed to endorse a black-box labelling of its off-the-shelf product with respect to GBPT positioning performance, and to participate in the elaboration of a technical agreement for the integration and installation of the dedicated electronic devices, allowing the certification of a particular ITS application to be finalized. The main test effort with respect to the standardized assessment of accuracy, integrity and availability performance should take place with these manufacturers, considering a variety of propagation environments, although it should be noted that this is only an “off-the-shelf” evaluation, and therefore rather a “lab” evaluation. At this stage, conducted tests are preferable, using either simulations or replays.

• The important step to be carried out by the vehicle manufacturers (and by system integrators for the aftermarket, if any) should be the setup of the facility components for the final integration of the GBPT, such as power supply, communication bus connections, computing resources, antenna location and fixation, mechanical interactions between GBPT and vehicle, and location of the system components (antenna, inertial unit, etc.) with respect to the dynamic figures of the vehicle motion (centre of gravity, centre of rotation, etc.). None of these facilities is at the core of the positioning functions, but almost all of them have an impact on the final positioning performance assessment (for example the duration of power micro-cuts, or vibrations). The process will depend on each vehicle model (each first or modified installation). Therefore, even if the same off-the-shelf GBPT is retained for several models, a specific field test campaign of the final installation should be conducted to verify the overall positioning performance as a check of correct functioning. At this level, the goal should not be to cover every propagation situation with respect to the environment (atmospheric, rural/urban, etc.), but to cover real situations of radiated signals, electromagnetic compatibility between the connected components […] (for example between GNSS or A-GNSS and entertainment applications) and, finally, dynamics coverage for the inertial and other proprioceptive sensors.


...
