ISO/IEC 14496-11:2005

Information technology — Coding of audio-visual objects — Part 11: Scene description and application engine

ISO/IEC 14496-11:2005 specifies the coded representation of interactive audio-visual scenes and applications. It specifies the following tools: the coded representation of the spatio-temporal positioning of audio-visual objects as well as their behaviour in response to interaction (scene description); the coded representation of synthetic two-dimensional (2D) or three-dimensional (3D) objects that can be manifested audibly and/or visually; the Extensible MPEG-4 Textual (XMT) format, a textual representation of the multimedia content described in ISO/IEC 14496 using the Extensible Markup Language (XML); and a system level description of an application engine (format, delivery, lifecycle, and behaviour of downloadable Java byte code applications).

Technologies de l'information — Codage des objets audiovisuels — Partie 11: Description de scène et moteur d'application

General Information

- Status: Withdrawn

- Publication Date: 04-Dec-2005

- Withdrawal Date: 04-Dec-2005

- Current Stage: 9599 - Withdrawal of International Standard

- Start Date: 02-Nov-2015

- Completion Date: 12-Feb-2026

Relations

- Revises: ISO/IEC 14496-1:2001 - Information technology — Coding of audio-visual objects — Part 1: Systems (Effective Date: 15-Apr-2008)

Frequently Asked Questions

ISO/IEC 14496-11:2005 is a standard published by the International Organization for Standardization (ISO). Its full title is "Information technology — Coding of audio-visual objects — Part 11: Scene description and application engine".

ISO/IEC 14496-11:2005 is classified under the following ICS (International Classification for Standards) categories: 35.040 - Information coding; 35.040.40 - Coding of audio, video, multimedia and hypermedia information. The ICS classification helps identify the subject area and facilitates finding related standards.

ISO/IEC 14496-11:2005 is linked to the following standards: ISO/IEC 14496-11:2005/Amd 6:2009, ISO/IEC 14496-11:2005/Amd 5:2007, ISO/IEC 14496-11:2005/Amd 7:2010, ISO/IEC 14496-1:2001/Amd 7:2004, ISO/IEC 14496-1:2001/Amd 8:2004, ISO/IEC 14496-1:2001/Amd 3:2004, ISO/IEC 14496-11:2015, ISO/IEC 14496-1:2001/FDAM 2, ISO/IEC 14496-1:2001/Amd 1:2001, ISO/IEC 14496-1:2001/Amd 4:2003, and ISO/IEC 14496-1:2001. ISO/IEC 14496-11:2005/Amd 6:2009 and ISO/IEC 14496-11:2005/Amd 5:2007 are additionally listed under a second relation type. Understanding these relationships helps ensure you are using the most current and applicable version of the standard.

Standards Content (Sample)

INTERNATIONAL STANDARD ISO/IEC 14496-11

First edition 2005-12-15

Information technology — Coding of audio-visual objects — Part 11: Scene description and application engine

Technologies de l'information — Codage des objets audiovisuels — Partie 11: Description de scène et moteur d'application

© ISO/IEC 2005

© ISO/IEC 2005

All rights reserved. Unless otherwise specified, no part of this publication may be reproduced or utilized in any form or by any means, electronic or mechanical, including photocopying and microfilm, without permission in writing from either ISO at the address below or ISO's member body in the country of the requester.

ISO copyright office

Case postale 56 • CH-1211 Geneva 20

Tel. + 41 22 749 01 11

Fax + 41 22 749 09 47

E-mail copyright@iso.org

Web www.iso.org

Published in Switzerland

ii © ISO/IEC 2005 – All rights reserved

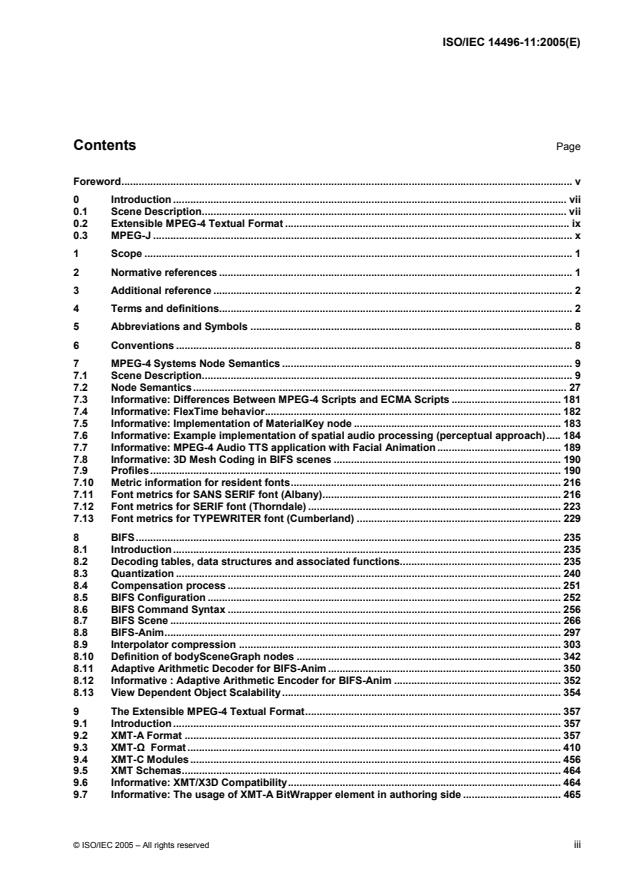

Contents Page

Foreword. v

0 Introduction . vii

0.1 Scene Description. vii

0.2 Extensible MPEG-4 Textual Format . ix

0.3 MPEG-J. x

1 Scope. 1

2 Normative references. 1

3 Additional reference . 2

4 Terms and definitions. 2

5 Abbreviations and Symbols . 8

6 Conventions . 8

7 MPEG-4 Systems Node Semantics . 9

7.1 Scene Description. 9

7.2 Node Semantics. 27

7.3 Informative: Differences Between MPEG-4 Scripts and ECMA Scripts . 181

7.4 Informative: FlexTime behavior. 182

7.5 Informative: Implementation of MaterialKey node . 183

7.6 Informative: Example implementation of spatial audio processing (perceptual approach). 184

7.7 Informative: MPEG-4 Audio TTS application with Facial Animation. 189

7.8 Informative: 3D Mesh Coding in BIFS scenes . 190

7.9 Profiles. 190

7.10 Metric information for resident fonts. 216

7.11 Font metrics for SANS SERIF font (Albany). 216

7.12 Font metrics for SERIF font (Thorndale) . 223

7.13 Font metrics for TYPEWRITER font (Cumberland) . 229

8 BIFS. 235

8.1 Introduction . 235

8.2 Decoding tables, data structures and associated functions. 235

8.3 Quantization . 240

8.4 Compensation process . 251

8.5 BIFS Configuration . 252

8.6 BIFS Command Syntax . 256

8.7 BIFS Scene. 266

8.8 BIFS-Anim. 297

8.9 Interpolator compression. 303

8.10 Definition of bodySceneGraph nodes . 342

8.11 Adaptive Arithmetic Decoder for BIFS-Anim . 350

8.12 Informative : Adaptive Arithmetic Encoder for BIFS-Anim . 352

8.13 View Dependent Object Scalability. 354

9 The Extensible MPEG-4 Textual Format. 357

9.1 Introduction . 357

9.2 XMT-A Format. 357

9.3 XMT-Ω Format . 410

9.4 XMT-C Modules. 456

9.5 XMT Schemas. 464

9.6 Informative: XMT/X3D Compatibility. 464

9.7 Informative: The usage of XMT-A BitWrapper element in authoring side . 465

© ISO/IEC 2005 – All rights reserved iii

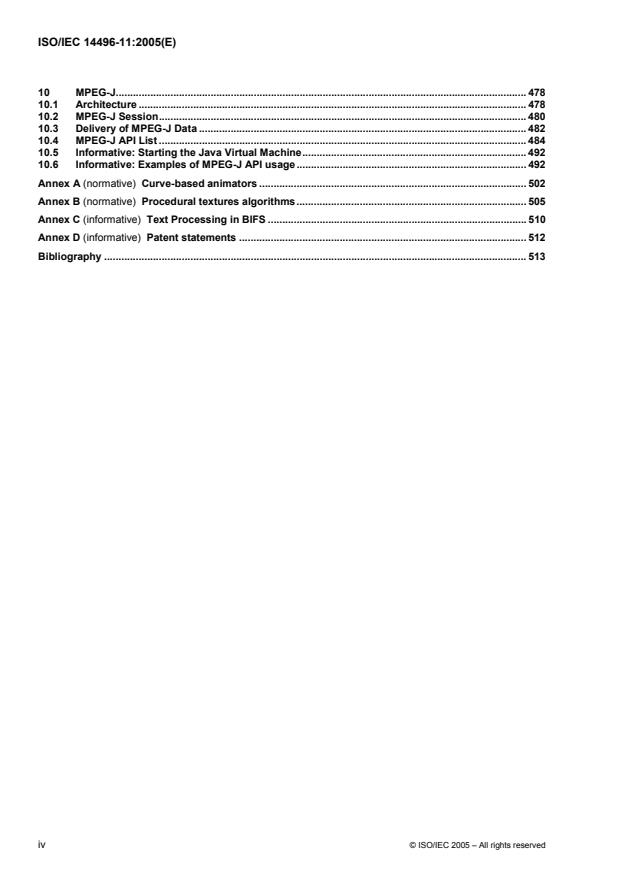

10 MPEG-J. 478

10.1 Architecture. 478

10.2 MPEG-J Session. 480

10.3 Delivery of MPEG-J Data . 482

10.4 MPEG-J API List. 484

10.5 Informative: Starting the Java Virtual Machine. 492

10.6 Informative: Examples of MPEG-J API usage. 492

Annex A (normative) Curve-based animators . 502

Annex B (normative) Procedural textures algorithms. 505

Annex C (informative) Text Processing in BIFS . 510

Annex D (informative) Patent statements . 512

Bibliography . 513

iv © ISO/IEC 2005 – All rights reserved

Foreword

ISO (the International Organization for Standardization) and IEC (the International Electrotechnical Commission) form the specialized system for worldwide standardization. National bodies that are members of ISO or IEC participate in the development of International Standards through technical committees established by the respective organization to deal with particular fields of technical activity. ISO and IEC technical committees collaborate in fields of mutual interest. Other international organizations, governmental and non-governmental, in liaison with ISO and IEC, also take part in the work. In the field of information technology, ISO and IEC have established a joint technical committee, ISO/IEC JTC 1.

International Standards are drafted in accordance with the rules given in the ISO/IEC Directives, Part 2.

The main task of the joint technical committee is to prepare International Standards. Draft International Standards adopted by the joint technical committee are circulated to national bodies for voting. Publication as an International Standard requires approval by at least 75 % of the national bodies casting a vote.

ISO/IEC 14496-11 was prepared by Joint Technical Committee ISO/IEC JTC 1, Information technology, Subcommittee SC 29, Coding of audio, picture, multimedia and hypermedia information.

ISO/IEC 14496 consists of the following parts, under the general title Information technology — Coding of audio-visual objects:

— Part 1: Systems

— Part 2: Visual

— Part 3: Audio

— Part 4: Conformance testing

— Part 5: Reference software

— Part 6: Delivery Multimedia Integration Framework (DMIF)

— Part 7: Optimized reference software for coding of audio-visual objects [Technical Report]

— Part 8: Carriage of ISO/IEC 14496 contents over IP networks

— Part 9: Reference hardware description [Technical Report]

— Part 10: Advanced Video Coding

— Part 11: Scene description and application engine

— Part 12: ISO base media file format

— Part 13: Intellectual Property Management and Protection (IPMP) extensions

— Part 14: MP4 file format

— Part 15: Advanced Video Coding (AVC) file format

— Part 16: Animation Framework eXtension (AFX)

— Part 17: Streaming text format

— Part 18: Font compression and streaming

— Part 19: Synthesized texture stream

— Part 20: Lightweight Application Scene Representation (LASeR) and Simple Aggregation Format (SAF)

The following parts are under preparation:

— Part 21: MPEG-J GFX

0 Introduction

0.1 Scene Description

0.1.1 Overview

ISO/IEC 14496 addresses the coding of audio-visual objects of various types: natural video and audio objects as well as textures, text, 2- and 3-dimensional graphics, and also synthetic music and sound effects. To reconstruct a multimedia scene at the terminal, it is hence not sufficient to transmit the raw audio-visual data to a receiving terminal. Additional information is needed in order to combine this audio-visual data at the terminal and construct and present to the end-user a meaningful multimedia scene. This information, called scene description, determines the placement of audio-visual objects in space and time and is transmitted together with the coded objects as illustrated in Figure 1. Note that the scene description only describes the structure of the scene. The action of assembling these objects in the same representation space is called composition. The action of transforming these audio-visual objects from a common representation space to a specific presentation device (i.e. speakers and a viewing window) is called rendering.

[Figure placeholder. Labels recoverable from the original image: audiovisual presentation, voice, sprite, 2D background, 3D objects, multiplexed downstream control / data, multiplexed upstream control / data, scene coordinate system (x, y, z), user events, video and audio compositors, projection plane, hypothetical viewer, display, speaker, user input.]

Figure 1 — An example of an object-based multimedia scene

Independent coding of different objects may achieve higher compression, and also brings the ability to manipulate content at the terminal. The behaviors of objects and their response to user inputs can thus also be represented in the scene description.

The scene description framework used in this part of ISO/IEC 14496 is based largely on ISO/IEC 14772-1:1997 (Virtual Reality Modeling Language, VRML).

0.1.2 Composition and Rendering

ISO/IEC 14496-11 defines the syntax and semantics of bitstreams that describe the spatio-temporal relationships of audio-visual objects. For visual data, particular composition algorithms are not mandated since they are implementation-dependent; for audio data, subclause 7.1.1.2.13 and the semantics of the AudioBIFS nodes normatively define the composition process. The manner in which the composed scene is presented to the user is not specified for audio or visual data. The scene description representation is termed “BInary Format for Scenes” (BIFS).

0.1.3 Scene Description

In order to facilitate the development of authoring, editing and interaction tools, scene descriptions are coded independently from the audio-visual media that form part of the scene. This permits modification of the scene without having to decode or process in any way the audio-visual media. The following clauses detail the scene description capabilities that are provided by ISO/IEC 14496-11.

0.1.3.1 Grouping of audio-visual objects

A scene description follows a hierarchical structure that can be represented as a graph. Nodes of the graph form audio-visual objects, as illustrated in Figure 2. The structure is not necessarily static; nodes may be added, deleted, or modified.

[Figure placeholder. Tree rooted at "scene" with second-level nodes person, 2D background, furniture, and audiovisual presentation, and leaf nodes voice, sprite, globe, and desk.]

Figure 2 — Logical structure of example scene
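The hierarchical, mutable structure described above can be sketched in a few lines of Python. This is only an illustration, not BIFS syntax: the `SceneNode` class, its methods, and the `volume` field are hypothetical, and the parent-child assignments (voice and sprite under person, globe and desk under furniture) are an assumption about Figure 2 rather than something the text states.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class SceneNode:
    """Hypothetical scene-graph node: a name, some fields, and child nodes."""
    name: str
    fields: Dict[str, object] = field(default_factory=dict)
    children: List["SceneNode"] = field(default_factory=list)

    def add(self, child: "SceneNode") -> "SceneNode":
        """Attach a child node and return it so calls can be chained."""
        self.children.append(child)
        return child

    def remove(self, name: str) -> bool:
        """Delete the first direct child with the given name."""
        for i, child in enumerate(self.children):
            if child.name == name:
                del self.children[i]
                return True
        return False

    def find(self, name: str) -> Optional["SceneNode"]:
        """Depth-first search for a node by name."""
        if self.name == name:
            return self
        for child in self.children:
            hit = child.find(name)
            if hit is not None:
                return hit
        return None

    def names(self) -> List[str]:
        """Pre-order list of node names."""
        out = [self.name]
        for child in self.children:
            out.extend(child.names())
        return out


# Build the example scene (parentage is assumed, see the note above).
scene = SceneNode("scene")
person = scene.add(SceneNode("person"))
person.add(SceneNode("voice", {"volume": 1.0}))
person.add(SceneNode("sprite"))
scene.add(SceneNode("2D background"))
furniture = scene.add(SceneNode("furniture"))
furniture.add(SceneNode("globe"))
furniture.add(SceneNode("desk"))

# The structure is not static: modify one node and delete another.
scene.find("voice").fields["volume"] = 0.5
furniture.remove("globe")
print(scene.names())
```

The three operations exercised at the end (add, modify, delete) correspond to the kinds of change the text says a scene description may undergo; how a real terminal receives such updates is specified elsewhere in the standard and is not modelled here.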

0.1.3.2 Spatio-Temporal positioning of objects

Audio-visual objects have both a spatial and a temporal extent. Complex audio-visual objects are constructed by combining appropriate scene description nodes to build up the scene graph. Audio-visual objects may be located in 2D or 3D space. Each audio-visual object has a local co-ordinate system. A local co-ordinate system is one in which the audio-visual object has a pre-defined (but possibly varying) spatio-temporal location and scale (size and orientation). Audio-visual objects are positioned in a scene by specifying a co-ordinate transformation from the object’s local co-ordinate system into another co-ordinate system defined by a parent node in the scene graph.
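As a rough illustration of positioning by nested co-ordinate transformations, the sketch below maps a point from an object's local system into the root system by applying each node's local-to-parent transform in turn. It assumes a 2D scene and a simple scale-rotate-translate transform; the `Transform2D` and `Node` classes and all numeric values are hypothetical and are not taken from the standard.

```python
import math
from dataclasses import dataclass
from typing import Optional, Tuple

Point = Tuple[float, float]


@dataclass
class Transform2D:
    """Hypothetical 2D placement: scale, then rotate, then translate."""
    tx: float = 0.0
    ty: float = 0.0
    angle: float = 0.0  # radians, counter-clockwise
    scale: float = 1.0

    def apply(self, p: Point) -> Point:
        x, y = p[0] * self.scale, p[1] * self.scale
        c, s = math.cos(self.angle), math.sin(self.angle)
        return (c * x - s * y + self.tx, s * x + c * y + self.ty)


@dataclass
class Node:
    """Scene-graph node holding the transform into its parent's system."""
    name: str
    local_to_parent: Transform2D
    parent: Optional["Node"] = None

    def to_scene(self, p: Point) -> Point:
        """Map a point from this node's local system up to the root."""
        node: Optional[Node] = self
        while node is not None:
            p = node.local_to_parent.apply(p)
            node = node.parent
        return p


scene = Node("scene", Transform2D())
furniture = Node("furniture", Transform2D(tx=10.0, ty=0.0), parent=scene)
desk = Node("desk", Transform2D(tx=0.0, ty=5.0, scale=2.0), parent=furniture)

# Local point (1, 1) on the desk: scaled to (2, 2), moved to (2, 7) in the
# furniture system, then to (12, 7) in the scene system.
print(desk.to_scene((1.0, 1.0)))  # (12.0, 7.0)
```

Because each node stores only the transform into its parent, moving the furniture group moves the desk with it, which is the practical benefit of defining positions relative to a parent node.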

0.1.3.3 Attributes of audio-visual objects

Scene description nodes expose a set of parameters through which aspects of their appearance and behavior can be controlled.

EXAMPLE: the volume of a sound; the color of a synthetic visual object; the source of a streaming video.

0.1.3.4 Behavior of audio-visual objects

ISO/IEC 14496-11 provides tools for enabling dynamic scene behavior and user interaction with the presented content. User interaction can be separated into two major categories: client-side and server-side. Client-side interaction is an integral part of the scene description described herein. Server-side interaction is not dealt with.

Client-side interaction involves content manipulation that is handled locally at the end-user’s terminal. It consists of the modification of attributes of scene objects according to specified user actions.

EXAMPLE: A user can click on a scene to start an animation or video sequence. The facilities for describing such interactive behavior are part of the scene description, thus ensuring the same behavior in all terminals conforming to ISO/IEC 14496-11.
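The idea of client-side interaction, where a user action changes an attribute of a scene object locally, can be sketched as follows. This is a generic event-dispatch illustration under stated assumptions: the `SceneObject` and `Terminal` classes, the `"click"` action name, and the `playing` attribute are all hypothetical and do not represent the mechanism the standard actually defines.

```python
from typing import Callable, Dict, List


class SceneObject:
    """Hypothetical scene object with a dictionary of controllable attributes."""

    def __init__(self, name: str, **attributes: object) -> None:
        self.name = name
        self.attributes = dict(attributes)


class Terminal:
    """Hypothetical terminal that maps user actions to local attribute changes."""

    def __init__(self) -> None:
        self._handlers: Dict[str, List[Callable[[], None]]] = {}

    def on(self, action: str, handler: Callable[[], None]) -> None:
        """Register a handler to run when the given user action occurs."""
        self._handlers.setdefault(action, []).append(handler)

    def dispatch(self, action: str) -> int:
        """Run all handlers for an action; return how many were run."""
        handlers = self._handlers.get(action, [])
        for handler in handlers:
            handler()
        return len(handlers)


video = SceneObject("video", playing=False)
terminal = Terminal()

# The behavior is declared once; any terminal running the same declaration
# reacts to the same action in the same way.
terminal.on("click", lambda: video.attributes.update(playing=True))

terminal.dispatch("click")
print(video.attributes["playing"])
```

Nothing leaves the terminal in this sketch: the click is handled and the attribute updated locally, which is the distinction the text draws between client-side and server-side interaction.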

0.2 Extensible MPEG-4 Textual Format

0.2.1 Overview

The Extensible MPEG-4 Textual format (XMT) is a framework (illustrated in Figure 3) for representing MPEG-4 scene description using a textual syntax. The XMT allows content authors to exchange their content with other authors, tools or service providers, and facilitates interoperability with both the Extensible 3D (X3D) format being developed by the Web3D Consortium and the Synchronized Multimedia Integration Language (SMIL) from the W3C.

[Figure placeholder. Labels recoverable from the original image: SMIL Player, Parse, SMIL, Compile, SVG, VRML Browser, XMT, MPEG-7, MPEG-4 Representation (e.g. mp4), MPEG-4 Player, X3D.]

Figure 3 — Overview of the XMT Framework

0.2.2 Interoperability of XMT

The XMT format can be interchanged between SMIL players, VRML players, and MPEG-4 players. The format can be parsed and played directly by a W3C SMIL player, preprocessed to Web3D X3D and played back by a VRML player, or compiled to an MPEG-4 representation such as MP4, which can then be played by an MPEG-4 player. Figure 3 gives a graphical description of the interoperability of the XMT.

0.2.3 Two-tier Architecture: XMT-A and XMT-Ω Formats

The XMT framework consists of two levels of textual syntax and semantics: the XMT-A format and the XMT-Ω format, abbreviated here as A and Ω, respectively, wherever this causes no confusion.

The XMT-A is an XML-based version of MPEG-4 content, which contains a subset of the X3D. Also contained in XMT-A is an MPEG-4 extension to the X3D to represent MPEG-4 specific features. The XMT-A provides a straightforward, one-to-one mapping between the textual and binary formats.

The XMT-Ω is a high-level abstraction of MPEG-4 features designed based on the W3C SMIL. The XMT provides a default mapping from Ω to A, because there is no deterministic mapping between the two, and it also provides content authors with an escape mechanism from Ω to A.

In addition, an XMT-C (Common) section contains the definition of elements and attributes that may be used within either XMT-A or XMT-Ω.

0.3 MPEG-J

0.3.1 Overview

MPEG-J is a flexible programmatic control system that represents an audio-visual session in a manner that allows the session to adapt to the operating characteristics when presented at the terminal. Two important characteristics are supported: first, the capability to allow graceful degradation under limited or time varying resources, and second, the ability to respond to user interaction and provide enhanced multimedia functionality.

More specifically, Clause 10 normatively defines:

- the format and delivery of Java byte code, by specifying the MPEG-J stream format and the delivery mechanism of such a stream (Java byte code and associated data);

- the MPEG-J Session and the MPEG-J application lifecycle; and

- the interactions and behavior of byte code, through the specification of Java APIs.

0.3.2 Organization of the MPEG-J specification

10.1 gives an overall architecture of the MPEG-J system. MPEG-J Session start-up is walked through in 10.2. The delivery of MPEG-J data to the terminal is specified in 10.3. 10.4 specifies the different categories of APIs that a program in the form of Java bytecode would use. 10.5 is an informative subclause on starting the Java Virtual Machine. The electronic annex attached to this document lists the normative MPEG-J APIs in HTML format. 10.6 illustrates the usage of MPEG-J APIs through a few examples.

----------------------

The International Organization for Standardization (ISO) and International Electrotechnical Commission (IEC) draw attention to the fact that it is claimed that compliance with this document may involve the use of patents.

The ISO and IEC take no position concerning the evidence, validity and scope of these patent rights.

The holders of these patent rights have assured the ISO and IEC that they are willing to negotiate licences under reasonable and non-discriminatory terms and conditions with applicants throughout the world. In this respect, the statements of the holders of these patent rights are registered with the ISO and IEC. Information may be obtained from the companies listed in Annex D.

Attention is drawn to the possibility that some of the elements of this document may be the subject of patent rights other than those identified in Annex D. ISO and IEC shall not be held responsible for identifying any or all such patent rights.

INTERNATIONAL STANDARD ISO/IEC 14496-11:2005(E)

Information technology — Coding of audio-visual objects — Part 11: Scene description and application engine

1 Scope

This part of ISO/IEC 14496 specifies:

1. the coded representation of the spatio-temporal positioning of audio-visual objects as well as their behavior in response to interaction (scene description);

2. the Extensible MPEG-4 Textual (XMT) format, a textual representation of the multimedia content described in ISO/IEC 14496 using the Extensible Markup Language (XML); and

3. a system level description of an application engine (format, delivery, lifecycle, and behavior of downloadable Java byte code applications).

2 Normative references

The following referenced documents are indispensable for the application of this document. For dated references, only the edition cited applies. For undated references, the latest edition of the referenced document (including any amendments) applies.

ISO 639-2:1998, Codes for the representation of names of languages — Part 2: Alpha-3 code

ISO 3166-1:1997, Codes for the representation of names of countries and their subdivisions — Part 1: Country codes

ISO 9613-1:1993, Acoustics — Attenuation of sound during propagation outdoors — Part 1: Calculation of the absorption of sound by the atmosphere

ISO/IEC 11172-2:1993, Information technology — Coding of moving pictures and associated audio for digital storage media at up to about 1,5 Mbit/s — Part 2: Video

ISO/IEC 11172-3:1993, Information technology — Coding of moving pictures and associated audio for digital storage media at up to about 1,5 Mbit/s — Part 3: Audio

ISO/IEC 13818-3:1998, Information technology — Generic coding of moving pictures and associated audio information — Part 3: Audio

ISO/IEC 13818-7:2004, Information technology — Generic coding of moving pictures and associated audio information — Part 7: Advanced Audio Coding (AAC)

ISO/IEC 14496-2:2004, Information technology — Coding of audio-visual objects — Part 2: Visual

ISO/IEC 14772-1:1997, Information technology — Computer graphics and image processing — The Virtual Reality Modeling Language — Part 1: Functional specification and UTF-8 encoding

ISO/IEC 14772-1:1997/Amd.1:2003, Information technology — Computer graphics and image processing — The Virtual Reality Modeling Language — Part 1: Functional specification and UTF-8 encoding — Amendment 1: Enhanced interoperability

ISO/IEC 16262:2002, Information technology — ECMAScript language specification

ISO/IEC 13818-2:2000, Information technology — Generic coding of moving pictures and associated audio information — Part 2: Video

ISO/IEC 10918-1:1994, Information technology — Digital compression and coding of continuous-tone still images: Requirements and guidelines

IEEE Std 754-1985, Standard for Binary Floating-Point Arithmetic

Addison-Wesley:September 1996, The Java Language Specification, by James Gosling, Bill Joy and Guy Steele, ISBN 0-201-63451-1

Addison-Wesley:September 1996, The Java Virtual Machine Specification, by T. Lindholm and F. Yellin, ISBN 0-201-63452-X

Addison-Wesley:July 1998, Java Class Libraries Vol. 1 The Java Class Libraries, Second Edition Volume 1, by Patrick Chan, Rosanna Lee and Douglas Kramer, ISBN 0-201-31002-3

Addison-Wesley:July 1998, Java Class Libraries Vol. 2 The Java Class Libraries, Second Edition Volume 2, by Patrick Chan and Rosanna Lee, ISBN 0-201-31003-1

Addison-Wesley, May 1996, Java API, The Java Application Programming Interface, Volume 1: Core Packages, by J. Gosling, F. Yellin and the Java Team, ISBN 0-201-63453-8

DAVIC 1.4.1 specification Part 9: Information Representation

ANSI/SMPTE 291M-1996, Television — Ancillary Data Packet and Space Formatting

SMPTE 315M-1999, Television — Camera Positioning Information Conveyed by Ancillary Data Packets

3 Additional reference

ISO/IEC 13522-6:1998, Information technology — Coding of multimedia and hypermedia information — Part 6: Support for enhanced interactive applications. This reference contains the full normative references to Java APIs and the Java Virtual Machine as described in the normative references above.

4 Terms and definitions

For the purposes of this document, the following terms and definitions apply.

4.1

Access Unit (AU)

individually accessible portion of data within an elementary stream

NOTE An access unit is the smallest data entity to which timing information can be attributed.

4.2

Alpha map

representation of the transparency parameters associated with a texture map

4.3

atom

object-oriented building block defined by a unique type identifier and length

4.4

audio-visual object

representation of a natural or synthetic object that has an audio and/or visual manifestation

NOTE The representation corresponds to a node or a group of nodes in the BIFS scene description. Each audio-visual object is associated with zero or more elementary streams using one or more object descriptors.

4.5

audio-visual scene (AV scene)

set of audio-visual objects together with scene description information that defines their spatial and temporal attributes including behaviors resulting from object and user interactions

4.6

Binary Format for Scene (BIFS)

coded representation of a parametric scene description format

4.7

buffer model

model that defines how a terminal complying with ISO/IEC 14496 manages the buffer resources that are needed to decode a presentation

4.8

byte aligned

position in a coded bit stream with a distance of a multiple of 8-bits from the first bit in the stream

4.9

chunk

contiguous set of samples stored for one stream

4.10

clock reference

special time stamp that conveys a reading of a time base

4.11

composition

process of applying scene description information in order to identify the spatio-temporal attributes and hierarchies of audio-visual objects

4.12

Composition Memory (CM)

random access memory that contains composition units

4.13

Composition Time Stamp (CTS)

indication of the nominal composition time of a composition unit

4.14

Composition Unit (CU)

individually accessible portion of the output that a decoder produces from access units

4.15

compression layer

layer of a system according to the specifications in ISO/IEC 14496 that translates between the coded representation of an elementary stream and its decoded representation

NOTE It incorporates the decoders.

4.16

container atom

atom whose sole purpose is to contain and group a set of related atoms

4.17

decoder

entity that translates between the coded representation of an elementary stream and its decoded representation

4.18

decoding buffer (DB)

buffer at the input of a decoder that contains access units

4.19

decoder configuration

configuration of a decoder for processing its elementary stream data by using information contained in its elementary stream descriptor

4.20

Decoding Time Stamp (DTS)

indication of the nominal decoding time of an access unit

4.21

delivery layer

generic abstraction for delivery mechanisms (computer networks, etc.) able to store or transmit a number of multiplexed elementary streams or M4Mux streams

4.22

descriptor

data structure that is used to describe particular aspects of an elementary stream or a coded audio-visual object

4.23

DMIF Application Interface (DAI)

interface specified in ISO/IEC 14496-6

NOTE It is used here to model the exchange of SL-packetized stream data and associated control information between the sync layer and the delivery layer.

4.24

Elementary Stream (ES)

consecutive flow of mono-media data from a single source entity to a single destination entity on the compression layer

4.25

Elementary Stream Descriptor (ESD)

structure contained in object descriptors that describes the encoding format, initialization information, sync layer configuration, and other descriptive information about the content carried in an elementary stream

4.26

Elementary Stream Interface (ESI)

conceptual interface modeling the exchange of elementary stream data and associated control information between the compression layer and the sync layer

4.27

M4Mux Channel (FMC)

label to differentiate between data belonging to different constituent streams within one M4Mux Stream

NOTE A sequence of data in one M4Mux channel within an M4Mux stream corresponds to one single SL-packetized stream.

4.28

M4Mux packet

smallest data entity managed by the M4Mux tool, consisting of a header and a payload

4.29

M4Mux stream

sequence of M4Mux Packets with data from one or more SL-packetized streams that are each identified by their own M4Mux channel

4.30

M4Mux tool

tool that allows the interleaving of data from multiple data streams

4.31

graphics profile

profile that specifies the permissible set of graphical elements of the BIFS tool that may be used in a scene description stream

NOTE BIFS comprises both graphical and scene description elements.

4.32

hint track

special track which contains instructions for packaging one or more tracks into a TransMux

NOTE It does not contain media data (an elementary stream).

4.33

hinter

tool that is run on a completed file to add one or more hint tracks to the file to facilitate streaming

4.34

inter

mode for coding parameters that uses previously coded parameters to construct a prediction

4.35

interaction stream

elementary stream that conveys user interaction information

4.36

intra

mode for coding parameters that does not make reference to previously coded parameters to perform the encoding

4.37

initial object descriptor

special object descriptor that allows the receiving terminal to gain initial access to portions of content encoded according to ISO/IEC 14496

NOTE It conveys profile and level information to describe the complexity of the content.

4.38

Intellectual Property Identification (IPI)

unique identification of one or more elementary streams corresponding to parts of one or more audio-visual objects

4.39

Intellectual Property Management and Protection System (IPMP)

generic term for mechanisms and tools to manage and protect intellectual property

NOTE Only the interface to such systems is normatively defined.

4.40

media node

following list of time dependent nodes that refers to a media stream through a URL field: AnimationStream, AudioBuffer, AudioClip, AudioSource, Inline, MovieTexture

4.41

media stream

one or more elementary streams whose ES descriptors are aggregated in one object descriptor and that are jointly decoded to form a representation of an AV object

4.42

media time line

time line expressing normal play back time of a media stream

4.43

movie atom

container atom whose sub-atoms define the meta-data for a presentation (‘moov’)

4.44

movie data atom

container atom which can hold the actual media data for a presentation (‘mdat’)

4.45

MP4 file

name of the file format described in this specification

4.46

Object Clock Reference (OCR)

clock reference that is used by a decoder to recover the time base of the encoder of an elementary stream

4.47

Object Content Information (OCI)

additional information about content conveyed through one or more elementary streams

NOTE It is either aggregated to individual elementary stream descriptors or is itself conveyed as an elementary stream.

4.48

Object Descriptor (OD)

descriptor that aggregates one or more elementary streams by means of their elementary stream descriptors and defines their logical dependencies

4.49

Object Descriptor Command

command that identifies the action to be taken on a list of object descriptors or object descriptor IDs, e.g. update or remove

4.50

Object Descriptor Profile

profile that specifies the configurations of the object descriptor tool and the sync layer tool that are allowed

4.51

Object Descriptor Stream

elementary stream that conveys object descriptors encapsulated in object descriptor commands

4.52

Object Time Base (OTB)

time base valid for a given elementary stream, and hence for its decoder

NOTE The OTB is conveyed to the decoder via object clock references. All time stamps relating to this object’s decoding process refer to this time base.

4.53

Parametric Audio Decoder

set of tools for representing and decoding speech signals coded at bit rates between 6 Kbps and 16 Kbps, according to the specifications in ISO/IEC 14496-3

4.54

Quality of Service (QoS)

performance that an elementary stream requests from the delivery channel through which it is transported

NOTE QoS is characterized by a set of parameters (e.g. bit rate, delay jitter, bit error rate, etc.).

4.55

random access

process of beginning to read and decode a coded representation at an arbitrary point within the elementary stream

4.56

reference point

location in the data or control flow of a system that has some defined characteristics

4.57

rendering

action of transforming a scene description and its constituent audio-visual objects from a common representation space to a specific presentation device (i.e., speakers and a viewing window)

4.58

rendering area

portion of the display device’s screen into which the scene description and its constituent audio-visual objects are to be rendered

4.59

sample

access unit for an elementary stream

NOTE In hint tracks, a sample defines the formation of one or more TransMux packets.

4.60

sample table

packed directory for the timing and physical layout of the samples in a track

4.61

scene description

information that describes the spatio-temporal positioning of audio-visual objects as well as their behavior resulting from object and user interactions

NOTE The scene description makes reference to elementary streams with audio-visual data by means of pointers to object descriptors.

4.62

scene description stream

elementary stream that conveys scene description information

4.63

scene graph elements

elements of the BIFS tool that relate only to the structure of the audio-visual scene (spatio-temporal positioning of audio-visual objects as well as their behavior resulting from object and user interactions) excluding the audio, visual and graphics nodes as specified in ISO/IEC 14496-11

4.64

scene graph profile

profile that defines the permissible set of scene graph elements of the BIFS tool that may be used in a scene description stream

NOTE BIFS comprises both graphical and scene description elements.

4.65

seekable

media stream is seekable if it is possible to play back the stream from any position

4.66

SL-Packetized Stream (SPS)

sequence of sync layer packets that encapsulate one elementary stream

4.67

stream object

media stream or a segment thereof

NOTE A stream object is referenced through a URL field in the scene in the form “OD:n” or “OD:n#”.

4.68

structured audio

method of describing synthetic sound effects and music as defined by ISO/IEC 14496-3

4.69

Sync Layer (SL)

layer to adapt elementary stream data for communication across the DMIF Application Interface, providing timing and synchronization information, as well as fragmentation and random access information

NOTE The sync layer syntax is configurable and can be configured to be empty.

4.70

Sync Layer Configuration

configuration of the sync layer syntax for a particular elementary stream using information contained in its elementary stream descriptor

4.71

Sync Layer Packet (SL-Packet)

smallest data entity managed by the sync layer consisting of a configurable header and a payload

NOTE The payload may consist of one complete access unit or a partial access unit.

4.72

Syntactic Description Language (SDL)

language defined by ISO/IEC 14496-1 that allows the description of a bitstream’s syntax

4.73

Systems Decoder Model (SDM)

model that provides an abstract view of the behavior of a terminal compliant to ISO/IEC 14496

NOTE It consists of the buffer model and the timing model.

4.74

System Time Base (STB)

time base of the terminal

NOTE Its resolution is implementation-dependent. All operations in the terminal are performed according to this time base.

4.75

terminal

system that sends, or receives and presents the coded representation of an interactive audio-visual scene as defined by ISO/IEC 14496-11

NOTE It can be a stand alone system, or part of an application system complying with ISO/IEC 14496.

4.76

time base

notion of a clock, equivalent to a counter that is periodically incremented

4.77

timing model

model that specifies the semantic meaning of timing information, how it is incorporated (explicitly or implicitly) in the coded representation of information, and how it can be recovered at the receiving terminal

4.78

time stamp

indication of a particular time instant relative to a time base

4.79

track

collection of related samples in an MP4 file

NOTE For media data, a track corresponds to an elementary stream. For hint tracks, a track corresponds to a TransMux channel.

4.80

interaction stream

elementary stream that conveys user interaction information

5 Abbreviations and Symbols

AFX Animation Framework eXtension

AU Access Unit

AV Audio-visual

BIFS Binary Format for Scene

CM Composition Memory

CTS Composition Time Stamp

CU Composition Unit

DAI DMIF Application Interface (see ISO/IEC 14496-6)

DB Decoding Buffer

DTS Decoding Time Stamp

ES Elementary Stream

ESI Elementary Stream Interface

ESID Elementary Stream Identifier

FAP Facial Animation Parameters

FAPU FAP Units

FDP Facial Definition Parameters

FIG FAP Interpolation Graph

FIT FAP Interpolation Table

FMC M4Mux Channel

FMOD The floating point modulo (remainder) operator which returns the remainder of x/y such that:

fmod(x/y) = x – k*y, where k is an integer,

sgn( fmod(x/y) ) = sgn(x), and

abs( fmod(x/y) ) < abs(y)

IP Intellectual Property

IPI Intellectual Property Identification

IPMP Intellectual Property Management and Protection

NCT Node Coding Tables

NDT Node Data Type

NINT Nearest INTeger value

OCI Object Content Information

OCR Object Clock Reference

OD Object Descriptor

ODID Object Descriptor Identifier

OTB Object Time Base

PLL Phase Locked Loop

QoS Quality of Service

SAOL Structured Audio Orchestra Language

SASL Structured Audio Score Language

SDL Syntactic Description Language

SDM Systems Decoder Model

SL Synchronization Layer

SL-Packet Synchronization Layer Packet

SPS SL-Packetized Stream

STB System Time Base

TTS Text-To-Speech

URL Uniform Resource Locator

VOP Video Object Plane

VRML Virtual Reality Modeling Language
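
The three conditions given for FMOD in the list above can be checked with a short sketch. It assumes that k is obtained by truncating x/y toward zero, which is one way to satisfy the sign and magnitude conditions; the function name `fmod_spec` is hypothetical. Python's standard `math.fmod` follows the same convention, whereas the built-in `%` operator takes the sign of y.

```python
import math


def fmod_spec(x: float, y: float) -> float:
    """Remainder of x/y that keeps the sign of x and has magnitude below |y|."""
    k = math.trunc(x / y)  # integer k, truncated toward zero (assumption of this sketch)
    return x - k * y


positive = fmod_spec(7.5, 2.0)    # 7.5 - 3 * 2.0
negative = fmod_spec(-7.5, 2.0)   # -7.5 - (-3) * 2.0, sign follows x
python_mod = (-7.5) % 2.0         # built-in operator: sign follows y instead
```

For operands whose quotient is not exactly representable, the subtraction can accumulate rounding error, so `math.fmod` is the safer choice in practice.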

6 Conventions

For the purpose of unambiguously defining the syntax of the various bitstream components defined by the normative parts of ISO/IEC 14496, a syntactic description language is used. This language allows the specification of the mapping of the various parameters in a binary format as well as how they are placed in a serialized bitstream. The definition of the language is provided in ISO/IEC 14496-1.

7 MPEG-4 Systems Node Semantics

7.1 Scene Description

7.1.1 Concepts

7.1.1.1 BIFS Elementary Streams

7.1.1.1.1 Overview

BIFS is a compact binary format representing a pre-defined set of audio-visual objects, their behaviors, and their spatio-temporal relationships. The BIFS scene description may, in general, be time-varying. Consequently, BIFS data is carried in a dedicated elementary stream and is subject to the provisions of the systems decoder model (see 8.2). Portions of BIFS data that become valid at a given point in time are contained in BIFS CommandFrames or AnimationFrames and are delivered within time-stamped access units. Note that the initial BIFS scene is sent as a BIFS-Command, although it is not required, in general, that a BIFS CommandFrame contains a complete BIFS scene description.
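
The idea that portions of BIFS data become valid at a given point in time can be illustrated informally as follows. The sketch assumes a simplified model in which each access unit carries one time stamp and a list of opaque commands; the names `AccessUnit`, `time_stamp`, `commands` and `commands_valid_at`, and the string placeholders, are hypothetical and do not reflect the normative BIFS syntax or the systems decoder model.

```python
from dataclasses import dataclass, field


@dataclass(order=True)
class AccessUnit:
    time_stamp: float                      # seconds on a hypothetical time base
    commands: list = field(compare=False)  # opaque stand-ins for decoded commands


def commands_valid_at(access_units, now):
    """Return, in time order, the commands of all access units with time stamp <= now."""
    due = sorted(au for au in access_units if au.time_stamp <= now)
    return [cmd for au in due for cmd in au.commands]


# The first unit stands for the initial scene; later units modify it (illustrative only).
units = [
    AccessUnit(2.0, ["update"]),
    AccessUnit(0.0, ["initial scene"]),
    AccessUnit(5.0, ["later update"]),
]
valid_now = commands_valid_at(units, now=2.0)
```

In this simplified model the unit stamped 5.0 is held back until the time base reaches that value.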

7.1.1.1.2 BIFS Decoder Configuration

BIFS configuration information is contained in a BIFSConfig (see 8.5.2) syntax structure, which is transmitted as DecoderSpecificInfo for the BIFS elementary stream in the corresponding object descriptor (see 7.2.6.7, ISO/IEC 14496-1). This gives basic information that must be known by the terminal in order to parse the BIFS elementary stream. In particular, it indic

...

INTERNATIONAL STANDARD ISO/IEC 14496-11

First edition 2005-12-15

Information technology — Coding of audio-visual objects — Part 11: Scene description and application engine

Technologies de l'information — Codage des objets audiovisuels — Partie 11: Description de scène et moteur d'application

Reference number

©

ISO/IEC 2005

This CD-ROM contains:

1) the publication ISO/IEC 14496-11:2005 in portable document format (PDF), which can be viewed using Adobe® Acrobat® Reader;

2) electronic attachments containing: Extensible MPEG-4 Textual (XMT) files using the Extensible Markup Language (XML); normative MPEG-J Application Programming Interfaces (APIs) in Hypertext Markup Language (HTML); and MPEG-4 Syste

...
