ISO/IEC 23090-12:2025

Information technology — Coded representation of immersive media — Part 12: MPEG immersive video

This document specifies the syntax, semantics and decoding processes for MPEG immersive video (MIV), as an extension of ISO/IEC 23090-5. It provides support for playback of a three-dimensional (3D) scene within a limited range of viewing positions and orientations, with 6 Degrees of Freedom (6DoF).

Technologies de l'information — Représentation codée de média immersifs — Partie 12: Vidéo immersive MPEG

General Information

- Status: Published

- Publication Date: 31-Aug-2025

- Current Stage: 6060 - International Standard published

- Start Date: 01-Sep-2025

- Due Date: 14-Aug-2026

- Completion Date: 01-Sep-2025

Relations

- Effective Date: 19-Aug-2023

Overview - ISO/IEC 23090-12:2025 (MPEG Immersive Video)

ISO/IEC 23090-12:2025 specifies the syntax, semantics, and decoding processes for MPEG Immersive Video (MIV) as an extension of ISO/IEC 23090-5. The standard enables coded representation and playback of three-dimensional (3D) scenes with six degrees of freedom (6DoF) within a limited range of viewing positions and orientations. It defines bitstream formats, V3C characteristics, and post‑decoding workflows needed to store, distribute, and render immersive video.

Key Topics - technical scope and requirements

- Bitstream architecture and formats: V3C unit and NAL unit formats, partitioning of atlas frames into tiles, mapping of views to V3C components.

- Syntax and semantics: Tabular syntax definitions, descriptor and function specifications, and semantic rules that govern decoding and interpretation.

- Decoding and reconstruction: Detailed decoding processes for atlas data, occupancy/geometry/attribute/packed video, and common atlas data; pre- and post-reconstruction steps.

- Adaptation and parsing: Sub-bitstream extraction, group extraction, parsing processes for playback adaptation.

- Profiles, tiers and levels: Normative annex for conformance classes and capabilities.

- Supplemental and usability metadata: Annexes for supplemental enhancement information and volumetric usability information to aid rendering and delivery.

- New/extended functionality (2025 edition): Support for colourized geometry, capture device metadata, patch margins, background views and static background atlases, decoder-side depth estimation, chroma dynamic range modification, and additional depth quantization options.

- Interoperability considerations: References to related video coding technologies and normative cross-references (e.g., AVC, HEVC, VVC, V3C/V‑PCC).

Applications - practical use cases

- VR/AR playback and streaming: Deliver immersive experiences that let viewers translate and rotate their viewpoint (6DoF), in both real-time and on-demand streaming scenarios.

- Volumetric content distribution: Efficient storage and delivery of multi-view and multi-camera captures for virtual production and telepresence.

- Device and player implementation: Decoder and renderer developers implement the MIV bitstream syntax and decoding pipelines to enable interoperable immersive media players.

- Content creation and tooling: Production tools for multi-camera capture, atlas generation, and bitstream packaging conform to the standard for cross-platform compatibility.

- Research and testing: Standardized conformance tests, performance evaluation, and feature development for immersive codecs and renderers.

Related Standards

- ISO/IEC 23090-5 (V3C and V‑PCC) - foundation for volumetric video and atlas-based coding

- ISO/IEC 23090-3 (Versatile Video Coding, VVC)

- ISO/IEC 23008-2 (HEVC) and ISO/IEC 14496-10 (AVC) - referenced video codec technologies used in implementations

Keywords: ISO/IEC 23090-12:2025, MPEG immersive video, MIV, 6DoF, immersive video, V3C, bitstream format, decoding, atlas, NAL, volumetric video.

Frequently Asked Questions

ISO/IEC 23090-12:2025 is a standard published jointly by ISO (the International Organization for Standardization) and IEC (the International Electrotechnical Commission). Its full title is "Information technology — Coded representation of immersive media — Part 12: MPEG immersive video". It specifies the syntax, semantics and decoding processes for MPEG immersive video (MIV), as an extension of ISO/IEC 23090-5, supporting 6DoF playback of a 3D scene within a limited range of viewing positions and orientations.

ISO/IEC 23090-12:2025 is classified under the following ICS (International Classification for Standards) categories: 35.040.40 - Coding of audio, video, multimedia and hypermedia information. The ICS classification helps identify the subject area and facilitates finding related standards.

ISO/IEC 23090-12:2025 has the following relationship with other standards: it is linked to ISO/IEC 23090-12:2023, the first edition, which it cancels and replaces. Understanding these relationships helps ensure you are using the most current and applicable version of the standard.

Standards Content (Sample)

International Standard

ISO/IEC 23090-12

Second edition

2025-09

Information technology — Coded representation of immersive media — Part 12: MPEG immersive video

Technologies de l'information — Représentation codée de média immersifs — Partie 12: Vidéo immersive MPEG

Reference number

© ISO/IEC 2025

All rights reserved. Unless otherwise specified, or required in the context of its implementation, no part of this publication may be reproduced or utilized otherwise in any form or by any means, electronic or mechanical, including photocopying, or posting on the internet or an intranet, without prior written permission. Permission can be requested from either ISO at the address below or ISO’s member body in the country of the requester.

ISO copyright office

CP 401 • Ch. de Blandonnet 8

CH-1214 Vernier, Geneva

Phone: +41 22 749 01 11

Email: copyright@iso.org

Website: www.iso.org

Published in Switzerland

© ISO/IEC 2025 – All rights reserved

ii

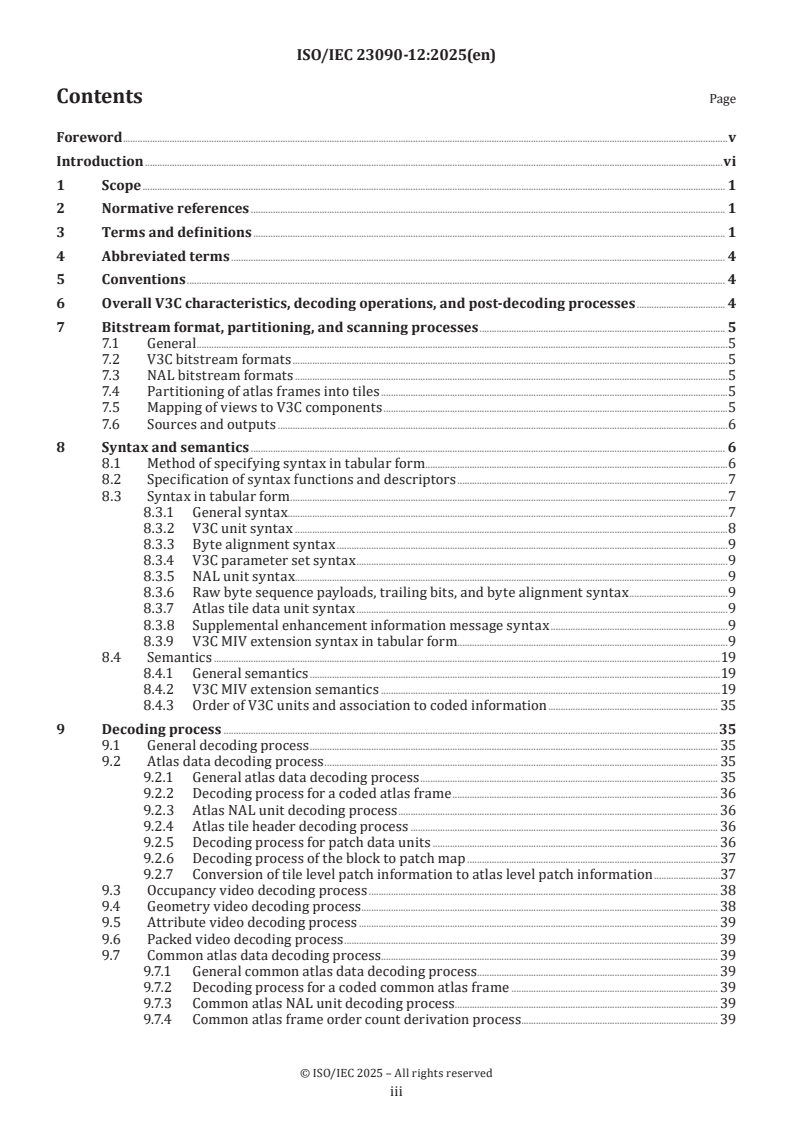

Contents Page

Foreword .v

Introduction .vi

1 Scope . 1

2 Normative references . 1

3 Terms and definitions . 1

4 Abbreviated terms . 4

5 Conventions . 4

6 Overall V3C characteristics, decoding operations, and post-decoding processes . 4

7 Bitstream format, partitioning, and scanning processes . 5

7.1 General .5

7.2 V3C bitstream formats .5

7.3 NAL bitstream formats .5

7.4 Partitioning of atlas frames into tiles .5

7.5 Mapping of views to V3C components .5

7.6 Sources and outputs .6

8 Syntax and semantics . 6

8.1 Method of specifying syntax in tabular form . .6

8.2 Specification of syntax functions and descriptors .7

8.3 Syntax in tabular form .7

8.3.1 General syntax.7

8.3.2 V3C unit syntax .8

8.3.3 Byte alignment syntax .9

8.3.4 V3C parameter set syntax .9

8.3.5 NAL unit syntax . .9

8.3.6 Raw byte sequence payloads, trailing bits, and byte alignment syntax .9

8.3.7 Atlas tile data unit syntax .9

8.3.8 Supplemental enhancement information message syntax .9

8.3.9 V3C MIV extension syntax in tabular form.9

8.4 Semantics .19

8.4.1 General semantics .19

8.4.2 V3C MIV extension semantics .19

8.4.3 Order of V3C units and association to coded information . 35

9 Decoding process .35

9.1 General decoding process . 35

9.2 Atlas data decoding process . 35

9.2.1 General atlas data decoding process . 35

9.2.2 Decoding process for a coded atlas frame . 36

9.2.3 Atlas NAL unit decoding process . 36

9.2.4 Atlas tile header decoding process . 36

9.2.5 Decoding process for patch data units . 36

9.2.6 Decoding process of the block to patch map .37

9.2.7 Conversion of tile level patch information to atlas level patch information .37

9.3 Occupancy video decoding process . 38

9.4 Geometry video decoding process . 38

9.5 Attribute video decoding process . 39

9.6 Packed video decoding process . 39

9.7 Common atlas data decoding process. 39

9.7.1 General common atlas data decoding process. 39

9.7.2 Decoding process for a coded common atlas frame . 39

9.7.3 Common atlas NAL unit decoding process . . 39

9.7.4 Common atlas frame order count derivation process . . 39

© ISO/IEC 2025 – All rights reserved

iii

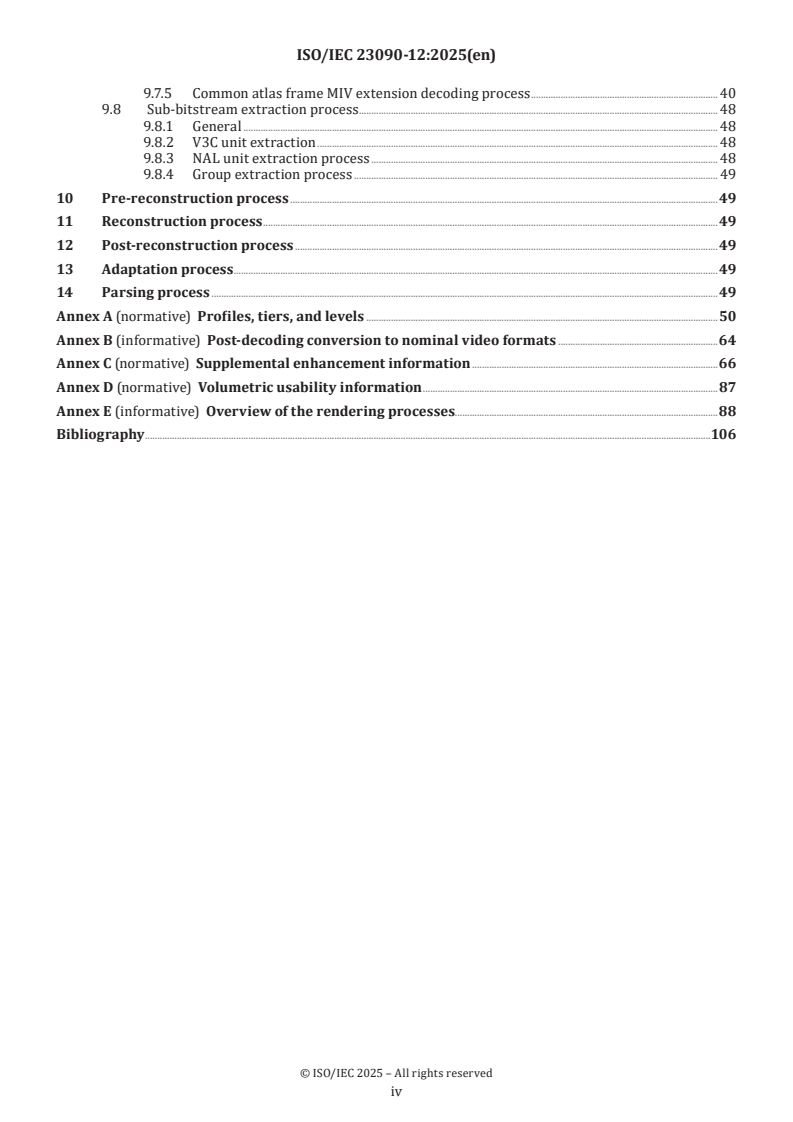

9.7.5 Common atlas frame MIV extension decoding process . 40

9.8 Sub-bitstream extraction process . 48

9.8.1 General . 48

9.8.2 V3C unit extraction . 48

9.8.3 NAL unit extraction process . 48

9.8.4 Group extraction process . 49

10 Pre-reconstruction process .49

11 Reconstruction process .49

12 Post-reconstruction process .49

13 Adaptation process .49

14 Parsing process .49

Annex A (normative) Profiles, tiers, and levels .50

Annex B (informative) Post-decoding conversion to nominal video formats .64

Annex C (normative) Supplemental enhancement information .66

Annex D (normative) Volumetric usability information .87

Annex E (informative) Overview of the rendering processes.88

Bibliography .106

Foreword

ISO (the International Organization for Standardization) and IEC (the International Electrotechnical Commission) form the specialized system for worldwide standardization. National bodies that are members of ISO or IEC participate in the development of International Standards through technical committees established by the respective organization to deal with particular fields of technical activity. ISO and IEC technical committees collaborate in fields of mutual interest. Other international organizations, governmental and non-governmental, in liaison with ISO and IEC, also take part in the work.

The procedures used to develop this document and those intended for its further maintenance are described in the ISO/IEC Directives, Part 1. In particular, the different approval criteria needed for the different types of document should be noted. This document was drafted in accordance with the editorial rules of the ISO/IEC Directives, Part 2 (see www.iso.org/directives or www.iec.ch/members_experts/refdocs).

ISO and IEC draw attention to the possibility that the implementation of this document may involve the use of (a) patent(s). ISO and IEC take no position concerning the evidence, validity or applicability of any claimed patent rights in respect thereof. As of the date of publication of this document, ISO and IEC had received notice of (a) patent(s) which may be required to implement this document. However, implementers are cautioned that this may not represent the latest information, which may be obtained from the patent database available at www.iso.org/patents and https://patents.iec.ch. ISO and IEC shall not be held responsible for identifying any or all such patent rights.

Any trade name used in this document is information given for the convenience of users and does not constitute an endorsement.

For an explanation of the voluntary nature of standards, the meaning of ISO specific terms and expressions related to conformity assessment, as well as information about ISO's adherence to the World Trade Organization (WTO) principles in the Technical Barriers to Trade (TBT), see www.iso.org/iso/foreword.html.

In the IEC, see www.iec.ch/understanding-standards.

This document was prepared by Joint Technical Committee ISO/IEC JTC 1, Information technology, Subcommittee SC 29, Coding of audio, picture, multimedia and hypermedia information.

This second edition cancels and replaces the first edition (ISO/IEC 23090-12:2023), which has been technically revised.

The main changes are as follows:

— Additional functionality has been added: colourized geometry, capture device information, patch margins, background views, static background atlases, support for decoder-side depth estimation, chroma dynamic range modification, piecewise linear normalized disparity quantization, and linear depth quantization.

A list of all parts in the ISO/IEC 23090 series can be found on the ISO and IEC websites.

Any feedback or questions on this document should be directed to the user’s national standards body. A complete listing of these bodies can be found at www.iso.org/members.html and www.iec.ch/national-committees.

Introduction

This document was developed to support compression of immersive video content, in which a real or virtual 3D scene is captured by multiple real or virtual cameras. The use of this document enables storage and distribution of immersive video content over existing and future networks, for playback with 6 degrees of freedom of view position and orientation.

International Standard ISO/IEC 23090-12:2025(en)

Information technology — Coded representation of immersive media — Part 12: MPEG immersive video

1 Scope

This document specifies the syntax, semantics and decoding processes for MPEG immersive video (MIV), as an extension of ISO/IEC 23090-5. It provides support for playback of a three-dimensional (3D) scene within a limited range of viewing positions and orientations, with 6 Degrees of Freedom (6DoF).

2 Normative references

The following documents are referred to in the text in such a way that some or all of their content constitutes requirements of this document. For dated references, only the edition cited applies. For undated references, the latest edition of the referenced document (including any amendments) applies.

ISO/IEC 14496-10:2022, Information technology — Coding of audio-visual objects — Part 10: Advanced video coding

ISO/IEC 23008-2:2023, Information technology — High efficiency coding and media delivery in heterogeneous environments — Part 2: High efficiency video coding

ISO/IEC 23090-3:2021, Information technology — Coded representation of immersive media — Part 3: Versatile video coding

ISO/IEC 23090-5:2025, Information technology — Coded representation of immersive media — Part 5: Visual Volumetric video-based coding (V3C) and Video-based point cloud compression (V-PCC)

3 Terms and definitions

For the purposes of this document, the terms and definitions given in ISO/IEC 23090-5 and the following apply.

ISO and IEC maintain terminology databases for use in standardization at the following addresses:

— ISO Online browsing platform: available at https://www.iso.org/obp

— IEC Electropedia: available at https://www.electropedia.org/

3.1

background view

view that may be synthesized or acquired to include scene elements that would otherwise be excluded by other scene elements that are closer to the view position, and may consist of V3C component frames that are static or rarely-changing parts of an MIV scene

3.2

capture device

hardware capable of observing a scene and thereby acquiring one or more video components

3.3

capture device information

CDI

collection of one or more physical models with the aim of compensating for zero or more undesirable acquisition aspects inherent to applied capture devices (3.2)

3.4

coded MIV sequence

coded V3C sequence conforming to the constraints specified in this document

3.5

decoding process

process specified in this document that reads a bitstream and derives patch data and related information that can be used to render a viewport (3.30)

3.6

decoding order

order in which syntax elements are processed by the decoding process (3.5)

3.7

distortion parameters

physical model of the lens distortion of a sensor (3.18) of a capture device (3.2)

3.8

field of view

FOV

angular region of the observable world in captured/recorded content or in a physical display device

3.9

MIV access unit

V3C composition unit that is a set of all sub-bitstream access units (3.21) that share the same decoding order (3.6) count

3.10

MIV coded sub-bitstream sequence

sub-bitstream IRAP access unit (3.22) followed by zero or more sub-bitstream access units (3.21)

Note 1 to entry: An MIV coded sub-bitstream sequence is a coded sub-bitstream sequence conforming to the constraints specified in this document.

3.11

MIV IRAP access unit

MIV access unit (3.9) for which all sub-bitstream access units (3.21) are sub-bitstream IRAP access units (3.22)

Note 1 to entry: An MIV IRAP access unit is a V3C IRAP composition unit conforming to the constraints specified in this document.

3.12

MIV toolset profile component

toolset profile component with ptl_profile_toolset_idc equal to 64 (MIV Main), ptl_profile_toolset_idc equal to 65 (MIV Extended), ptl_profile_toolset_idc equal to 66 (MIV Geometry Absent), ptl_profile_toolset_idc equal to 67 (MIV 2), or ptl_profile_toolset_idc equal to 68 (MIV Simple MPI)

Note 1 to entry: The definition can be extended to accommodate future toolset profile components.

3.13

multi-plane image

MPI

set of pairs of texture and transparency attribute frames, each associated with an implicit constant geometry frame

3.14

normalized disparity

inverse of the depth value associated with a view sample (3.26), expressed in reciprocal scene units (scene unit⁻¹)

3.15

light source

physical or logical component of a capture device (3.2) for active illumination of the captured scene

3.16

pivot

point corresponding to the junction of two consecutive line segments in a piece-wise linear scaling of the inverse of depth values

3.17

renderer

embodiment of a process to create a viewport (3.30) from a volumetric frame corresponding to a viewing orientation (3.27) and viewing position (3.28)

3.18

sensor

physical or logical component of a capture device (3.2) that provides a video component

3.19

source

one or more video sequences, each containing geometry or an attribute such as texture and transparency information before encoding

3.20

source view

source (3.19) video material before encoding that corresponds to the format of a view (3.23), which may have been acquired by capture of a 3D scene by a real or virtual camera

3.21

sub-bitstream access unit

partition of a sub-bitstream that has a certain decoding order (3.6) count

Note 1 to entry: A sub-bitstream access unit is a sub-bitstream composition unit.

3.22

sub-bitstream IRAP access unit

sub-bitstream access unit (3.21) that forms an independent random-access point for the sub-bitstream

Note 1 to entry: A sub-bitstream IRAP access unit is a sub-bitstream IRAP composition unit.

3.23

view

2D rectangular arrays of view samples (3.26) consisting of attribute frames and corresponding geometry frame representing the projection of a volumetric frame onto a surface using view parameters (3.24)

3.24

view parameters

parameters of the projection used to generate a view (3.23) from a volumetric frame, including intrinsic and

extrinsic parameters

3.25

view parameters list

listing of one or more view parameters (3.24)

3.26

view sample

position on the rectangular frame associated with a view (3.23)

3.27

viewing orientation

unit quaternion representing the orientation of a user who is consuming the visual content

3.28

viewing position

triple of x, y, z characterizing the position in the Cartesian coordinates of a user who is consuming the visual content

3.29

viewing space

domain constraints for an intended viewport (3.30) rendering

Note 1 to entry: The domain is defined in the 3D global space and related to the viewing orientation (3.27); it defines a scale between 0 and 1 for every point in space for a given direction of the viewport (3.30), to be used by the application.

3.30

viewport

view (3.23) suitable for display and viewing

4 Abbreviated terms

For the purposes of this document, the abbreviated terms given in ISO/IEC 23090-5 and the following apply.

CSG constructive solid geometry

ERP equirectangular projection

MIV MPEG immersive video

OMAF Omnidirectional media format

5 Conventions

The specifications in ISO/IEC 23090-5:2025, Clause 5 and its subclauses apply with the following additions.

Additions to subclause 5.8 of ISO/IEC 23090-5:2025:

Cos( x ) the trigonometric cosine function operating on an argument x in units of radians

Dot( x, y ) dot product function, known also as scalar product function, operating on two vectors x and y

Norm( x ) the Euclidean norm of a vector x, defined as Norm( x ) = Sqrt( Dot( x, x ) )

Sin( x ) the trigonometric sine function operating on an argument x in units of radians

π the ratio of a circle's circumference to its diameter
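The mathematical functions added to subclause 5.8 map directly onto standard library calls. The following is a minimal Python sketch. It assumes that vectors are plain sequences of floats, which the document does not specify.

```python
import math
from typing import Sequence

PI = math.pi  # π, the ratio of a circle's circumference to its diameter


def Cos(x: float) -> float:
    """Trigonometric cosine of x, with x in radians."""
    return math.cos(x)


def Sin(x: float) -> float:
    """Trigonometric sine of x, with x in radians."""
    return math.sin(x)


def Dot(x: Sequence[float], y: Sequence[float]) -> float:
    """Dot (scalar) product of two vectors of equal length."""
    if len(x) != len(y):
        raise ValueError("vectors must have the same length")
    return sum(a * b for a, b in zip(x, y))


def Norm(x: Sequence[float]) -> float:
    """Euclidean norm: Sqrt( Dot( x, x ) )."""
    return math.sqrt(Dot(x, x))
```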

6 Overall V3C characteristics, decoding operations, and post-decoding processes

The specifications in ISO/IEC 23090-5:2025, Clause 6 apply.

The decoded video frames can benefit from the application of additional transformations, as described in Annex B, before any reconstruction operations. For example, the different components can be time-aligned and converted to a nominal video format.

Informative hypothetical transcoding and rendering processes are available in Annex E.

7 Bitstream format, partitioning, and scanning processes

7.1 General

The specifications in ISO/IEC 23090-5:2025, subclause 7.1 apply.

7.2 V3C bitstream formats

The specifications in ISO/IEC 23090-5:2025, subclause 7.2 apply.

7.3 NAL bitstream formats

The specifications in ISO/IEC 23090-5:2025, subclause 7.3 apply.

7.4 Partitioning of atlas frames into tiles

The specifications in ISO/IEC 23090-5:2025, subclause 7.4 apply.

7.5 Mapping of views to V3C components

This subclause describes the concept of views and their mapping to patches in V3C components.

A view represents a field of view of a volumetric frame for a particular view position and orientation. Each view, at a given time instance, may be represented by one 2D frame providing geometry information plus one 2D frame per attribute, providing attribute information, and occupancy information that may either be embedded within geometry or represented explicitly as a 2D frame. Each view may use the equirectangular, perspective, or orthographic projection format. The atlas components of a view use the same projection format. The projection ID of a patch maps to the view ID of a view. Multiple patches may map to the same view.

The volumetric frame and all views each have an associated reference frame. Cartesian coordinates of 3D points can therefore be expressed according to the reference frame of the scene, as represented by the volumetric frame, or according to the reference frame of any view. The camera extrinsic parameters (position and orientation) of the views specify the relations between their reference frames, enabling switching of the 3D coordinate system to represent a 3D point from one reference frame attached to a given view to another reference frame attached to another view.
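The following Python sketch illustrates switching between the reference frames of two views. It treats each view's extrinsics as a position in the scene frame plus a unit quaternion that rotates view-frame vectors into the scene frame. The (w, x, y, z) ordering, the rotation direction, and all helper names are assumptions made for illustration. The normative conventions are given by the camera extrinsics semantics and ISO/IEC 23090-5.

```python
from dataclasses import dataclass
from typing import Tuple

Vec3 = Tuple[float, float, float]
Quat = Tuple[float, float, float, float]  # (w, x, y, z), unit norm


def cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def rotate(q: Quat, v: Vec3) -> Vec3:
    """Rotate v by unit quaternion q (equivalent to q * v * conj(q))."""
    w, x, y, z = q
    u = (x, y, z)
    t = tuple(2.0 * c for c in cross(u, v))
    c = cross(u, t)
    return (v[0] + w * t[0] + c[0], v[1] + w * t[1] + c[1], v[2] + w * t[2] + c[2])


@dataclass(frozen=True)
class Extrinsics:
    position: Vec3  # view position in the scene reference frame
    rotation: Quat  # ASSUMPTION: rotates view-frame vectors into the scene frame


def view_to_scene(ext: Extrinsics, p: Vec3) -> Vec3:
    r = rotate(ext.rotation, p)
    return (r[0] + ext.position[0], r[1] + ext.position[1], r[2] + ext.position[2])


def scene_to_view(ext: Extrinsics, p: Vec3) -> Vec3:
    w, x, y, z = ext.rotation
    d = (p[0] - ext.position[0], p[1] - ext.position[1], p[2] - ext.position[2])
    return rotate((w, -x, -y, -z), d)  # the conjugate quaternion is the inverse rotation


def view_to_view(src: Extrinsics, dst: Extrinsics, p: Vec3) -> Vec3:
    """Re-express point p, given in the src view's frame, in the dst view's frame."""
    return scene_to_view(dst, view_to_scene(src, p))
```

Routing through the scene frame keeps each view described by a single set of extrinsics. This avoids storing a pairwise transform for every pair of views.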

A coded atlas contains information describing the patches within the atlas. For each patch, a view ID is signalled which identifies which view the patch originated from.

A patch represents a rectangular region of a view, with corresponding regions in all present atlas components: attribute(s), geometry, and occupancy. The size (width and height) of each patch in an atlas is signalled. In this version of the document, the size of a patch is always the same as the corresponding rectangular region in the view texture attribute component, but scaling may optionally be applied to the geometry component or the occupancy component.
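The patch-to-view relationship can be sketched as a list of patch records grouped by their signalled view ID. The `Patch` fields below are hypothetical simplifications. The sketch ignores patch orientation, geometry and occupancy scaling, and tile-level indexing. It simply translates an atlas sample position into the corresponding view sample position.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class Patch:
    atlas_id: int
    view_id: int   # signalled ID of the view the patch originated from
    atlas_x: int   # top-left corner of the patch in the atlas
    atlas_y: int
    view_x: int    # top-left corner of the corresponding region in the view
    view_y: int
    width: int     # same size in atlas and view (no scaling in this sketch)
    height: int


def patches_by_view(patches: List[Patch]) -> Dict[int, List[Patch]]:
    """Group patches by view ID; several patches may map to the same view."""
    groups: Dict[int, List[Patch]] = defaultdict(list)
    for p in patches:
        groups[p.view_id].append(p)
    return dict(groups)


def atlas_to_view(p: Patch, x: int, y: int) -> Tuple[int, int, int]:
    """Map atlas sample (x, y) inside patch p to (view_id, view_x, view_y)."""
    if not (p.atlas_x <= x < p.atlas_x + p.width and p.atlas_y <= y < p.atlas_y + p.height):
        raise ValueError("sample lies outside the patch")
    return p.view_id, x - p.atlas_x + p.view_x, y - p.atlas_y + p.view_y
```

For instance, five hypothetical patches spread over two atlases and tagged with view IDs 0, 1 and 2 yield three groups. This mirrors the shape, but not the actual data, of the example in Figure 1.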

Figure 1 shows an illustrative example, in which two atlases contain five patches, which are mapped to three views, with a texture attribute component and a geometry component.

Key

A0-A1 decoded attribute frames for atlas 0 and 1

G0-G1 decoded geometry frames for atlas 0 and 1

M0-M1 maps for atlas 0 and 1

P0-P8 patches

S0 stage 0 where attribute and geometry frames are decoded for each atlas

S1 stage 1 where block to patch mapping is performed

S2 stage 2 where patches are mapped to views

V0-V2 reconstructed views

Figure 1 — Example mapping of 5 patches in 2 atlases to 3 views

7.6 Sources and outputs

The volumetric video source that is represented by the bitstream is a sequence of volumetric frames.

Each volumetric frame is represented by one or more view frames, each of which may be represented by a geometry picture, an attribute picture for each attribute, and occupancy information, which may be conveyed in the geometry picture or represented separately.

The outputs of the decoding process are described in subclause 9.1.

The outputs of the non-normative rendering process of Annex E are a sequence of one or more views. The number of views and the associated view parameters can be selected by the application. For example, a single view can be output corresponding to a viewport suitable for display, or a set of views can be output which correspond to the source view parameters.

8 Syntax and semantics

8.1 Method of specifying syntax in tabular form

The specifications in ISO/IEC 23090-5:2025, subclause 8.1 apply.

8.2 Specification of syntax functions and descriptors

The specifications in ISO/IEC 23090-5:2025, subclause 8.2 apply.

8.3 Syntax in tabular form

8.3.1 General syntax

The specifications in ISO/IEC 23090-5:2025, subclause 8.3 apply with the following additions.

Annex A specifies profiles, tiers and levels for MIV profiles.

An overview of the V3C bitstream structure with MIV extensions is represented in Figure 2.

Figure 2 — Overview of V3C bitstream with MIV extensions

8.3.2 V3C unit syntax

The specifications in ISO/IEC 23090-5:2025, subclause 8.3.2 apply.

8.3.3 Byte alignment syntax

The specifications in ISO/IEC 23090-5:2025, subclause 8.3.3 apply.

8.3.4 V3C parameter set syntax

The specifications in ISO/IEC 23090-5:2025, subclause 8.3.4 apply.

8.3.5 NAL unit syntax

The specifications in ISO/IEC 23090-5:2025, subclause 8.3.5 apply.

8.3.6 Raw byte sequence payloads, trailing bits, and byte alignment syntax

The specifications in ISO/IEC 23090-5:2025, subclause 8.3.6 apply.

8.3.7 Atlas tile data unit syntax

The specifications in ISO/IEC 23090-5:2025, subclause 8.3.7 apply.

8.3.8 Supplemental enhancement information message syntax

The specifications in ISO/IEC 23090-5:2025, subclause 8.3.8 apply.

8.3.9 V3C MIV extension syntax in tabular form

8.3.9.1 V3C parameter set MIV extension syntax

vps_miv_extension( ) { Descriptor

vme_geometry_scale_enabled_flag u(1)

vme_embedded_occupancy_enabled_flag u(1)

if( !vme_embedded_occupancy_enabled_flag )

vme_occupancy_scale_enabled_flag u(1)

group_mapping( )

}
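The following is a minimal parsing sketch for `vps_miv_extension( )` that follows the conditional structure of the table above. `BitReader` is an illustrative helper that reads MSB first, as for the u(n) descriptor. Parsing of `group_mapping( )` is delegated to a caller-supplied callback, because it depends on VPS fields outside this table. Treating an absent `vme_occupancy_scale_enabled_flag` as 0 is an assumption; the actual inference rules are in the semantics.

```python
from typing import Callable, Dict, Optional


class BitReader:
    """Minimal MSB-first bit reader (illustrative helper, not part of the standard)."""

    def __init__(self, data: bytes) -> None:
        self.data = data
        self.pos = 0  # current bit position

    def u(self, n: int) -> int:
        """Read an n-bit unsigned integer, most significant bit first."""
        value = 0
        for _ in range(n):
            byte = self.data[self.pos >> 3]
            value = (value << 1) | ((byte >> (7 - (self.pos & 7))) & 1)
            self.pos += 1
        return value


def parse_vps_miv_extension(
    r: BitReader,
    parse_group_mapping: Optional[Callable[[BitReader], object]] = None,
) -> Dict[str, object]:
    ext: Dict[str, object] = {}
    ext["vme_geometry_scale_enabled_flag"] = r.u(1)
    ext["vme_embedded_occupancy_enabled_flag"] = r.u(1)
    if not ext["vme_embedded_occupancy_enabled_flag"]:
        # Explicit occupancy: the occupancy scaling flag is signalled.
        ext["vme_occupancy_scale_enabled_flag"] = r.u(1)
    else:
        # ASSUMPTION: the absent flag is treated as 0.
        ext["vme_occupancy_scale_enabled_flag"] = 0
    if parse_group_mapping is not None:
        # group_mapping( ) needs VPS atlas information, so the caller supplies it.
        ext["group_mapping"] = parse_group_mapping(r)
    return ext
```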

8.3.9.2 Group mapping syntax

group_mapping( ) { Descriptor

gm_group_count u(4)

if( gm_group_count > 0 )

for( k = 0; k <= vps_atlas_count_minus1; k++ ) {

j = vps_atlas_id[ k ]

gm_group_id[ j ] u(v)

}

}
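`group_mapping( )` codes `gm_group_id[ j ]` with a variable-length u(v) descriptor. The semantics in 8.4.2 are not reproduced in this excerpt, so the sketch below assumes that length is Ceil( Log2( gm_group_count ) ) bits. The parameter `atlas_ids` is a hypothetical stand-in for vps_atlas_id[ k ], k = 0..vps_atlas_count_minus1.

```python
from typing import Dict, List


class BitReader:
    """Minimal MSB-first bit reader (illustrative helper, not part of the standard)."""

    def __init__(self, data: bytes) -> None:
        self.data = data
        self.pos = 0  # current bit position

    def u(self, n: int) -> int:
        """Read an n-bit unsigned integer, most significant bit first."""
        value = 0
        for _ in range(n):
            byte = self.data[self.pos >> 3]
            value = (value << 1) | ((byte >> (7 - (self.pos & 7))) & 1)
            self.pos += 1
        return value


def parse_group_mapping(r: BitReader, atlas_ids: List[int]) -> Dict[str, object]:
    """Parse group_mapping( ); atlas_ids plays the role of vps_atlas_id[ k ]."""
    gm_group_count = r.u(4)
    gm_group_id: Dict[int, int] = {}
    if gm_group_count > 0:
        # ASSUMPTION: u(v) length is Ceil( Log2( gm_group_count ) ) bits.
        # (n - 1).bit_length() equals Ceil( Log2( n ) ) for n >= 1, without floats.
        length = (gm_group_count - 1).bit_length()
        for j in atlas_ids:
            gm_group_id[j] = r.u(length)
    return {"gm_group_count": gm_group_count, "gm_group_id": gm_group_id}
```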

8.3.9.3 Atlas sequence parameter set MIV extension syntax

asps_miv_extension( ) { Descriptor

asme_ancillary_atlas_flag u(1)

asme_embedded_occupancy_enabled_flag u(1)

if( asme_embedded_occupancy_enabled_flag )

asme_depth_occ_threshold_flag u(1)

asme_geometry_scale_enabled_flag u(1)

if( asme_geometry_scale_enabled_flag ) {

asme_geometry_scale_factor_x_minus1 ue(v)

asme_geometry_scale_factor_y_minus1 ue(v)

}

if( !asme_embedded_occupancy_enabled_flag )

asme_occupancy_scale_enabled_flag u(1)

if( !asme_embedded_occupancy_enabled_flag && asme_occupancy_scale_enabled_flag ) {

asme_occupancy_scale_factor_x_minus1 ue(v)

asme_occupancy_scale_factor_y_minus1 ue(v)

}

asme_patch_constant_depth_flag u(1)

asme_patch_texture_offset_enabled_flag u(1)

if( asme_patch_texture_offset_enabled_flag )

asme_patch_texture_offset_bit_depth_minus1 ue(v)

asme_max_entity_id ue(v)

asme_inpaint_enabled_flag u(1)

}
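The ASPS MIV extension mixes fixed-length u(1) flags with ue(v) Exp-Golomb values. The following Python sketch parses the table above using an illustrative bit reader. It records only the syntax elements that are actually present and leaves inference of absent elements to the semantics.

```python
from typing import Dict


class BitReader:
    """Minimal MSB-first bit reader (illustrative helper, not part of the standard)."""

    def __init__(self, data: bytes) -> None:
        self.data = data
        self.pos = 0  # current bit position

    def u(self, n: int) -> int:
        value = 0
        for _ in range(n):
            byte = self.data[self.pos >> 3]
            value = (value << 1) | ((byte >> (7 - (self.pos & 7))) & 1)
            self.pos += 1
        return value

    def ue(self) -> int:
        """Unsigned Exp-Golomb code: count leading zeros, then read that many bits."""
        leading_zeros = 0
        while self.u(1) == 0:
            leading_zeros += 1
        return (1 << leading_zeros) - 1 + self.u(leading_zeros)


def parse_asps_miv_extension(r: BitReader) -> Dict[str, int]:
    """Parse asps_miv_extension( ), keeping only the elements that are present."""
    e: Dict[str, int] = {}
    e["asme_ancillary_atlas_flag"] = r.u(1)
    e["asme_embedded_occupancy_enabled_flag"] = r.u(1)
    embedded = e["asme_embedded_occupancy_enabled_flag"]
    if embedded:
        e["asme_depth_occ_threshold_flag"] = r.u(1)
    e["asme_geometry_scale_enabled_flag"] = r.u(1)
    if e["asme_geometry_scale_enabled_flag"]:
        e["asme_geometry_scale_factor_x_minus1"] = r.ue()
        e["asme_geometry_scale_factor_y_minus1"] = r.ue()
    if not embedded:
        e["asme_occupancy_scale_enabled_flag"] = r.u(1)
        # Nesting here matches the table's "!embedded && occupancy_scale" condition.
        if e["asme_occupancy_scale_enabled_flag"]:
            e["asme_occupancy_scale_factor_x_minus1"] = r.ue()
            e["asme_occupancy_scale_factor_y_minus1"] = r.ue()
    e["asme_patch_constant_depth_flag"] = r.u(1)
    e["asme_patch_texture_offset_enabled_flag"] = r.u(1)
    if e["asme_patch_texture_offset_enabled_flag"]:
        e["asme_patch_texture_offset_bit_depth_minus1"] = r.ue()
    e["asme_max_entity_id"] = r.ue()
    e["asme_inpaint_enabled_flag"] = r.u(1)
    return e
```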

8.3.9.4 Atlas frame parameter set MIV extension syntax

afps_miv_extension( ) { Descriptor

if( !afps_lod_mode_enabled_flag ) {

afme_inpaint_lod_enabled_flag u(1)

if( afme_inpaint_lod_enabled_flag ) {

afme_inpaint_lod_scale_x_minus1 ue(v)

afme_inpaint_lod_scale_y_idc ue(v)

}

}

}
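`afps_miv_extension( )` depends on `afps_lod_mode_enabled_flag`, which is carried in the enclosing atlas frame parameter set of ISO/IEC 23090-5. The sketch below therefore takes that flag as an argument. The bit reader is an illustrative helper.

```python
from typing import Dict


class BitReader:
    """Minimal MSB-first bit reader (illustrative helper, not part of the standard)."""

    def __init__(self, data: bytes) -> None:
        self.data = data
        self.pos = 0  # current bit position

    def u(self, n: int) -> int:
        value = 0
        for _ in range(n):
            byte = self.data[self.pos >> 3]
            value = (value << 1) | ((byte >> (7 - (self.pos & 7))) & 1)
            self.pos += 1
        return value

    def ue(self) -> int:
        """Unsigned Exp-Golomb code, ue(v)."""
        leading_zeros = 0
        while self.u(1) == 0:
            leading_zeros += 1
        return (1 << leading_zeros) - 1 + self.u(leading_zeros)


def parse_afps_miv_extension(r: BitReader, afps_lod_mode_enabled_flag: int) -> Dict[str, int]:
    """Parse afps_miv_extension( ); nothing is read when LoD mode is enabled."""
    e: Dict[str, int] = {}
    if not afps_lod_mode_enabled_flag:
        e["afme_inpaint_lod_enabled_flag"] = r.u(1)
        if e["afme_inpaint_lod_enabled_flag"]:
            e["afme_inpaint_lod_scale_x_minus1"] = r.ue()
            e["afme_inpaint_lod_scale_y_idc"] = r.ue()
    return e
```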

8.3.9.5 Common atlas sequence parameter set MIV extension syntax

casps_miv_extension( ) { Descriptor

casme_depth_low_quality_flag u(1)

casme_depth_quantization_params_present_flag u(1)

casme_vui_params_present_flag u(1)

if( casme_vui_params_present_flag )

vui_parameters( )

}
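The following is a sketch of `casps_miv_extension( )` as shown in the table above. `vui_parameters( )` is specified in Annex D and is not reproduced here, so it is passed in as a hypothetical callback. The sketch raises an error if VUI is signalled but no parser is supplied. Only the three elements in this table are handled.

```python
from typing import Callable, Dict, Optional


class BitReader:
    """Minimal MSB-first bit reader (illustrative helper, not part of the standard)."""

    def __init__(self, data: bytes) -> None:
        self.data = data
        self.pos = 0  # current bit position

    def u(self, n: int) -> int:
        value = 0
        for _ in range(n):
            byte = self.data[self.pos >> 3]
            value = (value << 1) | ((byte >> (7 - (self.pos & 7))) & 1)
            self.pos += 1
        return value


def parse_casps_miv_extension(
    r: BitReader, parse_vui: Optional[Callable[[BitReader], object]] = None
) -> Dict[str, object]:
    e: Dict[str, object] = {}
    e["casme_depth_low_quality_flag"] = r.u(1)
    e["casme_depth_quantization_params_present_flag"] = r.u(1)
    e["casme_vui_params_present_flag"] = r.u(1)
    if e["casme_vui_params_present_flag"]:
        if parse_vui is None:
            raise NotImplementedError("vui_parameters( ) parser not supplied")
        e["vui_parameters"] = parse_vui(r)  # hypothetical Annex D parser
    return e
```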

8.3.9.6 Common atlas frame

8.3.9.6.1 MIV extension syntax

caf_miv_extension( ) { Descriptor

if( nal_unit_type == NAL_CAF_IDR ) {

miv_view_params_list( )

} else {

came_update_extrinsics_flag u(1)

came_update_intrinsics_flag u(1)

if( casme_depth_quantization_params_present_flag )

came_update_depth_quantization_flag u(1)

if( casme_chroma_scaling_present_flag )

came_update_chroma_scaling_flag u(1)

if( came_update_extrinsics_flag )

miv_view_params_update_extrinsics( )

if( came_update_intrinsics_flag )

miv_view_params_update_intrinsics( )

if( came_update_depth_quantization_flag )

miv_view_params_update_depth_quantization( )

if( came_update_chroma_scaling_flag )

miv_view_params_update_chroma_scaling( )

if( casme_capture_device_information_present_flag ) {

came_update_sensor_extrinsics_flag u(1)

came_update_distortion_parameters_flag u(1)

came_update_light_source_extrinsics_flag u(1)

if( came_update_sensor_extrinsics_flag )

miv_view_params_update_sensor_extrinsics( )

if( came_update_distortion_parameters_flag )

miv_view_params_update_distortion_parameters( )

if( came_update_light_source_extrinsics_flag )

miv_view_params_update_light_source_extrinsics( )

}

}

}
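The control flow of `caf_miv_extension( )` can be sketched as follows. In a non-IDR common atlas frame, the extrinsics, intrinsics, depth quantization and chroma scaling update flags are read before any update structure. The capture-device update flags are read only after those view parameter updates. Sub-structure parsing is delegated to a hypothetical `parse_sub` callback, and `casps` is a plain dictionary of the relevant casme_* flags. Treating absent flags as 0 is an assumption.

```python
from typing import Callable, Dict, Mapping


class BitReader:
    """Minimal MSB-first bit reader (illustrative helper, not part of the standard)."""

    def __init__(self, data: bytes) -> None:
        self.data = data
        self.pos = 0  # current bit position

    def u(self, n: int) -> int:
        value = 0
        for _ in range(n):
            byte = self.data[self.pos >> 3]
            value = (value << 1) | ((byte >> (7 - (self.pos & 7))) & 1)
            self.pos += 1
        return value


def parse_caf_miv_extension(
    r: BitReader,
    is_idr: bool,
    casps: Mapping[str, int],
    parse_sub: Callable[[str, BitReader], object],
) -> Dict[str, object]:
    out: Dict[str, object] = {}
    if is_idr:  # nal_unit_type == NAL_CAF_IDR: full list, no updates
        out["miv_view_params_list"] = parse_sub("miv_view_params_list", r)
        return out

    def opt_flag(present_key: str) -> int:
        # ASSUMPTION: a flag that is not present is treated as 0.
        return r.u(1) if casps.get(present_key, 0) else 0

    ext_flag = r.u(1)  # came_update_extrinsics_flag
    intr_flag = r.u(1)  # came_update_intrinsics_flag
    dq_flag = opt_flag("casme_depth_quantization_params_present_flag")
    cs_flag = opt_flag("casme_chroma_scaling_present_flag")
    for flag, name in ((ext_flag, "miv_view_params_update_extrinsics"),
                       (intr_flag, "miv_view_params_update_intrinsics"),
                       (dq_flag, "miv_view_params_update_depth_quantization"),
                       (cs_flag, "miv_view_params_update_chroma_scaling")):
        if flag:
            out[name] = parse_sub(name, r)

    if casps.get("casme_capture_device_information_present_flag", 0):
        # Capture-device update flags follow the view parameter updates.
        cdi_flags = [r.u(1) for _ in range(3)]
        cdi_names = ("miv_view_params_update_sensor_extrinsics",
                     "miv_view_params_update_distortion_parameters",
                     "miv_view_params_update_light_source_extrinsics")
        for flag, name in zip(cdi_flags, cdi_names):
            if flag:
                out[name] = parse_sub(name, r)
    return out
```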

8.3.9.6.2 MIV view parameters list syntax

miv_view_params_list( ) { Descriptor

mvp_num_views_minus1 u(16)

mvp_explicit_view_id_flag u(1)

if( mvp_explicit_view_id_flag )

for( v = 0; v <= mvp_num_views_minus1; v++ )

mvp_view_id[ v ] u(16)

for( v = 0; v <= mvp_num_views_minus1; v++ ) {

camera_extrinsics( v )

mvp_inpaint_flag[ v ] u(1)

if( casme_capture_device_information_present_flag ) {

i = mvp_device_model_id[ v ] u(6)

for( s = 0; s < SensorCount[ i ]; s++ ) {

if( IntraSensorParallaxFlag[ i ] )

sensor_extrinsics( v, s )

distortion_parameters( v, s )

}

for( s = 0; s < LightSourceCount[ i ]; s++ )

light_source_extrinsics( v, s )

}

}

mvp_intrinsic_params_equal_flag u(1)

for( v = 0; v <= ( mvp_intrinsic_params_equal_flag ? 0: mvp_num_views_minus1 ); v++ )

camera_intrinsics( v )

if( casme_depth_quantization_params_present_flag ) {

mvp_depth_quantization_params_equal_flag u(1)

for( v = 0; v <= ( mvp_depth_quantization_params_equal_flag

? 0: mvp_num_views_minus1 ); v++ )

depth_quantization( v )

}

mvp_pruning_graph_params_present_flag u(1)

if( mvp_pruning_graph_params_present_flag )

for( v = 0; v <= mvp_num_views_minus1; v++ )

pruning_parents( v )

if( casme_decoder_side_depth_estimation_flag )

mvp_depth_reprojection_flag u(1)

if( casme_chroma_scaling_present_flag )

for( v = 0; v <= mvp_num_views_minus1; v++ )

chroma_scaling( v )

if( casme_background_separation_enabled_flag )

for( v = 0; v <= mvp_num_views_minus1; v++ )

mvp_view_background_flag[ v ] u(1)

}
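The loop bounds of the form ( flag ? 0 : mvp_num_views_minus1 ) in miv_view_params_list( ) mean that one shared camera_intrinsics( ) or depth_quantization( ) structure is signalled when the corresponding equal flag is 1, and one structure per view otherwise. The C sketch below shows that bookkeeping. The helper names are hypothetical. The inference mvp_view_id[ v ] = v when mvp_explicit_view_id_flag is 0 is an assumption, because the semantics are not reproduced in this excerpt.

```c
#include <stdbool.h>
#include <stdint.h>

/* Number of camera_intrinsics( ) (or depth_quantization( )) structures
 * present: the loop runs for v = 0 .. ( equal_flag ? 0 : num_views_minus1 ). */
static unsigned num_signalled_structs(bool equal_flag, unsigned num_views_minus1)
{
    return equal_flag ? 1u : num_views_minus1 + 1u;
}

/* Index of the signalled structure that applies to view v
 * (hypothetical helper): with a shared structure, every view uses entry 0. */
static unsigned struct_index_for_view(bool equal_flag, unsigned v)
{
    return equal_flag ? 0u : v;
}

/* View ID of view v. Assumption: when mvp_explicit_view_id_flag is 0,
 * mvp_view_id[ v ] is inferred to be equal to v (the array is then unused). */
static uint16_t view_id_of(bool explicit_flag, const uint16_t mvp_view_id[], unsigned v)
{
    return explicit_flag ? mvp_view_id[v] : (uint16_t)v;
}
```

Keeping the shared-versus-per-view choice in one index function lets the rest of a parser look up parameters by view without re-testing the flag.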

8.3.9.6.3 MIV view parameters update extrinsics syntax

miv_view_params_update_extrinsics( ) { Descriptor

mvpue_num_view_updates_minus1 u(16)

for( i = 0; i <= mvpue_num_view_updates_minus1; i++ ) {

mvpue_view_idx[ i ] u(16)

camera_extrinsics( mvpue_view_idx[ i ] )

}

}

8.3.9.6.4 MIV view parameters update intrinsics syntax

miv_view_params_update_intrinsics( ) { Descriptor

mvpui_num_view_updates_minus1 u(16)

for( i = 0; i <= mvpui_num_view_updates_minus1; i++ ) {

mvpui_view_idx[ i ] u(16)

camera_intrinsics( mvpui_view_idx[ i ] )

}

}

8.3.9.6.5 MIV view parameters update depth quantization syntax

miv_view_params_update_depth_quantization( ) { Descriptor

mvpudq_num_view_updates_minus1 u(16)

for( i = 0; i <= mvpudq_num_view_updates_minus1; i++ ) {

mvpudq_view_idx[ i ] u(16)

depth_quantization( mvpudq_view_idx[ i ] )

}

}
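The update structures in 8.3.9.6.3 to 8.3.9.6.5 share one pattern, which 8.3.9.6.10 repeats for chroma scaling: a u(16) count minus one, followed for each entry by a view index and a complete replacement structure for that view. The C sketch below applies such a list to a parser's stored per-view state. It assumes that views not listed keep their previous values and treats an index at or beyond the number of views as an error. The types, the reduced set of fields and the function name are hypothetical.

```c
#include <stddef.h>
#include <stdint.h>

/* Hypothetical stored parameters for one view (position only, for brevity). */
typedef struct {
    float pos_x, pos_y, pos_z;
} ViewExtrinsics;

/* One decoded update entry: mvpue_view_idx[ i ] plus the new values. */
typedef struct {
    uint16_t view_idx;
    ViewExtrinsics params;
} ExtrinsicsUpdate;

/* Apply num_updates_minus1 + 1 updates to the per-view table.
 * Views not listed keep their previous values (assumption).
 * Returns the number of updates applied, or -1 if any index is out of
 * range, in which case the table is left unchanged. */
static int apply_extrinsics_updates(ViewExtrinsics *views, size_t num_views,
                                    const ExtrinsicsUpdate *updates,
                                    unsigned num_updates_minus1)
{
    /* Validate every entry first so a bad index cannot cause a partial update. */
    for (unsigned i = 0; i <= num_updates_minus1; i++)
        if (updates[i].view_idx >= num_views)
            return -1;
    for (unsigned i = 0; i <= num_updates_minus1; i++)
        views[updates[i].view_idx] = updates[i].params;
    return (int)num_updates_minus1 + 1;
}
```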

8.3.9.6.6 Camera extrinsics syntax

camera_extrinsics( v ) { Descriptor

ce_view_pos_x[ v ] fl(32)

ce_view_pos_y[ v ] fl(32)

ce_view_pos_z[ v ] fl(32)

ce_view_quat_x[ v ] i(32)

ce_view_quat_y[ v ] i(32)

ce_view_quat_z[ v ] i(32)

}

8.3.9.6.7 Camera intrinsics syntax

camera_intrinsics( v ) { Descriptor

ci_cam_type[ v ] u(8)

ci_projection_plane_width_minus1[ v ] u(16)

ci_projection_plane_height_minus1[ v ] u(16)

if( ci_cam_type[ v ] == 0 ) { /* equirectangular */

ci_erp_phi_min[ v ] fl(32)

ci_erp_phi_max[ v ] fl(32)

ci_erp_theta_min[ v ] fl(32)

ci_erp_theta_max[ v ] fl(32)

} else if( ci_cam_type[ v ] == 1 ) { /* perspective */

ci_perspective_focal_hor[ v ] fl(32)

ci_perspective_focal_ver[ v ] fl(32)

ci_perspective_principal_point_hor[ v ] fl(32)

ci_perspective_principal_point_ver[ v ] fl(32)

} else if( ci_cam_type[ v ] == 2 ) { /* orthographic */

ci_ortho_width[ v ] fl(32)

ci_ortho_height[ v ] fl(32)

}

}
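The fl(32) fields above are 32-bit IEEE 754 values read most significant bit first. The C sketch below reads the perspective case of camera_intrinsics( ) with a minimal MSB-first bit reader. It assumes the host float type is IEEE 754 binary32, handles only ci_cam_type equal to 1, and uses hypothetical names throughout.

```c
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Minimal MSB-first bit reader (hypothetical helper). */
typedef struct {
    const uint8_t *buf;
    size_t len;    /* buffer length in bytes */
    size_t bitpos; /* index of the next bit to read */
} BitReader;

/* Read one bit; bits past the end read as 0 (a real parser should flag this). */
static uint32_t read_bit(BitReader *br)
{
    size_t byte = br->bitpos >> 3;
    uint32_t bit = 0;
    if (byte < br->len)
        bit = (br->buf[byte] >> (7u - (br->bitpos & 7u))) & 1u;
    br->bitpos++;
    return bit;
}

/* u(n), 0 <= n <= 32: unsigned integer, most significant bit first. */
static uint32_t read_u(BitReader *br, unsigned n)
{
    uint32_t v = 0;
    for (unsigned i = 0; i < n; i++)
        v = (v << 1) | read_bit(br);
    return v;
}

/* fl(32): 32 bits in big-endian order, reinterpreted as IEEE 754 binary32. */
static float read_fl32(BitReader *br)
{
    uint32_t bits = read_u(br, 32);
    float f;
    memcpy(&f, &bits, sizeof f);
    return f;
}

typedef struct {
    uint8_t cam_type;
    uint32_t width, height; /* ci_projection_plane_*_minus1 + 1 */
    float focal_hor, focal_ver, pp_hor, pp_ver;
} PerspectiveIntrinsics;

/* Parse camera_intrinsics( v ) when ci_cam_type is 1 (perspective).
 * Returns -1 without reading further for any other camera type. */
static int parse_perspective_intrinsics(BitReader *br, PerspectiveIntrinsics *ci)
{
    ci->cam_type = (uint8_t)read_u(br, 8);
    ci->width = read_u(br, 16) + 1u;
    ci->height = read_u(br, 16) + 1u;
    if (ci->cam_type != 1)
        return -1;
    ci->focal_hor = read_fl32(br);
    ci->focal_ver = read_fl32(br);
    ci->pp_hor = read_fl32(br);
    ci->pp_ver = read_fl32(br);
    return 0;
}
```

For ci_cam_type equal to 0 or 2, the same reader would continue with the four ERP angles or the two orthographic extents, respectively.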

8.3.9.6.8 Depth quantization syntax

depth_quantization( v ) { Descriptor

dq_quantization_law[ v ] ue(v)

if( dq_quantization_law[ v ] == 0 ) {

dq_norm_disp_low[ v ] fl(32)

dq_norm_disp_high[ v ] fl(32)

} else if( dq_quantization_law[ v ] == 2 ) {

dq_norm_disp_low[ v ] fl(32)

dq_norm_disp_high[ v ] fl(32)

dq_pivot_count_minus1[ v ] u(8)

for( i = 0; i <= dq_pivot_count_minus1[ v ]; i++ )

dq_pivot_norm_disp[ v ][ i ] fl(32)

} else if( dq_quantization_law[ v ] == 4 ) {

dq_linear_near[ v ] fl(32)

dq_linear_far[ v ] fl(32)

}

dq_depth_occ_threshold_default[ v ] ue(v)

}
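For dq_quantization_law equal to 0, dq_norm_disp_low and dq_norm_disp_high bound the range of normalized disparity (inverse depth) covered by the geometry samples. The C sketch below converts a decoded geometry sample to depth under the assumption, not stated in this excerpt, that the mapping is linear in disparity over the full sample range and that depth is the reciprocal of disparity. Laws 2 and 4 are not covered, and the function name is hypothetical.

```c
#include <stdint.h>

/* Depth for dq_quantization_law 0 (assumed mapping):
 *   disp  = low + ( high - low ) * sample / ( 2^bit_depth - 1 )
 *   depth = 1 / disp
 * bit_depth must be in 1..32. Returns 0.0 when disp is not positive,
 * i.e. when no finite depth can be derived. */
static double depth_from_sample_law0(uint32_t sample, unsigned bit_depth,
                                     float norm_disp_low, float norm_disp_high)
{
    const double max_sample = (double)(((uint64_t)1 << bit_depth) - 1u);
    double disp = (double)norm_disp_low
                + ((double)norm_disp_high - (double)norm_disp_low) * sample / max_sample;
    return disp > 0.0 ? 1.0 / disp : 0.0;
}
```

With these assumptions, a larger sample value means a larger disparity and therefore a nearer point.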

8.3.9.6.9 Pruning parents syntax

pruning_parents( v ) { Descriptor

pp_is_root_flag[ v ] u(1)

if( !pp_is_root_flag[ v ] ) {

pp_num_parents_minus1[ v ] u(v)

for( i = 0; i <= pp_num_parents_minus1[ v ]; i++ )

pp_parent_idx[ v ][ i ] u(v)

}

}
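pruning_parents( ) describes a directed graph in which each non-root view lists the views it was pruned against. A tool that inspects or validates this graph may need an order in which every view follows all of its parents. The C sketch below computes one with a simple topological sort that counts the unplaced parents of each view. It assumes the graph is required to be acyclic and takes the number of parents of view v as 0 for a root view and pp_num_parents_minus1[ v ] + 1 otherwise. The bound MAX_VIEWS and the function name are hypothetical.

```c
#include <stdbool.h>
#include <stddef.h>

#define MAX_VIEWS 64 /* hypothetical bound for this sketch */

/* Fill order[] so that every view appears after all of its pruning parents.
 * num_parents[v] is 0 for a root view, else pp_num_parents_minus1[ v ] + 1;
 * parents[v][i] holds pp_parent_idx[ v ][ i ] (read only).
 * Returns false if the views cannot be ordered (a cycle or an out-of-range
 * parent index) or if a bound of this sketch is exceeded. */
static bool pruning_order(size_t num_views, const size_t num_parents[],
                          size_t parents[][MAX_VIEWS], size_t order[])
{
    size_t pending[MAX_VIEWS]; /* parents of each view not yet placed */
    bool placed[MAX_VIEWS] = { false };
    size_t count = 0;

    if (num_views > MAX_VIEWS)
        return false;
    for (size_t v = 0; v < num_views; v++) {
        if (num_parents[v] > MAX_VIEWS)
            return false;
        pending[v] = num_parents[v];
    }

    while (count < num_views) {
        bool progress = false;
        for (size_t v = 0; v < num_views; v++) {
            if (placed[v] || pending[v] != 0)
                continue;
            placed[v] = true;
            order[count++] = v;
            progress = true;
            /* Each child of v now has one fewer unplaced parent. */
            for (size_t c = 0; c < num_views; c++)
                for (size_t i = 0; i < num_parents[c]; i++)
                    if (parents[c][i] == v)
                        pending[c]--;
        }
        if (!progress)
            return false; /* the remaining views all wait on each other */
    }
    return true;
}
```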

8.3.9.6.10 MIV view parameters update chroma scaling syntax

miv_view_params_update_chroma_scaling( ) { Descriptor

mvpucs_num_view_updates_minus1 u(16)

for( i = 0; i <= mvpucs_num_view_updates_minus1; i++ ) {

mvpucs_view_idx[ i ] u(16)

chroma_scaling( mvpucs_view_idx[ i ] )

}

}

8.3.9.6.11 Chroma scaling syntax

chroma_scaling( v ) { Descriptor

cs_u_min[ v ] u(v)

cs_u_max[ v ] u(v)

cs_v_min[ v ] u(v)

cs_v_max[ v ] u(v)

}

8.3.9.6.12 Sensor extrinsics syntax

sensor_extrinsics( v, s ) { Descriptor

se_sensor_pos_x[ v ][ s ] fl(32)

se_sensor_pos_y[ v ][ s ] fl(32)

se_sensor_pos_z[ v ][ s ] fl(32)

se_sensor_quat_x[ v ][ s ] i(32)

se_sensor_quat_y[ v ][ s ] i(32)

se_sensor_quat_z[ v ][ s ] i(32)

}

8.3.9.6.13 Light source extrinsics syntax

light_source_extrinsics( v, s ) { Descriptor

lse_light_source_pos_x[ v ][ s ] fl(32)

lse_light_source_pos_y[ v ][ s ] fl(32)

lse_light_source_pos_z[ v ][ s ] fl(32)

lse_light_source_quat_x[ v ][ s ] i(32)

lse_light_source_quat_y[ v ][ s ] i(32)

lse_light_source_quat_z[ v ][ s ] i(32)

}

8.3.9.6.14 Distortion parameters syntax

distortion_parameters( v, s ) { Descriptor

dp_model_id[ v ][ s ] ue(v)

if( dp_model_id[ v ][ s ] != 0 )

dp_coefficient_count[ v ][ s ] ue(v)

for( i = 0; i < dp_coefficient_count[ v ][ s ]; i++ )

dp_coefficient[ v ][ s ][ i ] fl(32)

}
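dp_model_id and dp_coefficient_count use the ue(v) descriptor, that is, unsigned Exp-Golomb codes: a run of leading zero bits, a one bit, and then as many suffix bits as there were leading zeros, giving the value 2^leadingZeros - 1 + suffix. The C sketch below parses distortion_parameters( ) with such a reader. It assumes that the coefficient loop runs dp_coefficient_count[ v ][ s ] times and that the count is 0 when it is not signalled. The storage bound and all names are hypothetical.

```c
#include <stddef.h>
#include <stdint.h>
#include <string.h>

typedef struct {
    const uint8_t *buf;
    size_t len;    /* buffer length in bytes */
    size_t bitpos; /* index of the next bit to read, MSB first */
} BitReader;

/* Read one bit; bits past the end read as 0 (a real parser should flag this). */
static uint32_t read_bit(BitReader *br)
{
    size_t byte = br->bitpos >> 3;
    uint32_t bit = 0;
    if (byte < br->len)
        bit = (br->buf[byte] >> (7u - (br->bitpos & 7u))) & 1u;
    br->bitpos++;
    return bit;
}

/* u(n), 0 <= n <= 32. */
static uint32_t read_u(BitReader *br, unsigned n)
{
    uint32_t v = 0;
    for (unsigned i = 0; i < n; i++)
        v = (v << 1) | read_bit(br);
    return v;
}

/* ue(v): count leading zeros, skip the terminating 1, read the suffix.
 * Codes with more than 31 leading zeros are rejected as UINT32_MAX. */
static uint32_t read_ue(BitReader *br)
{
    unsigned leading_zeros = 0;
    while (read_bit(br) == 0)
        if (++leading_zeros > 31)
            return UINT32_MAX;
    return ((1u << leading_zeros) - 1u) + read_u(br, leading_zeros);
}

#define MAX_DP_COEFFS 16 /* hypothetical storage bound */

typedef struct {
    uint32_t model_id;
    uint32_t coefficient_count;
    float coefficient[MAX_DP_COEFFS];
} DistortionParams;

/* Parse distortion_parameters( v, s ). Returns -1 if the signalled count
 * exceeds this sketch's storage, else 0. */
static int parse_distortion_parameters(BitReader *br, DistortionParams *dp)
{
    dp->model_id = read_ue(br);
    dp->coefficient_count = 0; /* assumed value when not signalled */
    if (dp->model_id != 0)
        dp->coefficient_count = read_ue(br);
    if (dp->coefficient_count > MAX_DP_COEFFS)
        return -1;
    for (uint32_t i = 0; i < dp->coefficient_count; i++) {
        uint32_t bits = read_u(br, 32); /* fl(32), big-endian IEEE 754 */
        memcpy(&dp->coefficient[i], &bits, sizeof bits);
    }
    return 0;
}
```

For instance, the bits 010 011 followed by the fl(32) encodings of 1.0 and -0.5 parse as dp_model_id 1 with two coefficients, consuming 70 bits.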

8.3.9.6.15 MIV view parameters update sensor extrinsics syntax

miv_view_params_update_sensor_extrinsics( ) { Descriptor

mvpuse_num_updates_minus1 u(16)

for( i = 0; i <= mvpuse_num_updates_minus1; i++ ) {

v = mvpuse_view_idx[ i ] u(16)

s = mvpuse_sensor_idx[ i ] u(16)

sensor_extrinsics( v, s )

}

}

8.3.9.6.16 MIV view parameters update distortion parameters syntax

miv_view_params_update_distortion_parameters( ) { Descriptor

mvpudp_num_updates_minus1 u(16)

for( i = 0; i <= mvpudp_num_updates_minus1; i++ ) {

v = mvpudp_view_idx[ i ] u(16)

s = mvpudp_sensor_idx[ i ] u(16)

distortion_parameters( v, s )

}

}

8.3.9.6.17 MIV view parameters update light source extrinsics syntax

miv_view_params_update_light_source_extrinsics( ) { Descriptor

mvpulse_num_updates_minus1 u(16)

for( i = 0; i <= mvpulse_num_updates_minus1; i++ ) {

v = mvpulse_view_idx[ i ] u(16)

s = mvpulse_sensor_idx[ i ] u(16)

light_source_extrinsics( v, s )

}

}

8.3.9.7 Patch data unit MIV extension syntax

pdu_miv_extension( tileID, p ) { Descriptor

if( asme_max_entity_id > 0

...
