ISO/IEC 14496-16:2006

Information technology - Coding of audio-visual objects - Part 16: Animation Framework eXtension (AFX)


ISO/IEC 14496-16:2006 specifies the MPEG-4 Animation Framework eXtension (AFX) model for representing 3D Graphics content. Within this model, MPEG-4 is extended with higher-level synthetic objects for specifying geometry, texture, and animation, as well as dedicated compression algorithms. The geometry tools include Non Uniform Rational B-Splines (NURBS), Subdivision Surfaces, Mesh Grid, Morphing Space and Solid Representation based on implicit equations. The texture tools include Depth-Image based Representation and MultiTexturing. The animation tools comprise Non-Linear Deformers and Bone-based Animation for skinned models. AFX also specifies profiles and levels for 3D Graphics Compression tools and the encoding parameters for 3D content. In addition, AFX specifies a backchannel for progressive streaming of view-dependent information.

Technologies de l'information — Codage des objets audiovisuels — Partie 16: Extension du cadre d'animation (AFX)


ISO/IEC 14496-16:2006 is a standard published by the International Organization for Standardization (ISO).


ISO/IEC 14496-16:2006 is classified under the following ICS (International Classification for Standards) categories: 35.040 - Information coding; 35.040.40 - Coding of audio, video, multimedia and hypermedia information. The ICS classification helps identify the subject area and facilitates finding related standards.

ISO/IEC 14496-16:2006 is linked to the following standards: ISO/IEC 14496-16:2006/Amd 1:2007, ISO/IEC 14496-16:2006/Amd 3:2008, ISO/IEC 14496-16:2006/Amd 2:2009, ISO/IEC 14496-16:2009, ISO/IEC 14496-16:2004/Amd 1:2006, ISO/IEC 14496-16:2004, ISO/IEC 14496-16:2004/Cor 1:2005 and ISO/IEC 14496-16:2004/Cor 2:2005. It is amended by ISO/IEC 14496-16:2006/Amd 1:2007, ISO/IEC 14496-16:2006/Amd 2:2009 and ISO/IEC 14496-16:2006/Amd 3:2008.



INTERNATIONAL STANDARD ISO/IEC 14496-16

Second edition

2006-12-15

Information technology — Coding of

audio-visual objects —

Part 16:

Animation Framework eXtension (AFX)

Technologies de l'information — Codage des objets audiovisuels —

Partie 16: Extension du cadre d'animation (AFX)

Reference number

© ISO/IEC 2006


© ISO/IEC 2006

All rights reserved. Unless otherwise specified, no part of this publication may be reproduced or utilized in any form or by any means, electronic or mechanical, including photocopying and microfilm, without permission in writing from either ISO at the address below or ISO's member body in the country of the requester.

ISO copyright office

Case postale 56 • CH-1211 Geneva 20

Tel. + 41 22 749 01 11

Fax + 41 22 749 09 47

E-mail copyright@iso.org

Web www.iso.org

Published in Switzerland

ii © ISO/IEC 2006 – All rights reserved

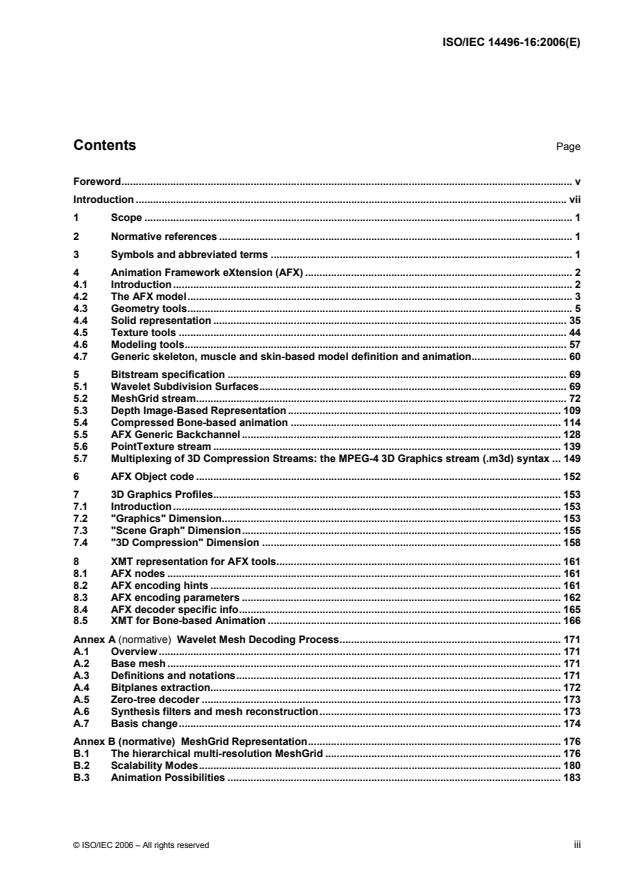

Contents

Foreword
Introduction
1 Scope
2 Normative references
3 Symbols and abbreviated terms
4 Animation Framework eXtension (AFX)
4.1 Introduction
4.2 The AFX model
4.3 Geometry tools
4.4 Solid representation
4.5 Texture tools
4.6 Modeling tools
4.7 Generic skeleton, muscle and skin-based model definition and animation
5 Bitstream specification
5.1 Wavelet Subdivision Surfaces
5.2 MeshGrid stream
5.3 Depth Image-Based Representation
5.4 Compressed Bone-based animation
5.5 AFX Generic Backchannel
5.6 PointTexture stream
5.7 Multiplexing of 3D Compression Streams: the MPEG-4 3D Graphics stream (.m3d) syntax
6 AFX Object code
7 3D Graphics Profiles
7.1 Introduction
7.2 "Graphics" Dimension
7.3 "Scene Graph" Dimension
7.4 "3D Compression" Dimension
8 XMT representation for AFX tools
8.1 AFX nodes
8.2 AFX encoding hints
8.3 AFX encoding parameters
8.4 AFX decoder specific info
8.5 XMT for Bone-based Animation
Annex A (normative) Wavelet Mesh Decoding Process
A.1 Overview
A.2 Base mesh
A.3 Definitions and notations
A.4 Bitplanes extraction
A.5 Zero-tree decoder
A.6 Synthesis filters and mesh reconstruction
A.7 Basis change
Annex B (normative) MeshGrid Representation
B.1 The hierarchical multi-resolution MeshGrid
B.2 Scalability Modes
B.3 Animation Possibilities
Annex C (informative) MeshGrid representation
C.1 Representing Quadrilateral meshes in the MeshGrid format
C.2 IndexedFaceSet models represented by the MeshGrid format
C.3 Computation of the number of ROIs at the highest resolution level given an optimal ROI size
Annex D (informative) Solid representation
D.1 Overview
D.2 Solid Primitives
D.3 Arithmetics of Forms
Annex E (informative) Face and Body animation: XMT compliant animation and encoding parameter file format
E.1 XSD for FAP file format
E.2 XSD for BAP file format
E.3 XSD for EPF
Annex F (normative) Node coding tables
F.1 Node Coding tables
F.2 Node Definition Type tables
Annex G (informative) Patent statements
Bibliography

Foreword

ISO (the International Organization for Standardization) and IEC (the International Electrotechnical Commission) form the specialized system for worldwide standardization. National bodies that are members of ISO or IEC participate in the development of International Standards through technical committees established by the respective organization to deal with particular fields of technical activity. ISO and IEC technical committees collaborate in fields of mutual interest. Other international organizations, governmental and non-governmental, in liaison with ISO and IEC, also take part in the work. In the field of information technology, ISO and IEC have established a joint technical committee, ISO/IEC JTC 1.

International Standards are drafted in accordance with the rules given in the ISO/IEC Directives, Part 2.

The main task of the joint technical committee is to prepare International Standards. Draft International Standards adopted by the joint technical committee are circulated to national bodies for voting. Publication as an International Standard requires approval by at least 75 % of the national bodies casting a vote.

ISO/IEC 14496-16 was prepared by Joint Technical Committee ISO/IEC JTC 1, Information technology, Subcommittee SC 29, Coding of audio, picture, multimedia and hypermedia information.

This second edition cancels and replaces the first edition (ISO/IEC 14496-16:2004), which has been technically revised. It also incorporates the amendment ISO/IEC 14496-16:2004/Amd.1:2006 and the Technical Corrigenda ISO/IEC 14496-16:2004/Cor.1:2005 and ISO/IEC 14496-16:2004/Cor.2:2005.

ISO/IEC 14496 consists of the following parts, under the general title Information technology — Coding of audio-visual objects:

⎯ Part 1: Systems

⎯ Part 2: Visual

⎯ Part 3: Audio

⎯ Part 4: Conformance testing

⎯ Part 5: Reference software

⎯ Part 6: Delivery Multimedia Integration Framework (DMIF)

⎯ Part 7: Optimized reference software for coding of audio-visual objects [Technical Report]

⎯ Part 8: Carriage of ISO/IEC 14496 contents over IP networks

⎯ Part 9: Reference hardware description [Technical Report]

⎯ Part 10: Advanced Video Coding

⎯ Part 11: Scene description and application engine

⎯ Part 12: ISO base media file format

⎯ Part 13: Intellectual Property Management and Protection (IPMP) extensions

⎯ Part 14: MP4 file format

⎯ Part 15: Advanced Video Coding (AVC) file format

⎯ Part 16: Animation Framework eXtension (AFX)

⎯ Part 17: Streaming text format

⎯ Part 18: Font compression and streaming


⎯ Part 19: Synthesized texture stream

⎯ Part 20: Lightweight Application Scene Representation (LASeR) and Simple Aggregation Format (SAF)

⎯ Part 21: MPEG-J GFX

⎯ Part 22: Open Font Format


Introduction

The International Organization for Standardization (ISO) and International Electrotechnical Commission (IEC) draw attention to the fact that it is claimed that compliance with this document may involve the use of patents. The ISO and IEC take no position concerning the evidence, validity and scope of these patent rights.

The holders of these patent rights have assured the ISO and IEC that they are willing to negotiate licences under reasonable and non-discriminatory terms and conditions with applicants throughout the world. In this respect, the statements of the holders of these patent rights are registered with the ISO and IEC. Information may be obtained from the companies listed in Annex G.

Attention is drawn to the possibility that some of the elements of this document may be the subject of patent rights other than those identified in Annex G. ISO and IEC shall not be held responsible for identifying any or all such patent rights.


INTERNATIONAL STANDARD ISO/IEC 14496-16:2006(E)

Information technology — Coding of audio-visual objects —

Part 16:

Animation Framework eXtension (AFX)

1 Scope

This International Standard specifies the MPEG-4 Animation Framework eXtension (AFX) model for creating interactive multimedia content by composing natural and synthetic objects. Within this model, MPEG-4 is extended with higher-level synthetic objects for geometry, texture, and animation, as well as dedicated compressed representations.

AFX also specifies a backchannel for progressive streaming of view-dependent information.

2 Normative references

The following referenced documents are indispensable for the application of this document. For dated references, only the edition cited applies. For undated references, the latest edition of the referenced document (including any amendments) applies.

ISO/IEC 14496-1:2004, Information technology — Coding of audio-visual objects — Part 1: Systems

ISO/IEC 14496-2:2004, Information technology — Coding of audio-visual objects — Part 2: Visual

ISO/IEC 14496-11:2005, Information technology — Coding of audio-visual objects — Part 11: Scene description and application engine

3 Symbols and abbreviated terms

List of symbols and abbreviated terms.

AFX Animation Framework eXtension

BIFS BInary Format for Scene

DIBR Depth-Image Based Representation

ES Elementary Stream

IBR Image-Based Rendering

NDT Node Data Type

OD Object Descriptor

VRML Virtual Reality Modelling Language


4 Animation Framework eXtension (AFX)

4.1 Introduction

“Most people think the word ‘animation’ means movement.

But it doesn’t. It comes from ‘animus’ which means ‘life or to live’.

Making it move is not animation, but just the mechanics of it.”

Frank Thomas and Ollie Johnston

Disney Animation: The Illusion of Life, 1981

MPEG-4, ISO/IEC 14496, is a multimedia standard that enables the composition of multiple audio-visual objects on a terminal. Audio and visual objects can come from natural sources (e.g. a microphone, a camera) or from synthetic ones (i.e. made by a computer); each source is called a medium or a stream. On their terminals, users can display, play, and interact with MPEG-4 audio-visual content, which can be downloaded beforehand or streamed from remote servers. Moreover, each object may be protected to ensure that a user has the right credentials before downloading and displaying it.

Unlike natural audio and video objects, computer graphics objects are purely synthetic. Mixing computer graphics objects with traditional audio and video enables augmented reality applications, i.e. applications mixing natural and synthetic objects. Examples of such content range from DVD menus and TV Electronic Programming Guides to medical and training applications, games, and so on.

Like other computer graphics specifications, MPEG-4 synthetic objects are organized in a scene graph based on VRML97 [1]. The scene graph is a directed acyclic graph in which nodes represent objects and branches represent their properties, called fields. Because each object can receive and emit events, two branches can be connected by means of a route, which propagates events from one field of one node to another field of another node. Like any other MPEG-4 media, scenes may receive updates from a server that modify the topology of the scene graph.

The Animation Framework eXtension (AFX) proposes a conceptual organization of synthetic models for computer animation, as well as specific compression schemes. The models defined in ISO/IEC 14496-11 and in this part fit into this organization (see Figure 2).

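[Figure 1 (diagram): MPEG-4 terminal architecture. DMIF and the Elementary Stream Interface feed decoding buffers (DB) and decoders for Audio, Video, OD, BIFS and the Animation Framework eXtension (AFX). The diagram also shows the Audio and Video composition buffers (CB), the decoded BIFS tree and decoded AFX objects, IPMP descriptors (IPMP-Ds), the IPMP elementary stream (IPMP-ES) with its decoding buffer, the IPMP System(s) with possible IPMP control points, and the DMUX, Composite and Render stages.]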

Figure 1 — Animation Framework and MPEG-4 Systems.


Figure 1 shows the position of the Animation Framework within the MPEG-4 terminal architecture. It extends the existing BIFS tree with new tools, and it defines the AFX streams that carry dedicated compressed object representations and a backchannel for view-dependent features.

4.2 The AFX model

To understand the AFX model, consider an example. Suppose one wants to build an avatar. The avatar consists of geometry elements that describe its legs, arms, head and so on. Simple geometric elements can be used and deformed to produce more physically realistic geometry. Then skin, hair and clothes are added. These may be physics-based models attached to the geometry. Whenever the geometry is deformed, these models deform as well and, thanks to their physics, they may produce wrinkles. Biomechanical models are used for motion, collision response, and so on. Finally, our avatar may exhibit special behaviors when it encounters objects in its world. It might also learn from experience: for example, if it touches a hot surface and gets hurt, next time it will avoid touching it.

This hierarchy also works in a top-to-bottom manner. If the avatar touches a hot surface, its behavior may be to retract its hand. Retracting the hand follows a biomechanical pattern. The speed of the movement is based on the physical properties of the hand linked to the rest of the body, which in turn modify the geometric properties that define the hand.

AFX defines six groups of components, following [38].

[Figure 2 (diagram): model layers labelled Cognitive, Behavior, Biomechanical, Physics, Modeling and Geometry, with Terminal and Animation labels and the annotation "Animation Framework eXtension – AFX 'effects'".]

Figure 2 — Models in computer games and animation [38].

a) Geometric component. These models capture the form and appearance of an object. Many characters in animations and games can be controlled quite efficiently at this low level. Due to the predictable nature of motion, building higher-level models for characters that are controlled at the geometric level is generally much simpler.

b) Modeling component. These models are an extension of geometric models and add linear and non-linear deformations to them. They capture transformations of the models without changing their original shape. Animations can be made by changing the deformation parameters independently of the geometric models.

c) Physical component. These models capture additional aspects of the world, such as an object's mass and inertia and how it responds to forces such as gravity. The use of physical models allows many motions to be created automatically and with unparalleled realism.


d) Biomechanical component. Real animals have muscles that they use to exert forces and torques on their own bodies. Once physical models of characters have been built, the characters can use virtual muscles to move themselves around. These models have their roots in control theory.

e) Behavioral component. A character exhibits a reactive behavior when its behavior is based solely on its perception of the current situation (i.e. with no memory of previous situations). Goal-directed behaviors can be used to define a cognitive character's goals. They can also be used to model flocking behaviors.

f) Cognitive component. If the character is able to learn from stimuli from the world, it may be able to adapt its behavior. These models are related to artificial intelligence techniques.

The AFX specification currently deals with the first four categories. Models of the last two categories are typically application-specific and often designed programmatically. Moreover, while the first four categories can be animated using existing tools such as interpolators, the last two categories have their own logic and cannot be animated the same way.

Each category contains many models suited to different applications. These range from simple models that require little processing power (low-level models) to more complex models (high-level models) that require more computation by the terminal. The VRML [1] and BIFS specifications provide low-level models that belong to the Geometry component. The exception is the Face and Body Animation tools, which belong to the Biomechanical component.

Higher-level components can be defined as providing a compact representation of functionality in a more abstract manner. Typically, this abstraction leads to mathematical models that need few parameters. These models cannot be rendered directly by a graphics card: internally, they are converted to low-level primitives that a graphics card can render. Besides a more compact representation, this abstraction often provides other functionalities, including but not limited to view-dependent subdivision, automatic level of detail, smoother graphical representation, scalability across terminals, and progressive local refinement.

For example, a subdivision surface can be subdivided based on the area viewed by the user. For animations, piecewise-linear interpolators require few computations but need a lot of data to represent a curved path. Higher-level animation models represent animation using piecewise-spline interpolators with fewer values, and they provide more control over the animation path and timeline.
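The following rough, illustrative calculation estimates that data cost. The sketch counts how many equal chords a piecewise-linear path needs to stay within a given tolerance of a circular arc. It relies on the fact that a chord subtending an angle θ deviates from a circle of radius r by at most r(1 − cos(θ/2)), the sagitta. The function name `linear_segments_for_arc` and the chosen tolerance are assumptions for illustration only and are not AFX syntax. For comparison, a single rational quadratic spline segment with three control points represents a quarter circle exactly.

```python
import math


def linear_segments_for_arc(radius: float, sweep: float, tolerance: float) -> int:
    """Smallest number of equal chords that approximate a circular arc of the
    given sweep angle (radians) with a maximum deviation <= tolerance.

    A chord subtending angle theta deviates from the arc by at most
    radius * (1 - cos(theta / 2)), the sagitta of that chord.
    """
    if radius <= 0 or sweep <= 0 or tolerance <= 0:
        raise ValueError("radius, sweep and tolerance must be positive")
    n = 1
    # Each of the n chords subtends sweep / n, so its half-angle is sweep / (2n).
    while radius * (1.0 - math.cos(sweep / (2.0 * n))) > tolerance:
        n += 1
    return n


if __name__ == "__main__":
    n = linear_segments_for_arc(1.0, math.pi / 2, 1e-3)
    # A piecewise-linear interpolator needs n + 1 key values for n segments.
    print(n, "segments,", n + 1, "keys")
```

With a unit radius and a tolerance of 10⁻³, a quarter circle needs 18 segments, which means 19 key values for a piecewise-linear interpolator. Tightening the tolerance increases that count further.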

The remainder of this document follows this conceptual organization, in the order in which an author typically creates content. The geometry is first defined, with or without solid modeling tools, and then texture is added to it. Objects can be deformed and animated using modeling and animation tools. Finally, avatars need biomechanical tools. Behavioral and Cognitive models can be implemented programmatically using JavaScript or MPEG-J, as defined in ISO/IEC 14496-11.

NOTE

Some generic tools originally developed within the Animation Framework eXtension have been relocated to ISO/IEC 14496-11/AMD1, along with other generic tools. These include:

⎯ Spline-based generic animation tools, called Animator nodes;

⎯ Optimized interpolator compression tools;

⎯ BitWrapper node that enables compressed representation of existing nodes;

⎯ Procedural textures based on fractal plasma.


4.3 Geometry tools

4.3.1 Introduction

Geometry tools consist of the following technologies:

⎯ NURBS, which consists of the following nodes: NurbsSurface, NurbsCurve, and NurbsCurve2D;

⎯ Subdivision surfaces, consisting of the following nodes: SubdivisionSurface, SubdivSurfaceSector and WaveletSubdivisionSurface. The latter enables wavelet-encoded details to be added to subdivision surfaces at different resolutions;

⎯ MeshGrid, which consists of the MeshGrid node.

4.3.2 Non-Uniform Rational B-Spline (NURBS)

4.3.2.1 Introduction

A Non-Uniform Rational B-Spline (NURBS) curve of degree $p > 0$ (and hence of order $p+1$) with control points $\{P_i\}$ ($0 \le i \le n-1$; $n \ge p+1$) is given by Equation 1 [37], [63], [88]:

$$C(u) = \sum_{i=0}^{n-1} R_{i,p}(u)\,P_i = \frac{\sum_{i=0}^{n-1} N_{i,p}(u)\,w_i\,P_i}{\sum_{i=0}^{n-1} N_{i,p}(u)\,w_i}$$

Equation 1 — NURBS curve definition.

The parameter $u \in [0, 1]$ sweeps the curve, and $\{R_{i,p}\}$ are its rational basis functions, i.e. $R_{i,p}(u) = N_{i,p}(u)\,w_i \big/ \sum_{j=0}^{n-1} N_{j,p}(u)\,w_j$. These are expressed in terms of two sets of quantities. The first is a set of non-negative (and not all zero) weights $\{w_i\}$. The second is $\{N_{i,p}\}$, the $p$th-degree B-Spline basis functions, which are defined on a knot sequence (knot vector) of length $m = n + p + 1$. This knot vector may be non-uniform but is always non-decreasing: $U = \{u_0, u_1, \ldots, u_{m-1}\}$, where $0 \le u_i \le u_{i+1} \le 1$ for all $0 \le i \le m-2$.

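The B-Spline basis functions are defined recursively using the Cox-de Boor formula:

$$N_{i,0}(u) = \begin{cases} 1 & \text{if } u_i \le u < u_{i+1}, \\ 0 & \text{otherwise;} \end{cases}$$

$$N_{i,p}(u) = \frac{u - u_i}{u_{i+p} - u_i}\,N_{i,p-1}(u) + \frac{u_{i+p+1} - u}{u_{i+p+1} - u_{i+1}}\,N_{i+1,p-1}(u),$$

where any quotient with a zero denominator (which arises with repeated knots) is taken to be 0.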
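The recursion above translates directly into code. The sketch below is a plain Python reading of the Cox-de Boor formula that uses the 0/0 = 0 convention. The function name `bspline_basis` is an illustrative choice. Because the degree-0 test uses half-open spans, every basis function evaluates to 0 at the very last knot, and practical evaluators usually handle that endpoint as a special case.

```python
def bspline_basis(i: int, p: int, u: float, knots: list[float]) -> float:
    """Return N_{i,p}(u) for the knot vector `knots` (Cox-de Boor recursion)."""
    if p == 0:
        # Degree 0: indicator of the half-open knot span [u_i, u_{i+1}).
        return 1.0 if knots[i] <= u < knots[i + 1] else 0.0

    left = 0.0
    denom = knots[i + p] - knots[i]
    if denom != 0.0:  # terms with a zero denominator (repeated knots) count as 0
        left = (u - knots[i]) / denom * bspline_basis(i, p - 1, u, knots)

    right = 0.0
    denom = knots[i + p + 1] - knots[i + 1]
    if denom != 0.0:
        right = (knots[i + p + 1] - u) / denom * bspline_basis(i + 1, p - 1, u, knots)

    return left + right


if __name__ == "__main__":
    knots = [0.0, 0.0, 0.0, 0.5, 1.0, 1.0, 1.0]  # p = 2, m = 7, so n = m - p - 1 = 4
    values = [bspline_basis(i, 2, 0.3, knots) for i in range(4)]
    # Inside the parameter domain the basis functions sum to 1 (partition of unity).
    print(values, sum(values))
```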

If $u_i = u_{i+1} = \ldots = u_{i+k-1}$, the knot $u_i$ is said to have multiplicity $k$. $C(u)$ is infinitely differentiable inside knot spans, i.e. for $u_i < u < u_{i+1}$. At the parameter value corresponding to a knot of multiplicity $k$, however, it is only $p-k$ times differentiable. Setting several consecutive knots to the same value $u_j$ therefore decreases the smoothness of the curve at $u_j$.

In general, knots cannot have multiplicity greater than $p$. The exception is the first and/or last knot of $U$, which can have multiplicity $p+1$, i.e. $u_0 = \ldots = u_p = 0$ and/or $u_{m-p-1} = \ldots = u_{m-1} = 1$. This causes $C$ to interpolate the corresponding endpoint(s) of the control polygon defined by $\{P_i\}$, i.e. $C(0) = P_0$ and/or $C(1) = P_{n-1}$. Therefore, a knot vector of the form

$$U = \{\underbrace{u_0 = 0, \ldots, 0}_{p+1},\; u_{p+1}, \ldots, u_{m-p-2},\; \underbrace{1, \ldots, 1 = u_{m-1}}_{p+1}\}$$

causes the curve to be endpoint interpolating, i.e. to interpolate both endpoints of its control polygon. Extreme knots, multiple or not, may enclose any non-decreasing subsequence of interior knots: $0 < u_i \le u_{i+1} < 1$.

An endpoint interpolating curve with no interior knots is a $p$th-degree Bézier curve. In such a curve, $U$ consists of $p+1$ zeroes followed by $p+1$ ones, with no other values in between. For example, a cubic Bézier curve can be described with four control points, of which the first and last lie on the curve, and the knot vector $U = \{0, 0, 0, 0, 1, 1, 1, 1\}$.
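The following sketch checks the endpoint-interpolation and Bézier remarks by evaluating Equation 1 for a cubic curve with knot vector {0, 0, 0, 0, 1, 1, 1, 1}. It assumes Cartesian control points with separate weights, not premultiplied homogeneous coordinates. It also closes the last non-empty knot span on the right so that u = 1 can be evaluated. `basis` and `nurbs_point` are hypothetical helper names.

```python
from typing import Sequence


def basis(i: int, p: int, u: float, knots: Sequence[float]) -> float:
    """Cox-de Boor N_{i,p}(u); terms with a zero denominator are treated as 0."""
    if p == 0:
        lo, hi = knots[i], knots[i + 1]
        if lo <= u < hi:
            return 1.0
        # Close the last non-empty span on the right so u == knots[-1] is covered.
        return 1.0 if (lo < hi and u == hi == knots[-1]) else 0.0
    left = right = 0.0
    if knots[i + p] != knots[i]:
        left = (u - knots[i]) / (knots[i + p] - knots[i]) * basis(i, p - 1, u, knots)
    if knots[i + p + 1] != knots[i + 1]:
        right = ((knots[i + p + 1] - u) / (knots[i + p + 1] - knots[i + 1])
                 * basis(i + 1, p - 1, u, knots))
    return left + right


def nurbs_point(u: float, degree: int, points: Sequence[Sequence[float]],
                weights: Sequence[float], knots: Sequence[float]) -> tuple:
    """Evaluate Equation 1: sum N_{i,p}(u) w_i P_i / sum N_{i,p}(u) w_i."""
    if len(knots) != len(points) + degree + 1:
        raise ValueError("knot vector length must be n + p + 1")
    num = [0.0] * len(points[0])
    den = 0.0
    for i, (pt, w) in enumerate(zip(points, weights)):
        b = basis(i, degree, u, knots) * w
        den += b
        for k, c in enumerate(pt):
            num[k] += b * c
    if den == 0.0:
        raise ValueError("u lies outside the curve's parameter domain")
    return tuple(c / den for c in num)


if __name__ == "__main__":
    P = [(0.0, 0.0), (1.0, 2.0), (3.0, 2.0), (4.0, 0.0)]
    U = [0.0] * 4 + [1.0] * 4  # cubic Bezier knot vector
    for u in (0.0, 0.5, 1.0):
        print(u, nurbs_point(u, 3, P, [1.0] * 4, U))  # first and last hit P0, P3
```

With unit weights and no interior knots, the basis functions reduce to the cubic Bernstein polynomials. The midpoint is therefore (P0 + 3P1 + 3P2 + P3)/8.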

NURBS can represent a very wide range of curves. In particular, rational functions can represent all conic curves exactly, including parabolas, hyperbolas and ellipses. Merely polynomial functions cannot do this; for example, they cannot represent circles, ellipses or hyperbolas exactly.
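A standard illustration of the conic claim is a quarter of the unit circle written as a single rational quadratic (degree 2) NURBS segment. It uses knot vector {0, 0, 0, 1, 1, 1}, control points (1, 0), (1, 1), (0, 1) and weights 1, √2/2, 1. Because this knot vector has no interior knots, the basis functions reduce to the quadratic Bernstein polynomials, which the sketch uses directly. The helper name `rational_quadratic` is hypothetical. Setting the middle weight to 1 produces a purely polynomial curve and moves the points off the circle.

```python
import math


def rational_quadratic(u, points, weights):
    """Point at u in [0, 1] on a rational quadratic Bezier segment, i.e. a
    degree-2 NURBS curve with knot vector {0, 0, 0, 1, 1, 1}."""
    b = ((1.0 - u) ** 2, 2.0 * u * (1.0 - u), u ** 2)  # quadratic Bernstein basis
    den = sum(bi * wi for bi, wi in zip(b, weights))
    x = sum(bi * wi * p[0] for bi, wi, p in zip(b, weights, points)) / den
    y = sum(bi * wi * p[1] for bi, wi, p in zip(b, weights, points)) / den
    return x, y


CONTROL = [(1.0, 0.0), (1.0, 1.0), (0.0, 1.0)]
RATIONAL_WEIGHTS = [1.0, math.sqrt(2.0) / 2.0, 1.0]  # exact quarter circle
POLYNOMIAL_WEIGHTS = [1.0, 1.0, 1.0]                  # ordinary quadratic Bezier

if __name__ == "__main__":
    for u in (0.0, 0.25, 0.5, 0.75, 1.0):
        x, y = rational_quadratic(u, CONTROL, RATIONAL_WEIGHTS)
        xp, yp = rational_quadratic(u, CONTROL, POLYNOMIAL_WEIGHTS)
        # Distance from the origin: 1 for the rational curve, not for the polynomial one.
        print(u, round(math.hypot(x, y), 12), round(math.hypot(xp, yp), 6))
```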

Other interesting properties of NURBS curves are the following:

⎯ Affine invariance: rotations, translations, scalings, and shears can be applied to the curve by applying them to $\{P_i\}$.

⎯ Convex hull property: with non-negative weights, the curve lies within the convex hull defined by $\{P_i\}$. The control polygon defined by $\{P_i\}$ represents a piecewise-linear approximation to the curve. As a general rule, the lower the degree, the more closely the NURBS curve follows its control polygon.

⎯ Local control: if the control point $P_i$ is moved or the weight $w_i$ is changed, only the portion of the curve swept when $u_i < u < u_{i+p+1}$ is affected by the change.

NURBS surfaces are defined by the tensor product of the B-Spline basis functions of two curves, of possibly different degrees ($p$ and $q$) and/or numbers of control points ($n$ and $l$):

$$C(u,v) = \frac{\sum_{i=0}^{n-1}\sum_{j=0}^{l-1} N_{i,p}(u)\,N_{j,q}(v)\,w_{i,j}\,P_{i,j}}{\sum_{i=0}^{n-1}\sum_{j=0}^{l-1} N_{i,p}(u)\,N_{j,q}(v)\,w_{i,j}}$$

Equation 2 — NURBS surface definition.

The two independent parameters $u, v \in [0, 1]$ parameterize the surface. The B-Spline basis functions are defined as above, and the resulting surface has the same properties as NURBS curves. Knot multiplicity may be used to introduce sharp features (corners, creases, etc.) in an otherwise smooth surface, or to make the surface interpolate the perimeter of its control polyhedron.
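The sketch below is a direct, unoptimized reading of Equation 2. As before, it assumes Cartesian control points $P_{i,j}$ with separate weights $w_{i,j}$, and the names `basis` and `nurbs_surface_point` are hypothetical. The example also exercises the statement, made for the NurbsSurface node, that scaling all weights by a common factor leaves the surface unchanged.

```python
def basis(i, p, u, knots):
    """Cox-de Boor N_{i,p}(u) on half-open spans; 0/0 terms are treated as 0."""
    if p == 0:
        return 1.0 if knots[i] <= u < knots[i + 1] else 0.0
    left = right = 0.0
    if knots[i + p] != knots[i]:
        left = (u - knots[i]) / (knots[i + p] - knots[i]) * basis(i, p - 1, u, knots)
    if knots[i + p + 1] != knots[i + 1]:
        right = ((knots[i + p + 1] - u) / (knots[i + p + 1] - knots[i + 1])
                 * basis(i + 1, p - 1, u, knots))
    return left + right


def nurbs_surface_point(u, v, p, q, points, weights, u_knots, v_knots):
    """Evaluate Equation 2 at (u, v); points[i][j] is P_{i,j}, weights[i][j] is w_{i,j}.

    Raises ZeroDivisionError if (u, v) lies outside the half-open parameter domain.
    """
    dim = len(points[0][0])
    num = [0.0] * dim
    den = 0.0
    for i in range(len(points)):
        nu = basis(i, p, u, u_knots)
        if nu == 0.0:
            continue  # local support: most tensor-product terms vanish
        for j in range(len(points[0])):
            b = nu * basis(j, q, v, v_knots) * weights[i][j]
            den += b
            for k in range(dim):
                num[k] += b * points[i][j][k]
    return tuple(c / den for c in num)


if __name__ == "__main__":
    U = [0.0, 0.0, 0.0, 1.0, 1.0, 1.0]  # biquadratic Bezier patch
    pts = [[(float(i), float(j), 0.0) for j in range(3)] for i in range(3)]
    ones = [[1.0] * 3 for _ in range(3)]
    threes = [[3.0] * 3 for _ in range(3)]
    print(nurbs_surface_point(0.25, 0.5, 2, 2, pts, ones, U, U))
    print(nurbs_surface_point(0.25, 0.5, 2, 2, pts, threes, U, U))  # same point
```

The control points lie on an evenly spaced planar grid. Because the Bernstein basis reproduces linear functions, the patch maps (u, v) to (2u, 2v, 0).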

4.3.2.2 NurbsCurve

4.3.2.2.1 Node interface

NurbsCurve { #%NDT=SFGeometryNode
eventIn MFInt32 set_colorIndex
exposedField SFColorNode color NULL
exposedField MFVec4f controlPoint []
exposedField SFInt32 tessellation 0 # [0, ∞)
field MFInt32 colorIndex [] # [-1, ∞)
field SFBool colorPerVertex TRUE
field MFFloat knot [] # (-∞, ∞)
field SFInt32 order 4 # [3, 34]
}

4.3.2.2.2 Functionality and semantics

The NurbsCurve node describes a 3D NURBS curve, which is displayed as a curved line, similarly to what is done with the IndexedLineSet primitive.

The order field defines the order of the NURBS curve, which is its degree plus one.


The controlPoint field defines a set of control points in a coordinate system where the weight is the last component. The number of control points must be greater than or equal to the order of the curve. All weight values must be greater than or equal to 0, and at least one weight must be strictly greater than 0. If the weight of a control point is increased above 1, that point is more closely approximated by the curve. However, the curve is not changed if all weights are multiplied by a common factor.

The knot field defines the knot vector. The number of knots must be equal to the number of control points plus the order of the curve, and the knots must be ordered non-decreasingly. Setting consecutive knots to the same value decreases the degree of continuity of the curve at that parameter value.

Let o be the value of the order field. Then o consecutive knots with the same value at the beginning (resp. end) of the knot vector cause the curve to interpolate the first (resp. last) control point. Away from its extremes, the knot vector may not contain more than o−1 consecutive knots of equal value. If the length of the knot vector is 0, a default knot vector consisting of o 0's followed by o 1's, with no other values in between, is used, and a Bézier curve of degree o−1 is obtained. A closed curve may be specified by repeating the starting control point at the end and specifying a periodic knot vector.
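The knot rules above can be checked mechanically. The sketch below validates a NurbsCurve knot field and returns the knot vector to use. The function name `nurbs_curve_knots` is hypothetical. The text does not say what happens when the knot field is empty but there are more control points than the order, and this sketch simply rejects that case as an assumption. The order range [3, 34] comes from the node interface.

```python
from itertools import groupby


def nurbs_curve_knots(knot: list[float], order: int, num_control_points: int) -> list[float]:
    """Validate a NurbsCurve knot field and return the knot vector to use
    (the default Bezier knot vector when `knot` is empty)."""
    if not 3 <= order <= 34:  # range stated in the node interface
        raise ValueError("order must lie in [3, 34]")
    if num_control_points < order:
        raise ValueError("need at least `order` control points")
    if not knot:
        # Default: `order` zeros followed by `order` ones (a Bezier curve).
        # Assumption: this default only fits when there are exactly `order` points.
        if num_control_points != order:
            raise ValueError("empty knot field requires num_control_points == order")
        return [0.0] * order + [1.0] * order
    if len(knot) != num_control_points + order:
        raise ValueError("len(knot) must equal num_control_points + order")
    if any(a > b for a, b in zip(knot, knot[1:])):
        raise ValueError("knots must be non-decreasing")
    # Lengths of runs of equal consecutive knot values.
    runs = [len(list(group)) for _, group in groupby(knot)]
    for index, run in enumerate(runs):
        at_extreme = index == 0 or index == len(runs) - 1
        limit = order if at_extreme else order - 1
        if run > limit:
            raise ValueError(f"knot multiplicity {run} exceeds the limit of {limit}")
    return list(knot)
```

For example, `nurbs_curve_knots([], 4, 4)` yields the cubic Bézier vector {0, 0, 0, 0, 1, 1, 1, 1}. An interior knot repeated four times with order 4 is rejected.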

The tessellation field gives the curve tessellator a hint about how many subdivision steps to use when approximating the curve with linear segments. For instance, if the value t of this field is greater than or equal to that of the order field, t can be interpreted as the absolute number of tessellation steps, whereas t = 0 lets the browser choose a suitable tessellation.

The fields color, colorIndex, colorPerVertex, and set_colorIndex have the same semantics as for IndexedLineSet, applied to the control points.

4.3.2.3 NurbsCurve2D

4.3.2.3.1 Node interface

NurbsCurve2D { #%NDT=SFGeometryNode
eventIn MFInt32 set_colorIndex
exposedField SFColorNode color NULL
exposedField MFVec3f controlPoint []
exposedField SFInt32 tessellation 0 # [0, ∞)
field MFInt32 colorIndex [] # [-1, ∞)
field SFBool colorPerVertex TRUE
field MFFloat knot [] # (-∞, ∞)
field SFInt32 order 4 # [3, 34]
}

4.3.2.3.2 Functionality and semantics

NurbsCurve2D is the 2D version of NurbsCurve. It follows the same semantics as NurbsCurve, with 2D control points whose last (third) component is the weight.

4.3.2.4 NurbsSurface

4.3.2.4.1 Node interface

NurbsSurface { #%NDT=SFGeometryNode
eventIn MFInt32 set_colorIndex
eventIn MFInt32 set_texCoordIndex
exposedField SFColorNode color NULL
exposedField MFVec4f controlPoint []
exposedField SFTextureCoordinateNode texCoord NULL
exposedField SFInt32 uTessellation 0 # [0, ∞)
exposedField SFInt32 vTessellation 0 # [0, ∞)
field MFInt32 colorIndex [] # [-1, ∞)
field SFBool colorPerVertex TRUE
field SFBool solid TRUE
field MFInt32 texCoordIndex [] # [-1, ∞)
field SFInt32 uDimension 4 # [3, 258]
field MFFloat uKnot [] # (-∞, ∞)
field SFInt32 uOrder 4 # [3, 34]
field SFInt32 vDimension 4 # [3, 258]
field MFFloat vKnot [] # (-∞, ∞)
field SFInt32 vOrder 4 # [3, 34]
}

4.3.2.4.2 Functionality and semantics

The NurbsSurface node describes a 3D NURBS surface. Similar 3D surface nodes are ElevationGrid and IndexedFaceSet; in particular, NurbsSurface extends the definition of IndexedFaceSet. An implementation that does not support NurbsSurface should still be able to display its control polygon as a set of (triangulated) quadrilaterals, which is the coarsest approximation of the NURBS surface.

The uOrder field defines the order of the surface in the u dimension, which is its degree in the u dimension plus one. Similarly, the vOrder field defines the order of the surface in the v dimension.

The uDimension and vDimension fields define the number of control points in the u and v dimensions respectively. Each must be greater than or equal to the corresponding order of the surface.

The controlPoint field defines a set of control points in a coordinate system where the weight is the last component. These control points form an array of dimension uDimension × vDimension, similar to the regular grid of an ElevationGrid, except that in a NurbsSurface the points need not be regularly spaced. The number of control points in each dimension must be greater than or equal to the corresponding order of the surface. Specifically, the three spatial coordinates (x, y, z) and the weight (w) of control point $P_{i,j}$, where $0 \le i < \mathrm{uDimension}$ and $0 \le j < \mathrm{vDimension}$ (with $\mathrm{uDimension} \ge \mathrm{uOrder}$ and $\mathrm{vDimension} \ge \mathrm{vOrder}$), are obtained as follows:

P[i,j].x = controlPoint[i + j*uDimension].x
P[i,j].y = controlPoint[i + j*uDimension].y
P[i,j].z = controlPoint[i + j*uDimension].z
P[i,j].w = controlPoint[i + j*uDimension].w
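The index mapping above can be expressed directly in code. In this Python sketch, which uses a hypothetical helper name and a list-of-lists layout chosen for illustration, u varies fastest in controlPoint, and the count checks mirror the requirements on uDimension and vDimension stated earlier.

```python
from typing import List, Sequence, Tuple

Vec4 = Tuple[float, float, float, float]  # (x, y, z, w), weight last


def control_grid(control_point: Sequence[Vec4], u_dimension: int, v_dimension: int,
                 u_order: int, v_order: int) -> List[List[Vec4]]:
    """Return P with P[i][j] == controlPoint[i + j*uDimension]."""
    if u_dimension < u_order or v_dimension < v_order:
        raise ValueError("each dimension must be >= the corresponding order")
    if len(control_point) != u_dimension * v_dimension:
        raise ValueError("controlPoint must hold uDimension x vDimension points")
    # i runs along u (fastest in the flat array), j runs along v.
    return [[control_point[i + j * u_dimension] for j in range(v_dimension)]
            for i in range(u_dimension)]
```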

All weight values must be greater than or equal to 0, and at least one weight must be strictly greater than 0. If the weight of a control point is increased above 1, the surface approximates that point more closely. However, the surface does not change if all weights are multiplied by a common positive factor.
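To see why scaling all weights leaves the surface unchanged, note that each surface point is a rational combination in which the weights appear in both the numerator and the denominator. The Python sketch below takes precomputed basis values N as input (for a surface these would be products of u and v basis values; the values used in the example are hypothetical) and applies the weight rules above.

```python
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]


def check_weights(weights: Sequence[float]) -> None:
    """Enforce the rules above: all weights >= 0 and at least one weight > 0."""
    if any(w < 0 for w in weights) or not any(w > 0 for w in weights):
        raise ValueError("weights must be >= 0 with at least one > 0")


def rational_point(basis: Sequence[float], points: Sequence[Vec3],
                   weights: Sequence[float]) -> Vec3:
    """Compute sum(N*w*P) / sum(N*w) for precomputed basis values N."""
    check_weights(weights)
    den = sum(n * w for n, w in zip(basis, weights))
    # A common factor on every w cancels between numerator and denominator.
    return tuple(sum(n * w * p[c] for n, w, p in zip(basis, weights, points)) / den
                 for c in range(3))
```

With basis values (0.25, 0.5, 0.25) and weights (1, 1, 1), multiplying every weight by 3 returns the same point, while raising only the middle weight to 4 moves the point toward the middle control point.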

The uKnot and vKnot fields define the knot vectors in the u and v dimensions, respectively; their semantics are analogous to those of the knot field of the NurbsCurve node.
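The knot semantics of NurbsCurve are not repeated in this subclause. Assuming the usual NURBS conventions, namely that a knot vector holds dimension + order non-decreasing values, a validator for uKnot or vKnot could look like the Python sketch below. The open uniform vector produced by open_uniform_knots is only a hypothetical default for illustration; this subclause does not say what a browser does with an empty knot field.

```python
from typing import List, Sequence


def check_knots(knots: Sequence[float], dimension: int, order: int) -> None:
    """Usual NURBS constraints: dimension + order values, non-decreasing."""
    if len(knots) != dimension + order:
        raise ValueError(f"expected {dimension + order} knots, got {len(knots)}")
    if any(a > b for a, b in zip(knots, knots[1:])):
        raise ValueError("knot values must be non-decreasing")


def open_uniform_knots(dimension: int, order: int) -> List[float]:
    """Hypothetical default: end knots repeated `order` times, uniform interior on [0, 1]."""
    interior = dimension - order  # number of interior knots
    inner = [(i + 1) / (interior + 1) for i in range(interior)]
    return [0.0] * order + inner + [1.0] * order
```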

The uTessellation and vTessellation fields give hints to the surface tessellator about the number of subdivision steps to use when approximating the surface with (triangulated) quadrilaterals. For instance, if the value t of the uTessellation field is greater than or equal to the value of the uOrder field, t can be interpreted as the absolute number of tessellation steps in the u dimension, whereas t = 0 lets the browser choose a suitable tessellation.
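A tessellator honouring these hints might proceed as in the following Python sketch. The fallback value browser_default is hypothetical, and the handling of 0 < t < order is an assumption, since the text above only gives t ≥ order and t = 0 as examples. Parameters are sampled uniformly over the valid range [knot[order - 1], knot[dimension]], and the resulting grid of samples is split into two triangles per quadrilateral.

```python
from typing import List, Sequence, Tuple


def tessellation_steps(t: int, order: int, browser_default: int = 16) -> int:
    """Interpret a uTessellation or vTessellation hint (browser_default is hypothetical)."""
    if t < 0:
        raise ValueError("tessellation hints must be >= 0")
    if t >= order:
        return t  # absolute number of tessellation steps
    # t == 0 lets the browser choose; 0 < t < order is not covered by the text,
    # so this sketch also falls back to the browser's own choice there.
    return browser_default


def sample_params(knots: Sequence[float], order: int, dimension: int,
                  steps: int) -> List[float]:
    """Return steps + 1 uniformly spaced parameters over [knots[order-1], knots[dimension]]."""
    lo, hi = knots[order - 1], knots[dimension]
    return [lo + (hi - lo) * s / steps for s in range(steps + 1)]


def grid_triangles(nu: int, nv: int) -> List[Tuple[int, int, int]]:
    """Two triangles per quad of an (nu + 1) x (nv + 1) grid of surface samples."""
    def idx(i: int, j: int) -> int:
        return i + j * (nu + 1)  # u varies fastest, as in controlPoint

    tris: List[Tuple[int, int, int]] = []
    for j in range(nv):
        for i in range(nu):
            a, b, c, d = idx(i, j), idx(i + 1, j), idx(i + 1, j + 1), idx(i, j + 1)
            tris += [(a, b, c), (a, c, d)]
    return tris
```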

The solid field is a rendering hint that indicates whether the surface is closed. If FALSE, two-sided lighting is enabled; if TRUE, only one-sided lighting is applied.

color, colorIndex, colorPerVertex, set_colorIndex, texCoord, texCoordIndex and set_texCoordIndex have the same functionality and semantics as for IndexedFaceSet; they apply to the control points defined in controlPoint, just as these fields apply to the coord points of an IndexedFaceSet.

4.3.3 Subdivision surfaces

4.3.3.1 Introduction

Subdivision is a recursive refinement process that splits the facets or vertices of a polygonal mesh (the initial “control hull”) to yield a smooth limit surface. The refined mesh obtained after each subdivision step is used as the control hull for the next step, so all successive (and hierarchically nested) meshes can be regarded as control hulls. The refinement of a mesh is performed both on its topology, as the vertex connectivity is made richer and richer, and on its geometry, as the new vertices are positioned in such a way that the angles formed by the new facets are smaller than those formed by the old facets.


Figure 3 — Nested control meshes.

Figure 3 shows an example of how subdivision is applied to a triangular mesh. At each step, one triangle is shaded to highlight the correspondence between one level and the next: each triangle is broken down into four new triangles, whose vertex positions are perturbed to yield a progressively smoother surface.
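The topological part of this refinement, the 1-to-4 split of each triangle, can be sketched in Python as follows. The sketch places each new vertex at the midpoint of its edge, which is what we assume subdivisionType 0 (midpoint) in the SubdivisionSurface node denotes; smoothing schemes such as Loop or butterfly additionally reposition vertices, which is not shown here.

```python
from typing import Dict, List, Tuple

Vec3 = Tuple[float, float, float]
Tri = Tuple[int, int, int]


def midpoint_subdivide(verts: List[Vec3], tris: List[Tri]) -> Tuple[List[Vec3], List[Tri]]:
    """One step of linear midpoint subdivision: each triangle becomes four."""
    new_verts = list(verts)
    edge_mid: Dict[Tuple[int, int], int] = {}  # shared edges get a single new vertex

    def midpoint(a: int, b: int) -> int:
        key = (min(a, b), max(a, b))
        if key not in edge_mid:
            new_verts.append(tuple((x + y) / 2 for x, y in zip(verts[a], verts[b])))
            edge_mid[key] = len(new_verts) - 1
        return edge_mid[key]

    new_tris: List[Tri] = []
    for a, b, c in tris:
        ab, bc, ca = midpoint(a, b), midpoint(b, c), midpoint(c, a)
        # Three corner triangles plus the central one, keeping the original orientation.
        new_tris += [(a, ab, ca), (ab, b, bc), (ca, bc, c), (ab, bc, ca)]
    return new_verts, new_tris
```

Applied to a tetrahedron (4 vertices, 6 edges, 4 faces), one step yields 4 + 6 = 10 vertices and 16 triangles, because each edge receives exactly one new vertex shared by its two incident faces.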

4.3.3.1.1 Piecewise Smooth Surfaces

Subdivision schemes for piecewise smooth surfaces can represent common modeling primitives, such as quadrilateral free-form patches with creases and corners.

A somewhat informal description of piecewise smooth surfaces is given here; for simplicity, only surfaces without self-intersections are considered. For a closed $C^1$-continuous surface in $\mathbb{R}^3$, each point has a neighborhood that can be smoothly deformed into an open planar disk D. A surface with a smooth boundary can be described in a similar way, but neighborhoods of boundary points can be smoothly deformed into a half-disk H with closed boundary (Figure 4). In order to allow piecewise smooth boundaries, two additional types of local charts are introduced: concave and convex corner charts, $Q_3$ and $Q_1$.

Figure 4 — The charts for a surface with piecewise smooth boundary.

Piecewise-smooth surfaces are constructed out of surfaces with piecewise smooth boundaries joined together. If two surface patches have a common boundary, but different normal directions along the boundary, the resulting surface has a sharp crease.


Two adjacent smooth segments of a boundary are allowed to be joined, producing a crease ending in a dart (cf. [40]). For dart vertices, an additional chart $Q_0$ is required; the surface near a dart can be deformed into this chart smoothly everywhere except at an open edge starting at the center of the disk.

4.3.3.1.2 Tagged meshes

Before the subdivision rules are described, this subclause describes the tagged meshes that the algorithms accept as input. These meshes represent piecewise-smooth surfaces with the features described above.

The complete list of tags is as follows. Edges can be tagged as crease edges. A vertex with incident crease edges receives one of the following tags:

⎯ Crease vertex: joins exactly two incident crease edges smoothly.

⎯ Corner vertex: connects two or more creases in a corner (convex or concave).

⎯ Dart vertex: causes the crease to blend smoothly into the surface; this is the only allowed type of crease termination at an interior non-corner vertex.
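The tag rules can be checked mechanically by counting the crease edges incident to each vertex. In the Python sketch below, crease edges are given as vertex-index pairs and tags as a dictionary; this representation is hypothetical and does not reproduce the encoding of creaseEdgeIndex. Requiring exactly one incident crease edge at a dart vertex is our reading of "the only allowed type of crease termination".

```python
from collections import Counter
from typing import Dict, Iterable, List, Tuple


def check_vertex_tags(crease_edges: Iterable[Tuple[int, int]],
                      tags: Dict[int, str]) -> List[str]:
    """Report mismatches between vertex tags and the number of incident crease edges."""
    incident: Counter = Counter()
    for a, b in crease_edges:
        incident[a] += 1
        incident[b] += 1
    problems: List[str] = []
    for v, n in sorted(incident.items()):
        tag = tags.get(v)
        if tag is None:
            problems.append(f"vertex {v}: {n} crease edge(s) but no tag")
        elif tag == "crease" and n != 2:
            problems.append(f"vertex {v}: crease vertex needs exactly 2 crease edges, has {n}")
        elif tag == "corner" and n < 2:
            problems.append(f"vertex {v}: corner vertex needs at least 2 crease edges, has {n}")
        elif tag == "dart" and n != 1:
            problems.append(f"vertex {v}: dart vertex ends exactly 1 crease edge, has {n}")
    return problems
```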

Figure 5 — Features: (a) concave corner, (b) convex corner, (c) smooth boundary/crease, (d) corner with two convex sectors.

Boundary edges are automatically assigned crease tags; boundary vertices that do not have a corner tag are assumed to be smooth crease vertices.
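These two boundary rules can be applied automatically before subdivision. The following Python sketch finds boundary edges as edges used by exactly one face, adds them to the crease set, and tags every boundary vertex without a corner tag as a crease vertex; the face-list and tag representations are hypothetical.

```python
from collections import Counter
from typing import Dict, List, Set, Tuple

Edge = Tuple[int, int]


def boundary_edges(faces: List[List[int]]) -> Set[Edge]:
    """Edges used by exactly one face (faces given as vertex-index loops)."""
    count: Counter = Counter()
    for f in faces:
        for a, b in zip(f, f[1:] + f[:1]):
            count[(min(a, b), max(a, b))] += 1
    return {e for e, n in count.items() if n == 1}


def auto_tag(faces: List[List[int]], crease_edges: Set[Edge],
             tags: Dict[int, str]) -> Tuple[Set[Edge], Dict[int, str]]:
    """Boundary edges become creases; boundary vertices not tagged corner become crease vertices."""
    bnd = boundary_edges(faces)
    creases = {(min(a, b), max(a, b)) for a, b in crease_edges} | bnd
    new_tags = dict(tags)
    for edge in bnd:
        for v in edge:
            if new_tags.get(v) != "corner":
                new_tags[v] = "crease"
    return creases, new_tags
```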

Crease edges divide the mesh into separate patches, several of which can meet in a corner vertex. At a corner vertex, the creases meeting at that vertex separate the ring of triangles around the vertex into sectors. Each sector of the mesh is labeled as a convex sector or a concave sector, indicating how the surface should approach the corner.

In all formulas, k denotes the number of faces incident at a vertex in a given sector. Note that this is different from the standard definition of the vertex degree: faces, rather than edges, are counted. Both quantities coincide for interior vertices with no incident crease edges. This number is referred to as the crease degree.

The only restriction on sector tags is that a concave sector must consist of at least two faces. An example of a tagged mesh is given in Figure 6.
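The crease degree k can be computed per sector from the cyclic order of edges around a vertex. The Python sketch below uses a hypothetical numbering in which face i lies between incident edges i and i + 1, and also checks the restriction on concave sectors. It shows where k differs from the vertex degree: a boundary vertex with 4 incident edges has only 3 faces, whereas for an interior vertex without creases both counts coincide.

```python
from typing import List, Sequence


def sector_sizes(num_edges: int, crease_positions: Sequence[int], interior: bool) -> List[int]:
    """Crease degree k of each sector around one vertex.

    Incident edges are numbered 0..num_edges-1 in cyclic order. An interior
    vertex has a closed fan (num_edges faces); a boundary vertex has an open
    fan (num_edges - 1 faces) whose first and last edges are boundary creases.
    """
    cuts = sorted(set(crease_positions))
    if interior:
        if len(cuts) <= 1:  # no crease, or a single crease ending in a dart
            return [num_edges]
        return [b - a for a, b in zip(cuts, cuts[1:])] + [cuts[0] + num_edges - cuts[-1]]
    cuts = sorted(set(cuts) | {0, num_edges - 1})
    return [b - a for a, b in zip(cuts, cuts[1:])]


def check_concave(k_values: Sequence[int], concave: Sequence[bool]) -> bool:
    """Sector tag restriction: every concave sector has at least two faces."""
    return all(k >= 2 for k, c in zip(k_values, concave) if c)
```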


Figure 6 — Crease edges meeting in a corner with two convex (light grey) and one concave (dark grey) sectors. The subdivision scheme modifies the rules for edges incident to crease vertices (e.g. e ) and corners (e.g. e ).

4.3.3.2 SubdivisionSurface

4.3.3.2.1 Node interface

SubdivisionSurface { #%NDT=SFGeometryNode, SFBaseMeshNode
  eventIn MFInt32 set_colorIndex
  eventIn MFInt32 set_coordIndex
  eventIn MFInt32 set_texCoordIndex
  eventIn MFInt32 set_creaseEdgeIndex
  eventIn MFInt32 set_cornerVertexIndex
  eventIn MFInt32 set_creaseVertexIndex
  eventIn MFInt32 set_dartVertexIndex
  exposedField SFColorNode color NULL
  exposedField SFCoordinateNode coord NULL
  exposedField SFTextureCoordinateNode texCoord NULL
  exposedField SFInt32 subdivisionType 0 # 0 – midpoint, 1 – Loop, 2 – butterfly,
                                         # 3 – Catmull-Clark
  exposedField SFInt32 subdivisionSubType 0 # 0 – Loop, 1 – Warren-Loop
  field MFInt32 invisibleEdgeIndex [] # [0, ∞)
                                      # extended Loop if non-empty
  exposedField SFInt32 subdivisionLevel 0 # [-1, ∞)
  # IndexedFaceSet fields
  field SFBool ccw TRUE
  field MFInt32 colorIndex [] # [-1, ∞)
  field SFBool colorPerVertex TRUE
  field SFBool convex TRUE
  field MFInt32 coordIndex [] # [-1, ∞)
  field SFBool solid TRUE
  field MFInt32 texCoordIndex [] # [-1, ∞)
  # tags
  field MFInt32 creaseEdgeIndex [] # [-1, ∞)
  field MFInt32 dartVertexIndex [] # [-1, ∞)
  field MFInt32 creaseVertexIndex [] # [-1, ∞)
  field MFInt32 cornerVertexIndex [] # [-1, ∞)
  # sector information
  exposedField MFSubdivSurfaceSectorNode sectors []
}
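The scheme selectors can be decoded from the codes listed in the comments of the node interface. The Python sketch below does so; treating subdivisionSubType as meaningful only for the Loop scheme, and applying the "extended Loop" comment on invisibleEdgeIndex only when subdivisionType is 1, are assumptions made for illustration.

```python
from typing import Sequence

# Codes copied from the comments in the SubdivisionSurface node interface.
SCHEMES = {0: "midpoint", 1: "Loop", 2: "butterfly", 3: "Catmull-Clark"}
LOOP_VARIANTS = {0: "Loop", 1: "Warren-Loop"}


def describe_scheme(subdivision_type: int, subdivision_sub_type: int,
                    invisible_edge_index: Sequence[int]) -> str:
    """Return a readable name for the selected subdivision scheme."""
    if subdivision_type not in SCHEMES:
        raise ValueError(f"unknown subdivisionType {subdivision_type}")
    name = SCHEMES[subdivision_type]
    if subdivision_type == 1:
        # Assumption: subdivisionSubType only refines the Loop scheme.
        name = LOOP_VARIANTS.get(subdivision_sub_type, name)
        if invisible_edge_index:  # "extended Loop if non-empty"
            name = "extended " + name
    return name
```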

4.3.3.2.2 Functionality and semantics

The SubdivisionSurface node is similar to the existing IndexedFaceSet node, with all fields relating to face normals and the creaseAngle field removed, since normals are generated by the subdivision algorithm at run time and their behavior at facet boundaries is control

...

INTERNATIONAL STANDARD ISO/IEC 14496-16

Second edition

2006-12-15

Information technology — Coding of

audio-visual objects —

Part 16:

Animation Framework eXtension (AFX)

Technologies de l'information — Codage des objets audiovisuels —

Partie 16: Extension du cadre d'animation (AFX)

Reference number

© ISO/IEC 2006


This CD-ROM/DVD contains:

1) the publication ISO/IEC 14496-16:2006 in portable document format (PDF), which can be viewed using Adobe® Acrobat® Reader; and

2) electronic attachments containing Animation Framework eXtension (AFX) software.


This second edition cancels and replaces the first edition (ISO/IEC 14496-16:2004), which has been technically revised. It also incorporates the amendment ISO/IEC 14496-16:2004/Amd.1:2006 and the Technical Corrigenda ISO/IEC 14496-16:2004/Cor.1:2005 and ISO/IEC 14496-16:2004/Cor.2:2005.

