ISO/IEC 42005:2025

Information technology — Artificial intelligence (AI) — AI system impact assessment

This document provides guidance for organizations performing artificial intelligence (AI) system impact assessments for individuals and societies that can be affected by an AI system and its foreseeable applications. It includes considerations for how and when to perform such assessments and at what stages of the AI system life cycle, as well as guidance for AI system impact assessment documentation. Additionally, this guidance includes how this AI system impact assessment process can be integrated into an organization’s AI risk management and AI management system. This document is intended for use by organizations developing, providing or using AI systems. This document is applicable to any organization, regardless of size, type and nature.

Technologies de l'information — Intelligence artificielle (IA) — Évaluation de l'impact des systèmes d'IA

General Information

- Status: Published

- Publication Date: 27-May-2025

- Technical Committee: ISO/IEC JTC 1/SC 42 - Artificial intelligence

- Drafting Committee: ISO/IEC JTC 1/SC 42 - Artificial intelligence

- Current Stage: 6060 - International Standard published

- Start Date: 28-May-2025

- Due Date: 09-Aug-2025

- Completion Date: 28-May-2025

Overview

ISO/IEC 42005:2025 - Information technology - Artificial intelligence (AI) - AI system impact assessment provides guidance for organizations to perform formal, documented AI system impact assessments that evaluate effects on individuals, groups and societies. The standard covers when and how to assess impacts across the AI lifecycle, how to document findings, and how to integrate the assessment process into an organization’s AI risk management and AI management system. It is intended for any organization developing, providing or using AI systems, regardless of size or sector.

Key topics and requirements

ISO/IEC 42005:2025 addresses practical elements required to build a repeatable impact-assessment capability:

- Developing and implementing the assessment process - establishing documented procedures and responsibilities.

- Integration with existing governance, risk and management processes (AI risk management, AI management system).

- Timing and scope - guidance on the life‑cycle stages at which to perform assessments and on defining assessment boundaries.

- Allocation of responsibilities and approval workflows.

- Thresholds and impact scales - defining sensitive or restricted uses and impact levels.

- Performing assessments and analysis - identifying actual and reasonably foreseeable impacts, including benefits and harms.

- Documentation requirements - comprehensive AI system information (purpose, intended/unintended uses, data quality, algorithms, models, deployment environment).

- Recording, reporting, monitoring and review - maintaining traceable records and updating assessments as systems evolve.

- Mitigation and measures - documenting measures to address harms and enhance benefits.

Practical applications and users

ISO/IEC 42005:2025 is intended for:

- AI developers and system integrators documenting impacts and controls.

- Service providers and platform operators assessing deployment risks.

- Compliance, legal and risk teams integrating AI impact assessments into organizational risk frameworks.

- Procurement and product managers requiring objective assessment evidence for third‑party AI systems.

- Regulators and auditors seeking a standardized approach to evaluate organizational practices.

Practical uses include assessing fairness, privacy, safety, environmental and societal impacts; informing design decisions; preparing compliance evidence; and supporting stakeholder transparency to build trustworthy AI.

Related standards

- ISO/IEC 22989 (AI concepts and terminology)

- ISO/IEC 23053 (ML framework)

- ISO/IEC 42001, ISO/IEC 23894, ISO/IEC 38507 (governance, risk and management system alignment)

ISO/IEC 42005:2025 helps organizations operationalize impact assessment as part of a broader AI governance and risk management ecosystem, improving transparency and accountability for AI deployments.

Frequently Asked Questions

ISO/IEC 42005:2025 is a standard published jointly by the International Organization for Standardization (ISO) and the International Electrotechnical Commission (IEC). Its full title is "Information technology — Artificial intelligence (AI) — AI system impact assessment". It provides guidance for organizations performing AI system impact assessments, covering when and how to perform them across the AI system life cycle, how to document them, and how to integrate them into AI risk management and the AI management system.

ISO/IEC 42005:2025 is classified under the following ICS (International Classification for Standards) categories: 35.020 - Information technology (IT) in general. The ICS classification helps identify the subject area and facilitates finding related standards.

Standards Content (Sample)

International Standard

ISO/IEC 42005

First edition

2025-05

Information technology — Artificial intelligence (AI) — AI system impact assessment

Technologies de l'information — Intelligence artificielle (IA) — Évaluation de l'impact des systèmes d'IA

Reference number

© ISO/IEC 2025

All rights reserved. Unless otherwise specified, or required in the context of its implementation, no part of this publication may be reproduced or utilized otherwise in any form or by any means, electronic or mechanical, including photocopying, or posting on the internet or an intranet, without prior written permission. Permission can be requested from either ISO at the address below or ISO’s member body in the country of the requester.

ISO copyright office

CP 401 • Ch. de Blandonnet 8

CH-1214 Vernier, Geneva

Phone: +41 22 749 01 11

Email: copyright@iso.org

Website: www.iso.org

Published in Switzerland

© ISO/IEC 2025 – All rights reserved

ii

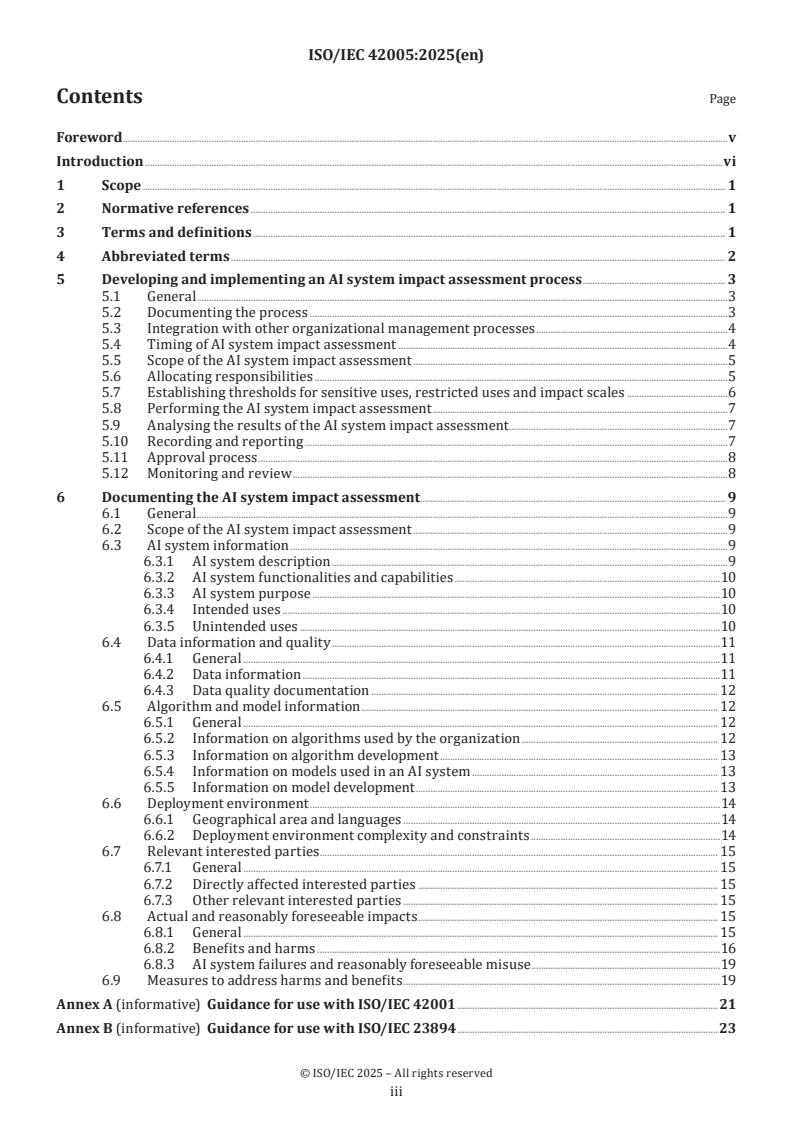

Contents

Foreword

Introduction

1 Scope

2 Normative references

3 Terms and definitions

4 Abbreviated terms

5 Developing and implementing an AI system impact assessment process

5.1 General

5.2 Documenting the process

5.3 Integration with other organizational management processes

5.4 Timing of AI system impact assessment

5.5 Scope of the AI system impact assessment

5.6 Allocating responsibilities

5.7 Establishing thresholds for sensitive uses, restricted uses and impact scales

5.8 Performing the AI system impact assessment

5.9 Analysing the results of the AI system impact assessment

5.10 Recording and reporting

5.11 Approval process

5.12 Monitoring and review

6 Documenting the AI system impact assessment

6.1 General

6.2 Scope of the AI system impact assessment

6.3 AI system information

6.3.1 AI system description

6.3.2 AI system functionalities and capabilities

6.3.3 AI system purpose

6.3.4 Intended uses

6.3.5 Unintended uses

6.4 Data information and quality

6.4.1 General

6.4.2 Data information

6.4.3 Data quality documentation

6.5 Algorithm and model information

6.5.1 General

6.5.2 Information on algorithms used by the organization

6.5.3 Information on algorithm development

6.5.4 Information on models used in an AI system

6.5.5 Information on model development

6.6 Deployment environment

6.6.1 Geographical area and languages

6.6.2 Deployment environment complexity and constraints

6.7 Relevant interested parties

6.7.1 General

6.7.2 Directly affected interested parties

6.7.3 Other relevant interested parties

6.8 Actual and reasonably foreseeable impacts

6.8.1 General

6.8.2 Benefits and harms

6.8.3 AI system failures and reasonably foreseeable misuse

6.9 Measures to address harms and benefits

Annex A (informative) Guidance for use with ISO/IEC 42001

Annex B (informative) Guidance for use with ISO/IEC 23894

Annex C (informative) Harms and benefits taxonomy

Annex D (informative) Aligning AI system impact assessment with other assessments

Annex E (informative) Example of an AI system impact assessment template

Bibliography

Foreword

ISO (the International Organization for Standardization) and IEC (the International Electrotechnical Commission) form the specialized system for worldwide standardization. National bodies that are members of ISO or IEC participate in the development of International Standards through technical committees established by the respective organization to deal with particular fields of technical activity. ISO and IEC technical committees collaborate in fields of mutual interest. Other international organizations, governmental and non-governmental, in liaison with ISO and IEC, also take part in the work.

The procedures used to develop this document and those intended for its further maintenance are described in the ISO/IEC Directives, Part 1. In particular, the different approval criteria needed for the different types of document should be noted. This document was drafted in accordance with the editorial rules of the ISO/IEC Directives, Part 2 (see www.iso.org/directives or www.iec.ch/members_experts/refdocs).

ISO and IEC draw attention to the possibility that the implementation of this document may involve the use of (a) patent(s). ISO and IEC take no position concerning the evidence, validity or applicability of any claimed patent rights in respect thereof. As of the date of publication of this document, ISO and IEC had not received notice of (a) patent(s) which may be required to implement this document. However, implementers are cautioned that this may not represent the latest information, which may be obtained from the patent database available at www.iso.org/patents and https://patents.iec.ch. ISO and IEC shall not be held responsible for identifying any or all such patent rights.

Any trade name used in this document is information given for the convenience of users and does not constitute an endorsement.

For an explanation of the voluntary nature of standards, the meaning of ISO specific terms and expressions related to conformity assessment, as well as information about ISO's adherence to the World Trade Organization (WTO) principles in the Technical Barriers to Trade (TBT) see www.iso.org/iso/foreword.html. In the IEC, see www.iec.ch/understanding-standards.

This document was prepared by Joint Technical Committee ISO/IEC JTC 1, Information technology, Subcommittee SC 42, Artificial intelligence.

Any feedback or questions on this document should be directed to the user’s national standards body. A complete listing of these bodies can be found at www.iso.org/members.html and www.iec.ch/national-committees.

Introduction

The growing application of systems, products, services and components of such that incorporate some form of artificial intelligence (AI) has led to a growing concern about how AI systems can potentially impact all levels of society. AI brings with it the promise of great benefits: automation of difficult or dangerous jobs, faster and more accurate analysis of large sets of data, advances in healthcare etc. However, there are concerns about reasonably foreseeable negative effects of AI systems, including potentially harmful, unfair or discriminatory outcomes, environmental harm and unwanted reductions in workforce.

The development and use of seemingly benign AI systems can have the potential to significantly impact (both positively and negatively) individuals, groups of individuals and the society as a whole. To foster transparency and trustworthiness of systems using AI technologies, an organization developing and using these technologies can take actions to assure affected interested parties that these impacts have been appropriately considered. AI system impact assessments play an important role in the broader ecosystem of governance, risk and conformity assessment activities, which together can create a system of trust and accountability.

ISO/IEC 38507, ISO/IEC 23894 and ISO/IEC 42001 all form important pieces of this ecosystem, for governance, risk and conformity assessment (via a management system) respectively. Each of these highlights the need for consideration of impacts to individuals and societies. A governing body can understand these impacts to ensure that the development and use of AI systems align to company values and goals. An organization performing risk management activities can understand reasonably foreseeable impacts to individuals and societies to appropriately incorporate into their overall organizational risk assessment. An organization developing or using AI systems can incorporate understanding and documentation about these impacts into its management system to ensure that the AI systems in question meet expectations of relevant interested parties, as well as internal and external requirements.

The act of performing AI system impact assessments and utilizing their documented outcomes are integral to activities at all organizational levels to produce AI systems that are trustworthy and transparent. To this end, this document provides guidance for an organization on how to both implement a process for completing such assessments and promote a common understanding of the components necessary to produce an effective assessment.

International Standard ISO/IEC 42005:2025(en)

Information technology — Artificial intelligence (AI) — AI system impact assessment

1 Scope

This document provides guidance for organizations performing artificial intelligence (AI) system impact assessments for individuals and societies that can be affected by an AI system and its foreseeable applications. It includes considerations for how and when to perform such assessments and at what stages of the AI system life cycle, as well as guidance for AI system impact assessment documentation.

Additionally, this guidance includes how this AI system impact assessment process can be integrated into an organization’s AI risk management and AI management system.

This document is intended for use by organizations developing, providing or using AI systems. This document is applicable to any organization, regardless of size, type and nature.

2 Normative references

The following documents are referred to in the text in such a way that some or all of their content constitutes requirements of this document. For dated references, only the edition cited applies. For undated references, the latest edition of the referenced document (including any amendments) applies.

ISO/IEC 22989, Information technology — Artificial intelligence — Artificial intelligence concepts and terminology

ISO/IEC 23053, Framework for Artificial Intelligence (AI) Systems Using Machine Learning (ML)

3 Terms and definitions

For the purposes of this document, the terms and definitions given in ISO/IEC 22989, ISO/IEC 23053 and the following apply.

ISO and IEC maintain terminology databases for use in standardization at the following addresses:

— ISO Online browsing platform: available at https://www.iso.org/obp

— IEC Electropedia: available at https://www.electropedia.org/

3.1

AI system impact assessment

formal, documented process by which the impacts to individuals, groups of individuals and societies are considered by an organization developing, providing, or using products or services utilizing artificial intelligence

3.2

intended use

use for which an AI system is designed

3.3

unintended use

use for which an AI system is not designed

3.4

intended users

groups of people or information systems for which an AI system is designed

[SOURCE: ISO 20282-1:2006, 3.12, modified — “people” has been replaced with “people or information systems” and “a product” has been replaced with “an AI system”.]

3.5

interested party

stakeholder

person or organization that can affect, be affected by, or perceive itself to be affected by a decision or activity

[SOURCE: ISO/IEC 42001:2023, 3.2]

3.6

reasonably foreseeable misuse

use of an AI system in a way not intended by the AI system developer or provider, but which can result from readily predictable behaviour of intended users

Note 1 to entry: Readily predictable human behaviour includes the behaviour of all types of users, e.g. the elderly, children and persons with disabilities. For more information, see ISO 10377.

Note 2 to entry: In the context of consumer safety, the term “reasonably foreseeable use” is increasingly used as a synonym for “intended use”, and “unintended use” as a synonym for “reasonably foreseeable misuse.”

Note 3 to entry: The specific definitions can vary somewhat, depending on the specific application area of the standard or regulation.

[SOURCE: ISO/IEC Guide 51:2014, 3.7, modified — “a product or system” has been replaced with “an AI system”, and “supplier” has been replaced with “AI system developer or provider”.]

3.7

restricted use

use of an AI system that is constrained by laws, organizational policies or contractual agreements

3.8

sensitive use

use of an AI system that can have a significant adverse impact on individuals, groups of individuals or societies

3.9

top management

person or group of people who directs and controls an organization at the highest level

Note 1 to entry: Top management has the power to delegate authority and provide resources within the organization.

Note 2 to entry: If the scope of the management system covers only part of an organization, then top management refers to those who direct and control that part of the organization.

[SOURCE: ISO/IEC 42001:2023, 3.3]

4 Abbreviated terms

AI artificial intelligence

BIA business impact assessment

EIA environmental impact assessment

FIA financial impact assessment

HRIA human rights impact assessment

IT information technology

ML machine learning

PIA privacy impact assessment

PII personally identifiable information

SIA security impact assessment

5 Developing and implementing an AI system impact assessment process

5.1 General

5.1.1 The organization should have a structured and consistent approach for performing and documenting AI system impact assessments. The process used can vary depending on a range of factors.

5.1.2 Internal factors include:

a) organizational context, governance, objectives, policies and procedures;

b) contractual obligations;

c) intended use of the AI system to be developed or used;

d) risk appetite.

5.1.3 External factors include:

a) applicable legal requirements, including prohibited uses of AI systems;

b) policies, guidelines and decisions from regulators that have an impact on the interpretation or enforcement of legal requirements in the development and use of AI systems;

c) incentives or consequences associated with the intended use of AI systems;

d) culture, traditions, values, norms and ethics with respect to development and use of AI systems;

e) competitive landscape and trends for new products and services using AI systems.

5.1.4 Clause 5 details possible elements of an AI system impact assessment process that the organization can consider when implementing such a process.

5.2 Documenting the process

The organization should document the process for completing AI system impact assessments. Such documentation should be kept up to date and made available, where appropriate, to relevant interested parties. Documentation of the process should include information on the topics in Clause 5. The intended results of the process documentation can vary depending on the organization’s needs and the type of the AI system, and can include, for example:

a) documented procedural guidance;

b) AI system impact alignment guide or template (refer to Annex D) or a standalone template (see Annex E);

c) use cases for awareness-raising and training;

d) input in various management reviews in the related AI management system;

e) completed AI system impact assessments and other artefacts from the assessment process.

Documentation should be maintained throughout the AI system impact assessment process, i.e. at the stages of design, redesign, deployment and evaluation, in line with the data retention policies of the organization and its legal obligations related to data retention.

5.3 Integration with other organizational management processes

The organization should document how the AI system impact assessment is integrated with other organizational processes. This can include considerations such as:

a) how the organization integrates the AI system impact assessment with organizational risk assessment;

b) how the organization integrates the AI system impact assessment with other types of impact assessments;

c) which organizational governance, risk and compliance processes are in place or planned for the treatment of reasonably foreseeable impacts.

NOTE Annexes A, B and D provide additional information.

5.4 Timing of AI system impact assessment

5.4.1 As part of establishing the AI system impact assessment process, the organization should determine and define when such assessments should be performed and to what level, or when a previous AI system impact assessment can be reused, repurposed or revised, and to what extent. Determining the timing of the AI system impact assessments can be impacted by factors such as, but not limited to:

a) applicable legal requirements;

b) contractual and professional obligations and duties;

c) internal structures, policies, processes, procedures and resources, including technology;

d) risk level of the AI system (the organization can consider ISO/IEC 23894:2023, 6.3.4 for additional guidance);

e) expectations of relevant interested parties, including customers;

f) internal AI system life cycle processes.

For additional guidance on the timing of AI system impact assessments and how they can be connected or aligned with other impact assessments conducted by the organization, see Annex D.

5.4.2 The organization should consider reassessment when changes arise in factors such as, but not limited to:

a) change in intended use of the AI system, including changes to the users of the AI system;

b) change in customer expectations;

c) change in the AI system itself, including changes to:

1) the data used;

2) the complexity or type of the AI system;

3) the performance of the AI system;

d) change in the operational environment of the AI system;

e) change in context surrounding the AI system, including changes to:

1) the applicable legal requirements;

2) contractual obligations;

3) internal policies;

4) the relevant interested parties of the AI system;

5) the locations and sectors in which the organization operates or anticipates operating.

AI risk assessments and AI system impact assessments should be conducted prior to implementing the change triggering reassessment.
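
The reassessment triggers in 5.4.2 can be checked mechanically if the organization keeps a structured snapshot of each AI system's context. The following is a minimal sketch under that assumption; the `SystemContext` fields and the `reassessment_triggers` helper are hypothetical names chosen for illustration and are not defined by this document.

```python
from dataclasses import dataclass, fields, replace


@dataclass(frozen=True)
class SystemContext:
    """Hypothetical snapshot of some of the factors listed in 5.4.2."""
    intended_use: str
    data_sources: tuple[str, ...]
    system_type: str
    operational_environment: str
    legal_requirements: tuple[str, ...]
    interested_parties: tuple[str, ...]
    locations: tuple[str, ...]


def reassessment_triggers(current: SystemContext, planned: SystemContext) -> list[str]:
    """Return the names of the factors that differ between two snapshots."""
    return [
        f.name
        for f in fields(SystemContext)
        if getattr(current, f.name) != getattr(planned, f.name)
    ]


# Illustrative data only.
baseline = SystemContext(
    intended_use="rank support tickets by urgency",
    data_sources=("ticket_text",),
    system_type="text classifier",
    operational_environment="internal helpdesk",
    legal_requirements=("data protection law",),
    interested_parties=("support agents", "customers"),
    locations=("DE",),
)
# Planned change: an extra data source and a new country of operation.
planned = replace(
    baseline,
    data_sources=("ticket_text", "call_transcripts"),
    locations=("DE", "FR"),
)

triggers = reassessment_triggers(baseline, planned)
if triggers:
    # Per 5.4.2, the assessment is conducted before the change is implemented.
    print("Reassess before implementing the change; changed factors:", triggers)
```

Comparing a planned snapshot against the current one, rather than detecting changes after the fact, reflects the recommendation that assessments precede the change.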

5.4.3 Timing considerations can include:

a) how often AI system impact assessments are performed;

b) at what stage of the AI system life cycle the AI system impact assessment is performed;

c) how frequently the AI system impact assessment is updated;

d) under what circumstances a new AI system impact assessment or an update is needed;

e) what other impact assessments are linked to the AI system impact assessment.

5.4.4 The organization should consider whether it uses tools for triaging when an AI system impact assessment is required. For example, if the organization determines that AI system impact assessments are only to be done on “high-risk” AI systems, it should document as part of the process what constitutes a “high-risk” AI system and what triggers the need for an impact assessment. A triaging process can require a briefer version of the AI system impact assessment to determine if the AI system is high-risk and requires a full AI system impact assessment.
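
A triaging step like the one described in 5.4.4 could be sketched as a short screening questionnaire evaluated against documented criteria. The questions, the `LARGE_POPULATION` cut-off and the decision rules below are assumptions for illustration; this document leaves the definition of “high-risk” to the organization.

```python
from dataclasses import dataclass


@dataclass
class TriageAnswers:
    """Hypothetical short-form screening questions (not taken from the standard)."""
    affects_legal_or_financial_status: bool
    fully_automated_decision: bool
    processes_pii: bool
    affected_people_estimate: int


# Assumed organizational cut-off; it would be documented as part of the process.
LARGE_POPULATION = 10_000


def is_high_risk(answers: TriageAnswers) -> bool:
    """Return True when the documented criteria call for a full assessment."""
    if answers.affects_legal_or_financial_status and answers.fully_automated_decision:
        return True
    if answers.processes_pii and answers.affected_people_estimate >= LARGE_POPULATION:
        return True
    return False


# Illustrative systems only.
menu_bot = TriageAnswers(False, True, False, 300)
lending_model = TriageAnswers(True, True, True, 50_000)

for name, answers in [("menu_bot", menu_bot), ("lending_model", lending_model)]:
    outcome = "full assessment" if is_high_risk(answers) else "triage record only"
    print(f"{name}: {outcome}")
```

Keeping the criteria in one small function makes it straightforward to document what triggers a full assessment and to review that definition over time.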

5.5 Scope of the AI system impact assessment

The organization should define the scope of the AI system impact assessment, including the applicability and the boundaries of the AI system impact assessments considering the internal and external factors provided in 5.1, the expectations of relevant interested parties and the reasonably foreseeable impacts on individuals, groups of individuals or societies. The scope of an AI system impact assessment can include, but is not limited to:

a) the entire AI system or a component of the AI system that provides functionalities explicitly useable by users (e.g. when a change is made to one or more components of the AI system);

b) a description of the role of the organization within the AI ecosystem (e.g. data provider, model provider, service provider or product provider).

If a system is composed of interconnected AI systems, the organization should consider whether to perform a single AI system impact assessment.

NOTE Changes to an AI system component can have implications to the overall AI system impact on various interested parties.

5.6 Allocating responsibilities

The organization should ensure that the responsibilities for the AI system impact assessment are assigned and communicated within the organization. The relevant responsibilities depend on multiple factors, including the nature of the AI system impact assessment, its scope and its extent, and the existence of a previous assessment, and can include responsibilities for:

a) establishing the scope of the assessment;

b) ensuring the allocation of appropriate resources;

c) ensuring the availability of documented information;

d) liaising with the relevant interested parties that can contribute to the assessment (e.g. groups and personnel responsible for research and development, marketing and sales, security and operations, legal personnel, labour representatives and other relevant interested parties);

e) reporting and communication of the results or any relevant information to other organizational processes or functions;

f) establishing the approval process and escalations;

g) ensuring the availability of the AI system impact assessment to relevant management reviews within the AI management system and the organization.

The assignment of responsibilities should consider the required experience and competency, the relevance of the role and the necessary access to information.

The organization should ensure that the assignment of responsibilities takes into account the relevant need for a multidisciplinary approach to consider impacts that can be reasonably foreseen and addressed throughout the AI system’s life cycle.

NOTE Relevant disciplines can include engineering, health and safety, human rights, ethics and social sciences such as anthropology, sociology and psychology.

5.7 Establishing thresholds for sensitive uses, restricted uses and impact scales

A critical part of the AI system impact assessment process is ensuring that thresholds, particularly around AI system use, are documented. The organization should define those thresholds based on the context in which it operates. This can include considerations such as:

a) applicable legal requirements;

b) expectations of relevant interested parties;

c) state of the art;

d) benefits of the AI system;

e) cultural, labour and societal norms;

f) applicable AI ethical frameworks.

Depending on the types and number of thresholds, the organization can implement additional processes, including reviews and approvals. For example, the organization can decide that certain uses or reasonably foreseeable misuses are sensitive or prohibited by organizational policy. Legal or other external requirements can further determine the sensitivity of uses or reasonably foreseeable misuses of an AI system. If an AI system impact assessment indicates that its planned use falls under a “sensitive” or a “restricted” category, the organization should document what the next steps are as part of the overall process.

EXAMPLE 1 An example of a sensitive use case is an AI system designed to automate lending decisions, as such systems can have significant adverse financial impacts on individuals.

EXAMPLE 2 If a sensitive or restricted use is identified, then such uses can be escalated to management (including approvals). Consider an AI system which heavily impacts rights, particularly those of children, and with adverse impacts that cannot be mitigated by technical improvements. Such issues can be escalated to decide whether to review the development of this AI system due to adverse impact.
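
The link between a use category and its documented next steps, as discussed above, can be sketched as a simple lookup. The category names follow the terms “sensitive use” and “restricted use” in Clause 3; the policy tables, the example uses and the wording of the next steps are hypothetical and would come from the organization's own policy.

```python
from enum import Enum


class UseCategory(Enum):
    STANDARD = "standard"
    SENSITIVE = "sensitive"
    RESTRICTED = "restricted"


# Hypothetical policy tables maintained by the organization.
SENSITIVE_USES = {"automated lending decisions"}
RESTRICTED_USES = {"biometric identification of customers"}

# Hypothetical next steps documented as part of the overall process.
NEXT_STEPS = {
    UseCategory.STANDARD: "proceed with the regular review",
    UseCategory.SENSITIVE: "escalate to management for approval",
    UseCategory.RESTRICTED: "pause and escalate to decide whether development continues",
}


def categorize(planned_use: str) -> UseCategory:
    """Map a planned use to a category; restricted takes precedence over sensitive."""
    if planned_use in RESTRICTED_USES:
        return UseCategory.RESTRICTED
    if planned_use in SENSITIVE_USES:
        return UseCategory.SENSITIVE
    return UseCategory.STANDARD


for use in ("automated lending decisions", "spell checking"):
    category = categorize(use)
    print(f"{use}: {category.value} -> {NEXT_STEPS[category]}")
```

In practice the classification would rarely be an exact string match; the point of the sketch is that every category has a documented, reviewable next step.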

The organization should also consider how overall impact scales are determined and reasonably foreseeable impacts are calculated. The results of an AI system impact assessment can indicate, for example, that intended uses for an AI system are not sensitive but can affect a large population of users, and that should factor into the impact scale and next procedural steps the organization determines in the AI system impact assessment process.

The organization should consider the magnitude of reasonably foreseeable impacts when defining the various pieces of the AI system impact assessment. The organization should have criteria for the types of reasonably foreseeable impacts that can be an outcome of the AI system. For example, to assess the magnitude of reasonably foreseeable impacts, the AI system impact assessment can include criteria for rating impacts as severe if they can lead to high adverse consequences for the organization, for groups of individuals or for societies (see, for example, Section 3.2 of Reference [16]).
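
One way to make an impact scale concrete is to combine the severity of an impact with the size of the affected population, so that a low-severity use reaching many people is not rated as negligible. The sketch below is an assumption-laden illustration: the severity levels, the population cut-offs and the scale labels are hypothetical and are not prescribed by this document.

```python
# Hypothetical severity levels and their weights.
SEVERITY = {"low": 1, "moderate": 2, "high": 3}


def reach_level(affected_people: int) -> int:
    """Map the affected population to a reach level (assumed cut-offs)."""
    if affected_people >= 100_000:
        return 3
    if affected_people >= 1_000:
        return 2
    return 1


def impact_scale(severity: str, affected_people: int) -> str:
    """Combine severity and reach into a coarse, assumed three-step scale."""
    score = SEVERITY[severity] * reach_level(affected_people)
    if score >= 6:
        return "severe"
    if score >= 3:
        return "significant"
    return "limited"


# A non-sensitive use that affects a large population still moves up the scale.
print(impact_scale("low", 500_000))
print(impact_scale("high", 5_000))
print(impact_scale("low", 10))
```

A multiplicative score is only one possible design; an organization could equally use a lookup matrix, which is easier to justify entry by entry during review.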

5.8 Performing the AI system impact assessment

In performing the AI system impact assessment, the organization should consider, as appropriate:

a) identification of reasonably foreseeable AI system impacts (such as impact to human rights and

fundamental rights) to all identified individuals, groups of individuals and to societies. Both beneficial

impacts and harmful impacts should be considered.

b) further considerations include, but are not limited to:

1) how such impacts also translate into organizational risks;

2) how multiple AI-related organizational objectives can impact groups of individuals and societies, for

example, as outlined in Section 3.2 of Reference [16].

c) identifying impacts to individuals and groups of individuals, as outlined in Reference [16] and in

Section 3.3 of Reference [16], periodically or as appropriate, for example, as early as possible and at identified stages of

the AI system life cycle.

d) assessment of the magnitude and likelihood of identified impacts and determination of how these impacts will

be addressed as part of the risk management process.

Inputs from diverse communities should be encouraged and integrated into the assessment as they can add

varied perspectives.
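One possible way to record the outcome of item d) is sketched below: each identified impact carries a magnitude and a likelihood, and harmful impacts are ordered by their product before being handed to the risk management process. The 1 to 5 scales, the product as scoring rule and all names are assumptions made for illustration; the document does not prescribe a scoring method.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Impact:
    description: str
    magnitude: int   # assumed scale: 1 (minor) to 5 (severe)
    likelihood: int  # assumed scale: 1 (rare) to 5 (almost certain)
    beneficial: bool = False

    @property
    def score(self) -> int:
        # Simple product; other combinations are equally valid.
        return self.magnitude * self.likelihood


def prioritize(impacts: list[Impact]) -> list[Impact]:
    """Return harmful impacts, highest score first, for risk treatment."""
    harmful = [i for i in impacts if not i.beneficial]
    return sorted(harmful, key=lambda i: i.score, reverse=True)
```

Beneficial impacts stay in the record, in line with item a), but are left out of the ranking because they do not call for risk treatment.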

5.9 Analysing the results of the AI system impact assessment

The results of the AI system impact assessment can play a crucial role in the responsible use and development

of AI systems. The assessment should be analysed and incorporated into both technical and management

decisions.

On a technical level, analysis of AI system impact assessment results can be used to improve product or

service quality, build in safeguards against unintended use or reasonably foreseeable misuse, improve

safety and robustness of AI systems, etc. It can also include determining the types of measures to address

benefits and harm as detailed in 6.8.2. Additionally, the results of the assessment should be compared to

the thresholds established in 5.7 and if the thresholds are not met, an action plan to remediate should be

identified.
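The comparison against thresholds can be sketched as follows. The fragment assumes that each impact dimension has a numeric score where a higher value means a worse outcome, and that a threshold counts as "not met" when the score exceeds it; both conventions, and the dimension names in the usage example, are hypothetical.

```python
def dimensions_to_remediate(
    results: dict[str, int], thresholds: dict[str, int]
) -> list[str]:
    """List the impact dimensions whose score exceeds its threshold."""
    # Dimensions without a defined threshold are skipped in this sketch.
    return sorted(
        name
        for name, score in results.items()
        if name in thresholds and score > thresholds[name]
    )
```

For example, `dimensions_to_remediate({"fairness": 7, "privacy": 2}, {"fairness": 5, "privacy": 5})` returns `["fairness"]`, which would then be the starting point for an action plan.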

On a management level, analysis can be used to inform the organizational risk management processes on

foreseeable impacts of AI systems. This can include consideration of individual AI system impact assessments,

but also analysing several assessments holistically to determine how trends can affect organizational risks

and objectives at a broad level.

5.10 Recording and reporting

5.10.1 The organization should define and document expectations for how the AI system impact assessment

is to be recorded, as well as expectations for reporting the results of the AI system impact assessment and

measures taken to address the identified impacts. The format of the assessment can depend on the needs of

the organization, and can be captured in a document template, a system, or through any other method that

enables recording and reporting.

5.10.2 The organization can have both internal and external reporting needs. Internal reporting

considerations can include reporting to:

a) top management;

b) personnel and persons doing work under the organization, or, where appropriate, their representatives;

c) AI producers;

d) AI partners.

5.10.3 External reporting should be commensurate with who the relevant interested parties are, their

expectations and the need for transparency, whilst ensuring that information is not inappropriately divulged

from a security, privacy or confidentiality perspective. External reporting considerations can include

reporting to:

a) relevant authorities, including policy makers and regulators;

b) AI customers;

c) AI partners;

d) individuals, groups of individuals or societies affected by the AI system.

5.10.4 Reporting of AI system impact assessment to relevant interested parties can include:

a) actual or reasonably foreseeable impact information related to the intended AI system use cases, as well

as other potentially beneficial uses and reasonably foreseeable misuses (at least at a broad level);

b) as many dimensions of identified impacts as appropriate, including as many of the types of impacts

outlined in 6.8 as reasonably possible;

c) measures taken to address the identified impacts.

When reporting information on reasonably foreseeable misuses, the organization can omit details that can

be useful to attackers.
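A minimal sketch of this kind of audience-dependent reporting is given below. It assumes that each misuse entry is stored with a broad summary and a separate technical detail, and that external reports replace the detail with a short notice so that the entry remains readable. The class, field and audience names are illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MisuseEntry:
    summary: str           # broad-level description, suitable for sharing
    technical_detail: str  # can be useful to attackers; internal only


def render(entries: list[MisuseEntry], audience: str) -> list[str]:
    """Render misuse entries for an 'internal' or any other audience."""
    lines = []
    for entry in entries:
        if audience == "internal":
            lines.append(f"{entry.summary}: {entry.technical_detail}")
        else:
            # Keep the entry visible but withhold the sensitive part.
            lines.append(f"{entry.summary} (details withheld for security reasons)")
    return lines
```

Keeping the summary in every view means external readers can still see that a misuse was considered, even when its specifics are not disclosed.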

5.10.5 Reports should be structured and worded such that they can provide the information needed by

relevant interested parties to assess where necessary the AI system’s compliance with legal requirements

related to impact assessments. They should also be structured and worded in a way that, where certain

portions are unavailable for intellectual property protection or security purposes, they remain

comprehensible.

5.11 Approval process

The organization should document any approvals required as part of the AI system impact assessment

process. This can include approvals related to exceeding established thresholds (see 5.7) or a final approval

of the overall AI system impact assessment. Considerations can include:

a) when approvals are required (e.g. when a threshold is exceeded, after the AI system impact

assessment is completed);

b) who has the responsibility for approval (this can vary depending on the type of approval);

c) whether external approvals are required in any situations (e.g. regulatory, external relevant interested

parties).

5.12 Monitoring and review

5.12.1 The organization should define and document processes for monitoring and review of AI system

impact assessments, including the following:

a) when they are performed (whether on an established cadence or triggered by events, or both);

b) who performs the monitoring and review;

c) what activities the monitoring and review consist of.
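Item a) above allows reviews on an established cadence, on events, or both. The following sketch shows one way to combine the two; the yearly interval and the event names are assumptions chosen for illustration, not values from this document.

```python
from datetime import date, timedelta

# Assumed review cadence; an organization sets its own interval.
REVIEW_INTERVAL = timedelta(days=365)

# Hypothetical events that trigger an out-of-cycle review.
TRIGGER_EVENTS = {"model_retrained", "new_intended_use", "incident_reported"}


def review_due(last_review: date, today: date, events: set[str]) -> bool:
    """Return True if the cadence has elapsed or a trigger event occurred."""
    overdue = today - last_review >= REVIEW_INTERVAL
    triggered = bool(events & TRIGGER_EVENTS)
    return overdue or triggered
```

Who performs the review and what it consists of, items b) and c), would be documented alongside such a rule rather than inside it.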

5.12.2 The AI system impact assessment review output should include decisions related to:

a) continual improvement opportunities and any need for changes to the AI system impact assessment

planning and implementation;

b) changes to the risk assessment and risk treatment processes;

c) changes to the AI objectives;

d) changes to the thresholds for sensitive uses, restricted uses, and impact scales.

6 Documenting the AI system impact assessment

6.1 General

This clause contains guidance that can be included in a documented AI system impact assessment. The

organization should determine its needs based on its context and not all of the guidance in this clause is

applicable to every organization. For example, it is possible that an organization implementing an AI system

developed by a third party does not have details on the data (6.4) or algorithm information (6.5).

6.2 Scope of the AI system impact assessment

The scope of the AI system impact assessment should describe the AI system under consideration, its

foreseeable impacts, and internal and external influences that affect these impacts. The scope of the AI

system impact assessment should describe the AI system with the following:

a) an identification of the AI system under consideration;

b) an identification of individuals, groups of individuals and societies that can be affected by the AI system,

including their needs and expectations as relevant to the AI system impact assessment and as reasonably

identifiable by the organization;

c) a description of intended uses and use case types;

d) a list of reasonably foreseeable misuses that can affect such identification of individuals, groups of

individuals and societies.

If relevant, the scope description should clearly indicate exceptions from the items listed in a) to d).

The scope of the impact assessment can include or be complemented by other impact assessments (e.g.

human rights impact assessment or human rights due diligence procedures, see Annex D), based on different

parts of the AI system information as described in 6.3.
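The scope items a) to d) can be captured in a simple record, as in the sketch below, which also reports which items are still empty. The class and field names are hypothetical, and the `exceptions` field is an assumed place to note the exceptions mentioned above.

```python
from dataclasses import dataclass, field


@dataclass
class AssessmentScope:
    system_id: str = ""                                            # item a)
    affected_parties: list[str] = field(default_factory=list)      # item b)
    intended_uses: list[str] = field(default_factory=list)         # item c)
    foreseeable_misuses: list[str] = field(default_factory=list)   # item d)
    exceptions: list[str] = field(default_factory=list)            # optional notes

    def missing_items(self) -> list[str]:
        """Return the names of scope items a) to d) that are still empty."""
        required = (
            "system_id",
            "affected_parties",
            "intended_uses",
            "foreseeable_misuses",
        )
        return [name for name in required if not getattr(self, name)]
```

A scope created with only a system identifier and one intended use would report `affected_parties` and `foreseeable_misuses` as missing, prompting the organization either to fill them in or to record an exception.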

6.3 AI system information

6.3.1 AI system description

The AI system impact assessment should include a basic description of the AI system, describing what the

AI system does and, at a high level, how it works. The description should include what kind of capabilities

the AI system has, to give potential reviewers the necessary context to understand the AI system and the

environment in which it operates. The AI system description can include additional detail as determined by

the organization, such as:

a) overview of the AI system architecture;

b) technical requirements and specifications;

c) demonstrations and proofs of concept.

6.3.2 AI system functionalities and capabilities

The documentation on the AI system features should be a more specific and detailed description of the

functionalities and capabilities, and whether they are current or planned. This can include a high-level

description of, for example:

a) predictions, types of data, algorithms and models used by the AI system (refer also to 6.4 and 6.5);

b) user interaction;

c) hardware and software configurations;

d) alerts and thresholds.

In the documentation of AI system functionalities and capabilities, the organization should identify and limit

the disclosure of the technical details to specific groups of individuals to reduce the possibility of misuses.

Other documentation to consider related to AI system functionalities and capabilities is the dependence on

or relationship to other systems and their functionalities and capabilities; for example, if the AI system being

documented relies on a model that is part of another product or system.

6.3.3 AI system purpose

The purpose of the AI system should be documented to help potential reviewers understand why the

organization is building or using the AI system and how the AI technology contributes to achieving these

objectives. When documenting the purpose of the AI system, the organization should consider the following:

a) the end user or primary customer of the AI system;

b) how the AI system addresses user needs;

c) the value proposition of the AI system;

d) any trade-offs made in relation to the decision to use the AI system.

6.3.4 Intended uses

The impact of the AI system on individuals, groups of individuals and societies depends in part on its

intended uses. Intended uses can be detailed by use cases or scenarios, which can include:

a) what the AI system is designed to do or achieve for specific groups of end users or customers;

b) where and when end users can use the AI system;

c) whether there are limitations on the AI system (including temporal limitations such as the time period

the AI system is supposed to be in operation).

AI systems an

...
