ISO/IEC 18039:2019

Information technology — Computer graphics, image processing and environmental data representation — Mixed and augmented reality (MAR) reference model

This document defines the scope and key concepts of mixed and augmented reality, the relevant terms and their definitions and a generalized system architecture that together serve as a reference model for mixed and augmented reality (MAR) applications, components, systems, services and specifications. This architectural reference model establishes the set of required sub-modules and their minimum functions, the associated information content and the information models to be provided and/or supported by a compliant MAR system. The reference model is intended for use by current and future developers of MAR applications, components, systems, services or specifications to describe, compare, contrast and communicate their architectural design and implementation. The MAR reference model is designed to apply to MAR systems independent of specific algorithms, implementation methods, computational platforms, display systems and sensors or devices used. This document does not specify how a particular MAR application, component, system, service or specification is designed, developed or implemented. It does not specify the bindings of those designs and concepts to programming languages or the encoding of MAR information through any coding technique or interchange format. This document contains a list of representative system classes and use cases with respect to the reference model.

Technologies de l'information — Infographie, traitement de l'image et représentation des données environnementales — Modèle de référence en réalité mixte et augmentée

General Information

- Status: Published

- Publication Date: 26-Feb-2019

- Current Stage: 9093 - International Standard confirmed

- Start Date: 02-Jul-2024

- Completion Date: 12-Feb-2026

Overview

ISO/IEC 18039:2019 - Mixed and Augmented Reality (MAR) reference model - defines the scope, key concepts and a generalized system architecture for mixed and augmented reality. The standard provides a common vocabulary, a set of required sub‑modules and their minimum functions, and the information content and models that a compliant MAR system should provide or support. It is intended as a neutral architectural reference for describing, comparing and communicating MAR applications, components, systems, services and specifications - independent of algorithms, platforms, display types or sensor hardware.

Key topics and technical scope

- Terminology and definitions for mixed reality, augmented reality and related concepts to ensure consistent communication across projects and stakeholders.

- Generalized MAR system architecture with multiple viewpoints (enterprise, computational, information) to map design, implementation and business perspectives.

- Core computational sub‑modules described in the model, including:

  - Sensors / real‑world capturers

  - Context analyser (recognizer and tracker)

  - Spatial mapper

  - Event mapper

  - MAR execution engine

  - Renderer

  - Display and user interface

  - MAR system API

- Information viewpoint specifying the information content and information models for each submodule (sensors, recognizer, tracker, mapper, renderer, UI).

- System classification and use cases, covering classes such as visual augmentation, 3D video, GNSS/POI systems, audio and 3D audio systems, with representative examples mapped to the reference model.

- Non‑functional considerations including conformance, performance, safety, security, privacy, usability and accessibility.

- Annexes that map existing MAR solutions and concrete use cases to the reference model for practical guidance.

Practical applications and who uses it

ISO/IEC 18039:2019 is useful for:

- MAR application and system architects designing modular, interoperable AR/MR solutions.

- Developers and component vendors who need a common reference for APIs, data models and submodule responsibilities.

- Standards developers and researchers extending or creating related AR/MR specifications.

- Product managers and business strategists deriving MAR business models and comparing system classes.

- Security, privacy and usability engineers ensuring MAR deployments address non‑functional requirements.

Use cases include designing cross‑platform AR toolchains, comparing architectures across devices, defining information exchange models and documenting conformance requirements.

Related standards

ISO/IEC 18039:2019 sits within ISO/IEC JTC 1 SC 24’s remit (computer graphics, image processing and environmental data representation) and complements other standards addressing graphics, imaging and environmental data representation. Annexes A and B provide mappings to existing MAR technologies and representative system examples.

Frequently Asked Questions

ISO/IEC 18039:2019 is a standard published jointly by the International Organization for Standardization (ISO) and the International Electrotechnical Commission (IEC). Its full title is "Information technology — Computer graphics, image processing and environmental data representation — Mixed and augmented reality (MAR) reference model". It defines the scope and key concepts of mixed and augmented reality, the relevant terms and their definitions, and a generalized system architecture that together serve as a reference model for MAR applications, components, systems, services and specifications.

ISO/IEC 18039:2019 is classified under the following ICS (International Classification for Standards) categories: 35.140 - Computer graphics. The ICS classification helps identify the subject area and facilitates finding related standards.

Standards Content (Sample)

INTERNATIONAL STANDARD ISO/IEC 18039

First edition 2019-02

Information technology — Computer graphics, image processing and environmental data representation — Mixed and augmented reality (MAR) reference model

Technologies de l'information — Infographie, traitement de l'image et représentation des données environnementales — Modèle de référence en réalité mixte et augmentée

© ISO/IEC 2019

All rights reserved. Unless otherwise specified, or required in the context of its implementation, no part of this publication may be reproduced or utilized otherwise in any form or by any means, electronic or mechanical, including photocopying, or posting on the internet or an intranet, without prior written permission. Permission can be requested from either ISO at the address below or ISO’s member body in the country of the requester.

ISO copyright office

CP 401 • Ch. de Blandonnet 8

CH-1214 Vernier, Geneva

Phone: +41 22 749 01 11

Fax: +41 22 749 09 47

Email: copyright@iso.org

Website: www.iso.org

Published in Switzerland

ii © ISO/IEC 2019 – All rights reserved

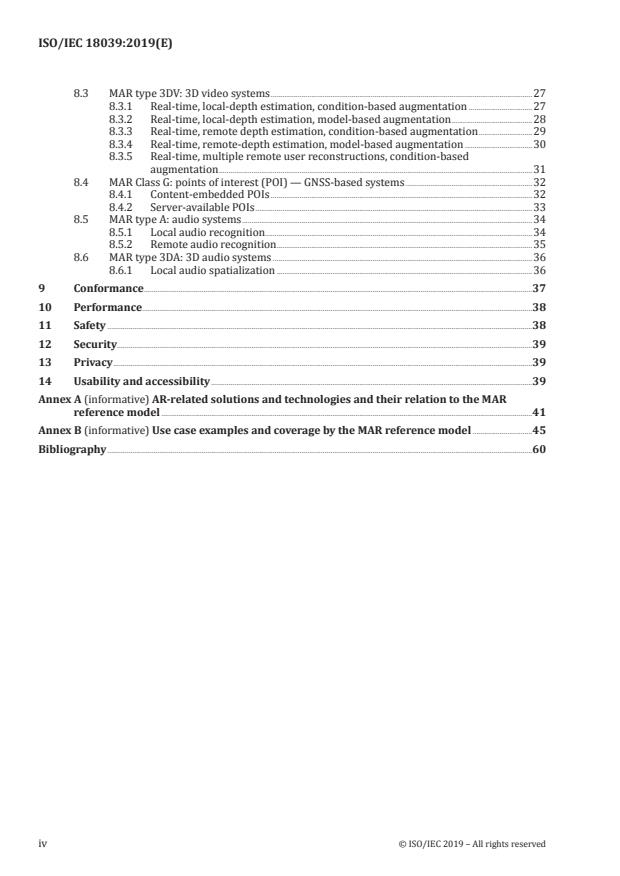

Contents

Foreword

Introduction

1 Scope

2 Normative references

3 Terms, definitions and abbreviated terms

3.1 Terms and definitions

3.2 Abbreviated terms

4 Mixed and augmented reality (MAR) domain and concepts

4.1 General

4.2 MAR continuum

5 MAR reference model usage example

5.1 Designing an MAR application or service

5.2 Deriving an MAR business model

5.3 Extending existing or creating new standards for MAR

6 MAR reference system architecture

6.1 Overview

6.2 Viewpoints

6.3 Enterprise viewpoint

6.3.1 General

6.3.2 Classes of actors

6.3.3 Business model of MAR systems

6.3.4 Criteria for successful MAR systems

6.4 Computational viewpoint

6.4.1 General

6.4.2 Sensors: pure sensor and real world capturer

6.4.3 Context analyser: recognizer and tracker

6.4.4 Spatial mapper

6.4.5 Event mapper

6.4.6 MAR execution engine

6.4.7 Renderer

6.4.8 Display and user interface

6.4.9 MAR system API

6.5 Information viewpoint

6.5.1 General

6.5.2 Sensors

6.5.3 Recognizer

6.5.4 Tracker

6.5.5 Spatial mapper

6.5.6 Event mapper

6.5.7 Execution engine

6.5.8 Renderer

6.5.9 Display and user interface

7 MAR component classification framework

8 MAR system classes

8.1 General

8.2 MAR Class V — Visual augmentation systems

8.2.1 Local recognition and tracking

8.2.2 Local registration, remote recognition and tracking

8.2.3 Remote recognition, local tracking and registration

8.2.4 Remote recognition, registration and composition

8.2.5 MAR Class V-R: visual augmentation with 3D environment reconstruction

8.3 MAR type 3DV: 3D video systems

8.3.1 Real-time, local-depth estimation, condition-based augmentation

8.3.2 Real-time, local-depth estimation, model-based augmentation

8.3.3 Real-time, remote depth estimation, condition-based augmentation

8.3.4 Real-time, remote-depth estimation, model-based augmentation

8.3.5 Real-time, multiple remote user reconstructions, condition-based augmentation

8.4 MAR Class G: points of interest (POI) — GNSS-based systems

8.4.1 Content-embedded POIs

8.4.2 Server-available POIs

8.5 MAR type A: audio systems

8.5.1 Local audio recognition

8.5.2 Remote audio recognition

8.6 MAR type 3DA: 3D audio systems

8.6.1 Local audio spatialization

9 Conformance

10 Performance

11 Safety

12 Security

13 Privacy

14 Usability and accessibility

Annex A (informative) AR-related solutions and technologies and their relation to the MAR reference model

Annex B (informative) Use case examples and coverage by the MAR reference model

Bibliography

Foreword

ISO (the International Organization for Standardization) and IEC (the International Electrotechnical Commission) form the specialized system for worldwide standardization. National bodies that are members of ISO or IEC participate in the development of International Standards through technical committees established by the respective organization to deal with particular fields of technical activity. ISO and IEC technical committees collaborate in fields of mutual interest. Other international organizations, governmental and non-governmental, in liaison with ISO and IEC, also take part in the work.

The procedures used to develop this document and those intended for its further maintenance are described in the ISO/IEC Directives, Part 1. In particular, the different approval criteria needed for the different types of document should be noted. This document was drafted in accordance with the editorial rules of the ISO/IEC Directives, Part 2 (see www.iso.org/directives).

Attention is drawn to the possibility that some of the elements of this document may be the subject of patent rights. ISO and IEC shall not be held responsible for identifying any or all such patent rights. Details of any patent rights identified during the development of the document will be in the Introduction and/or on the ISO list of patent declarations received (see www.iso.org/patents) or the IEC list of patent declarations received (see http://patents.iec.ch).

Any trade name used in this document is information given for the convenience of users and does not constitute an endorsement.

For an explanation of the voluntary nature of standards, the meaning of ISO specific terms and expressions related to conformity assessment, as well as information about ISO's adherence to the World Trade Organization (WTO) principles in the Technical Barriers to Trade (TBT) see www.iso.org/iso/foreword.html.

This document was prepared by Joint Technical Committee ISO/IEC JTC 1, Information technology, Subcommittee SC 24, Computer graphics, image processing and environmental data representation.

Any feedback or questions on this document should be directed to the user’s national standards body. A complete listing of these bodies can be found at www.iso.org/members.html.

Introduction

This document contains annexes:

- Annex A gives examples of existing MAR solutions and technologies and how they fit into the MAR reference model.

- Annex B gives examples of representative MAR systems and how their architecture maps to the MAR reference model.

INTERNATIONAL STANDARD ISO/IEC 18039:2019(E)

Information technology — Computer graphics, image processing and environmental data representation — Mixed and augmented reality (MAR) reference model

1 Scope

This document defines the scope and key concepts of mixed and augmented reality, the relevant terms and their definitions and a generalized system architecture that together serve as a reference model for mixed and augmented reality (MAR) applications, components, systems, services and specifications. This architectural reference model establishes the set of required sub-modules and their minimum functions, the associated information content and the information models to be provided and/or supported by a compliant MAR system.

The reference model is intended for use by current and future developers of MAR applications, components, systems, services or specifications to describe, compare, contrast and communicate their architectural design and implementation. The MAR reference model is designed to apply to MAR systems independent of specific algorithms, implementation methods, computational platforms, display systems and sensors or devices used.

This document does not specify how a particular MAR application, component, system, service or specification is designed, developed or implemented. It does not specify the bindings of those designs and concepts to programming languages or the encoding of MAR information through any coding technique or interchange format. This document contains a list of representative system classes and use cases with respect to the reference model.

2 Normative references

There are no normative references in this document.

3 Terms, definitions and abbreviated terms

For the purposes of this document, the following terms and definitions apply.

ISO and IEC maintain terminological databases for use in standardization at the following addresses:

- ISO Online browsing platform: available at https://www.iso.org/obp

- IEC Electropedia: available at http://www.electropedia.org/

3.1 Terms and definitions

3.1.1

augmentation

virtual object (3.1.24) data (computer-generated, synthetic) added on to or associated with target physical object (3.1.15) data (live video, real world image) in an MAR scene (3.1.9)

Note 1 to entry: This equally applies to physical object data added on to or associated with target virtual object data.

3.1.2

augmented reality

type of mixed reality system (3.1.13) in which virtual world (3.1.25) data are embedded and/or registered with the representation of physical world (3.1.16) data

3.1.3

augmented virtuality system

type of mixed reality system (3.1.13) in which physical world (3.1.16) data are embedded and/or registered with the representation of virtual world (3.1.25) data

3.1.4

display

device by which rendering results are presented to a user using various modalities such as visual, auditory, haptics, olfactory, thermal, motion

Note 1 to entry: In addition, any actuator can be considered a display if it is controlled by the MAR system.

3.1.5

feature

primitive geometric element (e.g. points, lines, polygons, colour, texture, shapes) and/or attribute of a given (usually physical) object used in its detection, recognition and tracking

3.1.6

MAR event

trigger resulting from the detection of a condition relevant to MAR content and augmentation (3.1.1)

EXAMPLE Detection of a marker.

3.1.7

MAR execution engine

collection of hardware and software elements that produce the result of combining components that represent on the one hand the real world and its objects, and on the other those that are virtual, synthetic and computer generated

3.1.8

MAR experience

human visualization and interaction of an MAR scene (3.1.9)

3.1.9

MAR scene

observable spatio-temporal organization of physical and virtual objects (3.1.24) which is the result of an MAR scene representation (3.1.10) being interpreted by an MAR execution engine (3.1.7) and which has at least one physical (3.1.15) and one virtual object

3.1.10

MAR scene representation

data structure that arranges the logical and spatial representation of a graphical scene, including the physical (3.1.15) and virtual objects (3.1.24) that are used by the MAR execution engine (3.1.7) to produce an MAR scene (3.1.9)

3.1.11

marker

metadata embedded in or associated with a physical object (3.1.15) that specifies the location of a superimposed object

3.1.12

MAR continuum

spectrum spanning physical and virtual realities according to a proportional composition of physical and virtual data representations

Note 1 to entry: Originally proposed by Milgram et al. [1].

3.1.13

mixed reality system

mixed and augmented reality system

system that uses a mixture of representations of physical world (3.1.16) data and virtual world (3.1.25) data as its presentation medium

3.1.14

natural feature

feature (3.1.5) that is not artificially inserted for the purpose of easy detection/recognition/tracking

3.1.15

physical object

real object

object that exists in the real world

3.1.16

physical world

physical reality

spatial organization of multiple physical objects (3.1.15)

3.1.17

point of interest

single or collection of target locations

Note 1 to entry: Aside from location data, a point of interest is usually associated with metadata such as identifier and other location specific information.

3.1.18

recognizer

MAR component (hardware and software) that processes sensor (3.1.19) output and generates MAR events (3.1.6) based on conditions indicated by the content creator

3.1.19

sensor

device that returns detected values related to detected or measured condition or property

Note 1 to entry: Sensor may be an aggregate of sensors.

3.1.20

spatial registration

establishment of the spatial relationship or mapping between two models, typically between virtual object (3.1.24) and target physical object (3.1.15)

3.1.21

target image

target object (3.1.22) represented by a 2D image

3.1.22

target object

physical (3.1.15) or virtual object (3.1.24) that is designated, designed or chosen to allow detection, recognition and tracking, and finally augmentation (3.1.1)

3.1.23

tracker

MAR component (hardware and software) that analyses signals from sensors (3.1.19) and provides some characteristics of tracked entity (e.g. position, orientation, amplitude, profile)

3.1.24

virtual object

computer-generated entity that is designated for augmentation (3.1.1) in association with a physical object (3.1.15) data representation

Note 1 to entry: In the context of MAR, it usually has perceptual (e.g. visual, aural) characteristics and, optionally, dynamic reactive behaviour.

3.1.25

virtual world

virtual environment

spatial organization of multiple virtual objects (3.1.24), potentially including global behaviour
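As an informal illustration of how definitions 3.1.9, 3.1.10, 3.1.15 and 3.1.24 relate, the following Python sketch models a scene representation and checks the "at least one physical and one virtual object" condition. The class names, fields and methods are hypothetical and are not defined by this document, which deliberately specifies no language bindings.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class PhysicalObject:
    """Object that exists in the real world (3.1.15)."""
    name: str


@dataclass
class VirtualObject:
    """Computer-generated entity designated for augmentation (3.1.24)."""
    name: str
    target: str  # name of the physical object it is associated with (assumed field)


@dataclass
class MARSceneRepresentation:
    """Hypothetical container for the objects an execution engine interprets (3.1.10)."""
    physical: List[PhysicalObject] = field(default_factory=list)
    virtual: List[VirtualObject] = field(default_factory=list)

    def yields_mar_scene(self) -> bool:
        # 3.1.9: an MAR scene has at least one physical and one virtual object.
        return bool(self.physical) and bool(self.virtual)

    def unregistered(self) -> List[str]:
        # Names of virtual objects whose target is not a known physical object.
        known = {p.name for p in self.physical}
        return [v.name for v in self.virtual if v.target not in known]


scene = MARSceneRepresentation(
    physical=[PhysicalObject("marker_card")],
    virtual=[VirtualObject("fish", target="marker_card")],
)
print(scene.yields_mar_scene())  # True
print(scene.unregistered())      # []
```

A representation holding only virtual objects would fail the check, which mirrors the exclusion of pure VR from the scope of the model.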

3.2 Abbreviated terms

| Abbreviated term | Definition |
| --- | --- |
| API | Application program interface |
| AR | Augmented reality |
| AVH | Audio, visual, haptic |
| GNSS | Global navigation satellite system |
| MAR | Mixed and augmented reality |
| MAR-RM | Mixed and augmented reality reference model |
| MR | Mixed reality |
| POI | Points of interest |
| PTAM | Parallel tracking and mapping |
| SLAM | Simultaneous localization and mapping |
| UI | User interface |
| VR | Virtual reality |

4 Mixed and augmented reality (MAR) domain and concepts

4.1 General

MAR refers to a spatially coordinated combination of media/information components that represent on the one hand the real world and its objects, and on the other those that are virtual, synthetic and computer generated. The virtual component can be represented and presented in many modalities (e.g. visual, aural, touch, haptic, olfactory) as illustrated in Figure 1. The figure shows an MAR system in which a virtual fish is augmented above a real world object (registered by using markers), visually, aurally and haptically.

SOURCE Magic Vision Lab, University of South Australia, reproduced with the permission of the authors

Figure 1 — The concept of MAR as a combination of representations of physical objects and computer mediated virtual objects in various modalities (e.g. text, voice and force feedback)

Through such combinations, the physical (or virtual) object can be presented in an information-rich fashion through augmentation with the virtual (or real) counterpart. Thus, the idea of spatially-coordinated combination is important for highlighting the mutual association between the physical and virtual worlds. This is also often referred to as registration and can be done in various dimensions. The most typical registration is spatial, where the position and orientation of a real object are computed and used to control the position and orientation of a virtual object. Temporal registration can also occur when the presence of a real object is detected and a virtual object is to be displayed. Registration precision can vary in its degree of tightness (as illustrated in Figure 2). For example, in the spatial dimension, it can be measured in terms of distance or angles; in the temporal dimension, in terms of milliseconds.

NOTE Virtual brain imagery tightly registered on a real human body image is shown on the left-hand side [2] and tourist information overlaid less tightly over a street scene is shown on the right-hand side [3].

Figure 2 — The notion of registration precision at different degrees
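Spatial registration and its precision can be sketched in a few lines of Python. The example below assumes a simplified pose (a 3D position in metres plus a single yaw angle in degrees); the `Pose` type and both function names are hypothetical and serve only to illustrate the idea, not to describe how any conforming system computes registration.

```python
import math
from dataclasses import dataclass


@dataclass
class Pose:
    """Simplified, hypothetical pose: position in metres, heading (yaw) in degrees."""
    x: float
    y: float
    z: float
    yaw_deg: float


def register(tracked: Pose, offset: Pose) -> Pose:
    """Place a virtual object relative to a tracked physical object.

    The offset is expressed in the physical object's own frame, so it is
    rotated by the object's yaw before being added to its position.
    """
    yaw = math.radians(tracked.yaw_deg)
    dx = offset.x * math.cos(yaw) - offset.y * math.sin(yaw)
    dy = offset.x * math.sin(yaw) + offset.y * math.cos(yaw)
    return Pose(tracked.x + dx, tracked.y + dy, tracked.z + offset.z,
                (tracked.yaw_deg + offset.yaw_deg) % 360.0)


def registration_error(estimated: Pose, reference: Pose):
    """Return (distance error in metres, angular error in degrees)."""
    distance = math.dist((estimated.x, estimated.y, estimated.z),
                         (reference.x, reference.y, reference.z))
    diff = abs(estimated.yaw_deg - reference.yaw_deg) % 360.0
    return distance, min(diff, 360.0 - diff)


# A virtual object anchored 0.5 m in front of and 0.2 m above a tracked marker.
virtual_pose = register(Pose(1.0, 2.0, 0.0, 90.0), Pose(0.5, 0.0, 0.2, 0.0))
print(virtual_pose)
print(registration_error(Pose(0.0, 0.0, 0.0, 350.0), Pose(3.0, 4.0, 0.0, 10.0)))
```

A tightly registered overlay would keep both error values small, whereas a loosely registered label could tolerate much larger ones; temporal precision (latency in milliseconds) is not modelled here.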

An MAR system refers to real-time processing [4]. For example, while a live closed-captioned broadcast would qualify as an MAR service, an offline production of a subtitled movie would not.

4.2 MAR continuum

Since an MAR system or its contents combines real and virtual components, an MAR continuum can be defined according to the relative proportion of the real and virtual, encompassing the physical reality (“All physical, no virtual”) on one end and the VR (“All virtual, no physical”) on the other end (as illustrated in Figure 3) [1]. A single instance of a system at any point on this continuum that uses a mixture of both real and virtual presentation media is called an MR system. In addition, for historical reasons, MR is often synonymously or interchangeably used with AR, which is actually a particular type of MR (see Clause 7). In this document, the term “mixed and augmented reality” is used to avoid such confusion and emphasize that the same model applies to all combinations of real and digital components along the continuum. The two extreme ends in the continuum (the physical reality and the VR) are not in the scope of this document.

Figure 3 — The MAR (or reality-virtuality) continuum [1]: definition of different types of MR according to the relative portion between the real world representation and the virtual

Two notable types of MAR or points in the continuum are the AR and augmented virtuality. An AR system is a type of mixed reality system in which the medium representing the virtual objects is embedded into the medium representing the physical world (e.g. video). In this case, the physical reality makes up a larger proportion of the final composition than the computer-generated information. An augmented virtuality system is a type of MR system in which the medium representing physical objects (e.g. video) is embedded into the computer-generated information (as illustrated in Figure 3).
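The continuum can be pictured as a single number between 0 and 1. The sketch below is only an illustration: the `virtual_share` measure and the 0.5 boundary between AR and augmented virtuality are assumptions made for this example, since this document describes the proportion qualitatively and defines no numeric threshold.

```python
def classify(virtual_share: float) -> str:
    """Label a point on the MAR continuum.

    virtual_share is a hypothetical measure in [0, 1] of how much of the
    final composition is computer generated; the 0.5 boundary is an
    illustrative assumption, not a value taken from the reference model.
    """
    if not 0.0 <= virtual_share <= 1.0:
        raise ValueError("virtual_share must be within [0, 1]")
    if virtual_share == 0.0:
        return "physical reality (out of scope)"
    if virtual_share == 1.0:
        return "virtual reality (out of scope)"
    if virtual_share < 0.5:
        # Physical reality makes up the larger proportion.
        return "augmented reality"
    return "augmented virtuality"


for share in (0.0, 0.2, 0.8, 1.0):
    print(share, "->", classify(share))
```

Both intermediate labels denote MR systems; only the two end points fall outside the scope of the reference model.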

5 MAR reference model usage example

5.1 Designing an MAR application or service

The MAR reference model is a reference guide in designing an MAR service and developing an MAR system, application or content. With respect to the given application (or service) requirements, the designer may refer to and select the necessary components from those specified in the MAR reference architecture (see Clause 6). The functionalities, the interconnections between components, the data/information model for input and output, and relevant existing standards for various parts can be cross-checked to ensure generality and completeness. The component classification scheme described in Clause 7 can help the designer to specify a more precise scope and capabilities, while the specific system classes defined in Clause 8 can facilitate the process of model, system or service refinement.
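One way to picture this cross-check is as a simple mapping exercise. In the Python sketch below, the component names follow the subclause titles of the computational viewpoint (6.4.2 to 6.4.9), while the function name and the module names in the example design are hypothetical. Since a designer selects only the components that are necessary, an unmapped component is a prompt for review rather than an error.

```python
# Component names taken from the subclause titles of 6.4.
REFERENCE_COMPONENTS = (
    "sensors",
    "context analyser",
    "spatial mapper",
    "event mapper",
    "MAR execution engine",
    "renderer",
    "display and user interface",
    "MAR system API",
)


def unmapped(design: dict) -> list:
    """Return the reference components that the design does not map to a module."""
    return [name for name in REFERENCE_COMPONENTS if design.get(name) is None]


# Hypothetical design: module names are invented for illustration.
design = {
    "sensors": "phone_camera",
    "context analyser": "marker_tracker",
    "MAR execution engine": "scene_runtime",
    "renderer": "gl_renderer",
    "display and user interface": "phone_screen",
}
print(unmapped(design))  # ['spatial mapper', 'event mapper', 'MAR system API']
```

Running the same check over two designs gives a quick, like-for-like comparison of which parts of the reference architecture each one covers.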

5.2 Deriving an MAR business model

The MAR-RM document introduces an enterprise viewpoint with the objective of specifying the industrial ecosystem, identifying the types of actors and describing various value chains. A set of business requirements is also expressed. Based on this viewpoint, companies may identify current business models or invent new ones.

5.3 Extending existing or creating new standards for MAR

Another expected usage of the MAR-RM is in extending or creating new application standards for MAR functionalities. MAR is an interdisciplinary application domain involving many different technologies, solutions and information models, and naturally there are ample opportunities for extending existing technology solutions and standards for MAR. The MAR-RM can be used to match and identify the components that may require extension and/or new standardization. The computational and information models can provide the initial and minimum basis for such extensions or for new standards. In addition, strategic plans for future standardization can be made. In the case when competing de facto standards exist, the reference model can be used to make comparisons and evaluate their completeness and generality. Based on this analysis and the maturity of the standards, incorporation of de facto standards into open ones may be considered (e.g. markers, API, POI constructs).

6 MAR reference system architecture

6.1 Overview

An MAR system requires several different components to fulfil its basic objectives: real-time recognition of the physical world context, the registration of target physical objects with their corresponding virtual objects, display of MAR content and handling of user interaction(s). A high-level representation of the typical components of an MAR system is given in Figure 4. The central pink area indicates the scope of the MAR-RM. The blue round boxes are the main computational modules and the dotted box represents the required information constructs. Arrows indicate data flow. Control signals are not explicitly shown, to avoid particular technical or implementation details in this document. Also, this reference system architecture should not be taken as something rigid and unchangeable, but as a depiction of a typical case at the macro scale. Flexibility exists in actual application.

The MAR execution engine has a key role in the overall architecture and is responsible for:

- processing the content as specified and expressed in the MAR scene, including additional media content provided in media assets;

- processing the user input(s);

- processing the context provided by the sensors capturing the real world;

- managing the presentation of the final result (aural, visual, haptic and commands to additional actuators); and

- managing the communication with additional services.

Figure 4 — Major components and their interconnection in an MAR system at a high macro level
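The data flow described above can be sketched as a toy Python pipeline. Everything here is an assumption made for illustration: the `SensorFrame` type, the function and class names, and the string commands are hypothetical, and the sketch collapses the spatial mapper, event mapper, renderer and display into a single step. It is not an implementation of the reference model.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple


@dataclass
class SensorFrame:
    """Hypothetical sensor output: marker ids seen in a frame and their positions."""
    markers: Dict[str, Tuple[float, float]]


def recognizer(frame: SensorFrame, wanted: str) -> Optional[str]:
    # Generates an MAR event when the condition set by the content creator holds.
    return f"detected:{wanted}" if wanted in frame.markers else None


def tracker(frame: SensorFrame, target: str) -> Optional[Tuple[float, float]]:
    # Provides a characteristic of the tracked entity (here, its position).
    return frame.markers.get(target)


class ExecutionEngine:
    """Combines sensed context and user input with the scene content (illustrative only)."""

    def __init__(self, scene: Dict[str, str]):
        self.scene = scene  # target marker id -> virtual object name

    def step(self, frame: SensorFrame, user_input: Optional[str] = None) -> List[str]:
        commands = []
        for target, virtual in self.scene.items():
            event = recognizer(frame, target)
            position = tracker(frame, target)
            if event and position is not None:
                commands.append(f"draw {virtual} at {position}")
        if user_input:
            commands.append(f"handle input {user_input}")
        return commands  # would be handed to a renderer and then a display


engine = ExecutionEngine({"card": "fish"})
print(engine.step(SensorFrame({"card": (0.4, 0.6)}), user_input="tap"))
# ['draw fish at (0.4, 0.6)', 'handle input tap']
```

Keeping recognition, tracking and execution as separate units mirrors the separation of modules in the architecture, so that any one of them could run locally or remotely without changing the others.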

6.2 Viewpoints

In order to detail the global architecture presented in Figure 4, the reference model considers three

analysis angles, called viewpoints: enterprise, computation and information. This viewpoint-wise

exposition permits readers, who can be interested in or focused on particular aspects or viewpoints,

to better understand the MAR architecture. The definition of each viewpoint is provided in Table 1. In

this subclause, the terms "view" and "viewpoint" are used in the context of information modelling and

establishing a reference architecture. They should not be confused with the same term that refers to

the location of the virtual camera in computer graphics or virtual environments.

The notion of view is separate from that of the viewpoint: a viewpoint identifies the set of concerns,

representations and modelling techniques used to describe the architecture to address those concerns,

and a view is the result of applying a viewpoint to a particular system [5].

Table 1 — Definitions of the MAR viewpoints

| Viewpoint | Viewpoint definition | Topics covered by MAR-RM |
|---|---|---|
| Enterprise | Articulates the business entities in the system that should be understandable by all stakeholders. This focuses on purpose, scope and policies, and introduces the objectives of different actors involved in the field. | Actors and their roles. Potential business models for each actor. Desirable characteristics for the actors at both ends of the value chain (creators and users). |
| Computational | Identifies the functionalities of system components and their interfaces. Specifies the services and protocols that each component exposes to the environment. | Services provided by each AR main component. Interface description for some use cases. |
| Information | Provides the semantics of information in the different components in the views, the overall structure and abstract content type, as well as information sources. Describes how the information is processed inside each component. This view does not provide a full semantic and syntax of data but only a minimum of functional elements, and should be used to guide the application developer or standard creator for creating their own information structures. | Context information such as spatial registration, captured video and audio. Content information such as virtual objects, application behaviour and user interaction(s) management. Service information such as remote processing of the context data. |

6.3 Enterprise viewpoint

6.3.1 General

The Enterprise viewpoint (see Figure 5) describes the actors involved in an MAR system, their

objectives, roles and requirements. The actors can be classified according to their role. Several types of

actors from the list in 6.3.2 can commercially exploit an MAR system.

Key

MARATC MAR authoring tools creator

CA content aggregator

TO telecommunication operator

MAR EC MAR experience creator

CC content creator

DM device manufacturer

DMCP device middleware/component provider

EUP MAR consumer/end user profile

SMCP service middleware/component provider

MARSP MAR service provider

Figure 5 — The “Enterprise viewpoint” of an MAR system and the main actors

6.3.2 Classes of actors

6.3.2.1 Class 1: providers of authoring/publishing capabilities

— MAR authoring tools creator: a software platform provider of the tool used to create (author) an

MAR-enabled application or service; the output of the MAR authoring tool is called MAR scene

representation.

— MAR experience creator: a person that designs and implements an MAR-enabled application or

service.

— Content creator: a designer (person or organization) that creates multimedia content (scenes,

objects); even the end user of the MAR system can be the designer of the content.

6.3.2.2 Class 2: providers of MAR execution engine components

— Device manufacturer: an organization that produces devices in charge of augmentation and used as

end-user terminals.

— Device middleware/component provider: an organization that creates and provides hardware,

software and/or middleware for the augmentation device, which can be one of the following

modules:

— multimedia player or browser engine provider (rendering, interaction engine, execution, etc.);

— context knowledge provider (satellites, etc.); or

— sensor manufacturers (inertial, geomagnetic, camera, microphone, etc.).

6.3.2.3 Class 3: service providers

— MAR service provider: an organization that discovers and delivers services.

— Content aggregator: an organization aggregating, storing, processing and serving content.

— Telecommunication operator: an organization that manages telecommunication among other actors.

— Service middleware/component provider: an organization that creates and provides hardware,

software and/or middleware for processing servers, including services such as:

— location providers (network-based location services, image databases, RFID-based location, etc.); or

— semantic providers (indexed image or text databases, etc.).

6.3.2.4 Class 4: MAR end user

The MAR consumer and end-user profile is a person who experiences the real world synchronized with

digital assets and uses an MAR scene representation, an MAR execution engine and MAR services in

order to satisfy information access and communication needs. By means of their digital information

display and interaction devices, such as smart phones, desktops and tablets, users of MAR hear, see

and/or feel digital information associated with natural features of the real world, in real time.
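
The four classes of actors and the abbreviations from the key of Figure 5 can be collected in a small lookup table. The sketch below is only a reading aid: the names `ActorClass`, `ACTORS` and `actors_in` are hypothetical and not defined by this document, while the abbreviations and class assignments are taken from 6.3.2.

```python
from enum import Enum


class ActorClass(Enum):
    """Actor classes of 6.3.2 (enum member names are illustrative)."""

    AUTHORING_PUBLISHING = 1         # 6.3.2.1
    EXECUTION_ENGINE_COMPONENTS = 2  # 6.3.2.2
    SERVICE_PROVIDERS = 3            # 6.3.2.3
    END_USER = 4                     # 6.3.2.4


# Abbreviation (as written in the Figure 5 key) -> (actor name, actor class)
ACTORS = {
    "MARATC": ("MAR authoring tools creator", ActorClass.AUTHORING_PUBLISHING),
    "MAR EC": ("MAR experience creator", ActorClass.AUTHORING_PUBLISHING),
    "CC": ("content creator", ActorClass.AUTHORING_PUBLISHING),
    "DM": ("device manufacturer", ActorClass.EXECUTION_ENGINE_COMPONENTS),
    "DMCP": ("device middleware/component provider",
             ActorClass.EXECUTION_ENGINE_COMPONENTS),
    "MARSP": ("MAR service provider", ActorClass.SERVICE_PROVIDERS),
    "CA": ("content aggregator", ActorClass.SERVICE_PROVIDERS),
    "TO": ("telecommunication operator", ActorClass.SERVICE_PROVIDERS),
    "SMCP": ("service middleware/component provider",
             ActorClass.SERVICE_PROVIDERS),
    "EUP": ("MAR consumer/end user profile", ActorClass.END_USER),
}


def actors_in(actor_class):
    """Return the abbreviations of all actors that belong to the given class."""
    return sorted(abbr for abbr, (_, cls) in ACTORS.items() if cls is actor_class)
```

For example, `actors_in(ActorClass.EXECUTION_ENGINE_COMPONENTS)` lists the two Class 2 actors, the device manufacturer and the device middleware/component provider.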

6.3.3 Business model of MAR systems

The different business models of the actors in the MAR system are as follows.

— The MAR authoring tools creator may provide the authoring software or content environment to an

MAR experience creator. Such tools range in complexity from full programming environments to

relatively easy-to-use online content creation systems.

— The content creator prepares a digital asset (text, picture, video, 3D model, animation, etc.) that may

be used in the MAR experience.

— An MAR experience creator creates an MAR experience in the form of an MAR rich media

representation. They can associate media assets with features in the real world, transforming

them into MAR-enabled digital assets. The MAR experience creator also defines the global and/or

local behaviour of the MAR experience. The creator should consider the performance of obtaining

and processing the context, as well as the performance of the AR engine. A typical case is where the

MAR experience creator specifies a set of minimal requirements to be satisfied by the hardware or

software components.

— A middleware/component provider produces the components necessary for core enablers to provide

key software and hardware technologies in the fields of sensors, local image processing, display,

remote computer vision and remote processing of sensor data for MAR experiences. There are

two types of middleware/component providers: device (executed locally) and services (executed

remotely).

— An MAR service provider supports the delivery of MAR experiences. This can be via catalogues that

assist in discovering an MAR experience.

6.3.4 Criteria for successful MAR systems

The Enterprise-related requirements for the successful implementation of an MAR system are expressed

with respect to two types of actors, creators and end users. While the end-user experience for MAR should be more engaging

than browsing Web pages, it should be possible to create, transport and consume MAR experiences

with the same ease as is currently possible for Web pages.

6.4 Computational viewpoint

6.4.1 General

The Computational viewpoint (see Figure 6) describes the overall interworking of an MAR system. It

identifies major processing components (hardware and software), defines their roles and describes

how they interconnect.

Figure 6 — Computational viewpoint: illustrating and identifying the major computational

blocks in the MAR system and service

6.4.2 Sensors: pure sensor and real world capturer

A sensor is a hardware (and optionally software) component able to measure specific physical

properties. In the context of MAR, a sensor is used to detect, recognize and track the target physical

object to be augmented. In this case, it is called a “pure sensor”.

Another use of a sensor is to capture and stream to the execution engine the data representation of the

physical world or objects for composing an MAR scene. In such a case, it is called a “real world capturer”.

A typical example is the video camera that captures the real world as a video for use as a background in

an augmented reality scene. Another example is augmented virtuality, where a person is filmed in the

real world and the corresponding video is embedded into a virtual world. The captured real world data

can be in any modality, such as visual, aural or haptic. The real world data can be sensed and captured

ahead of time or remotely (i.e. not in the local space) in various formats, including 3D models, point clouds

and image-based (light field) models.

A sensor can measure different physical properties, and interpret and convert these observations

into digital signals. The captured data can be used (1) to compute only the context in the tracker and

recognizer, or (2) to both compute the context and contribute to the composition of the scene. Depending

on the nature of the physical property, different types of devices can be used (cameras, environmental

sensors, etc.). One or more sensors can simultaneously capture signals.

The input and output of the sensors are:

— input: real world signals; and

— output: sensor observations with or without additional metadata (position, time, etc.).

The sensors can be categorized as set out in Table 2.

Table 2 — Sensor categories

| Dimension | Types |
|---|---|
| 1. Modality and type of the sensed and/or captured data | Visual; Auditory; Electro-magnetic waves (e.g. GNSS); Haptic/tactile; Temperature; Other physical properties |
| 2. State of sensed and/or captured data | Live; Pre-captured |
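
The sensor output and the two dimensions of Table 2 can be expressed as a small data structure. This is a minimal sketch, assuming a single observation record per sensor reading: the names `Modality`, `DataState`, `SensorObservation` and the `used_in_scene` flag are hypothetical and not defined by this document.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Any


class Modality(Enum):
    """Dimension 1 of Table 2: modality and type of the sensed/captured data."""

    VISUAL = "visual"
    AUDITORY = "auditory"
    ELECTROMAGNETIC = "electro-magnetic waves"
    HAPTIC_TACTILE = "haptic/tactile"
    TEMPERATURE = "temperature"
    OTHER = "other physical properties"


class DataState(Enum):
    """Dimension 2 of Table 2: state of the sensed/captured data."""

    LIVE = "live"
    PRE_CAPTURED = "pre-captured"


@dataclass
class SensorObservation:
    """Sensor output: an observation with or without additional metadata."""

    modality: Modality
    state: DataState
    payload: Any
    metadata: dict = field(default_factory=dict)  # optional: position, time, etc.
    used_in_scene: bool = False  # assumption: True if the data also composes the scene

    def role(self):
        # Data used only to compute context comes from a "pure sensor";
        # data that also contributes to the scene comes from a "real world capturer".
        return "real world capturer" if self.used_in_scene else "pure sensor"
```

A live camera frame used as the scene background would be a `VISUAL`, `LIVE` observation with `used_in_scene=True`, whereas a GNSS fix used only for tracking would keep the default and report the role "pure sensor".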

6.4.3 Context analyser: recognizer and tracker

The context analyser is composed of the recognizer and the tracker.

The recognizer is a hardware or software component that analyses signals from the real world and

produces MAR events and data by comparing these with a local or remote target signal (i.e. target for

augmentation).

The tracker is able to detect and measure changes of the properties of the target signals (e.g. pose,

orientation, volume).
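
The division of labour between the two components can be illustrated as follows. This is a toy sketch, not a method prescribed by this document: feature sets, the overlap threshold `min_overlap`, and the names `MarEvent`, `recognize` and `Tracker` are all assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class MarEvent:
    """Hypothetical MAR event emitted when a target is recognized."""

    target_id: str
    recognized: bool


def recognize(observation: set, targets: dict, min_overlap: float = 0.5) -> Optional[MarEvent]:
    """Compare observed features with stored target signals.

    Similarity is measured as set overlap (intersection over union); this
    measure and the threshold are assumptions chosen for simplicity.
    """
    for target_id, features in targets.items():
        union = observation | features
        if union and len(observation & features) / len(union) >= min_overlap:
            return MarEvent(target_id, True)
    return None


class Tracker:
    """Measures changes in numeric properties of a target between updates."""

    def __init__(self):
        self.last = None  # properties seen at the previous update, if any

    def update(self, properties: dict) -> dict:
        # The first update has nothing to compare against, so no change is reported.
        if self.last is None:
            changes = {}
        else:
            changes = {k: v - self.last[k] for k, v in properties.items() if k in self.last}
        self.last = dict(properties)
        return changes
```

Here the recognizer answers whether a known target is present and produces an event, while the tracker reports how the target's properties (for example orientation or volume) have changed since the previous observation.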

Recognition can only be based on prior captured target signals. Both the recognizer and the tracker can

be con

...
